Recently, a high-profile psychology paper involving a reproducibility guru AND a paranormality believer was retracted, so I forced Smut Clyde to write the backstory of it.

This was the retracted paper:

John Protzko, Jon Krosnick, Leif Nelson, Brian A. Nosek, Jordan Axt, Matt Berent, Nicholas Buttrick, Matthew DeBell, Charles R. Ebersole, Sebastian Lundmark, Bo MacInnis, Michael O’Donnell, Hannah Perfecto, James E. Pustejovsky, Scott S. Roeder, Jan Walleczek, Jonathan W. Schooler. High replicability of newly discovered social-behavioural findings is achievable. Nature Human Behaviour (2023) doi: 10.1038/s41562-023-01749-9

Its prominent coauthors:

- Brian Nosek, GOD of research integrity and research reproducibility, at least in psychology and neuroscience. This psychology professor at the University of Virginia in the USA is the founder of the Center for Open Science (COS), of its preprint server PsyArXiv, and of the Reproducibility Project, where Nosek and his team first set out to reproduce 100 psychology studies, and then 53 papers on cancer research.

- Leif Nelson, economy professor at University of California Berkeley, one of the 3 guys behind the Data Colada blog which exposed several high profile fraud cases in psychology, most recently those of Francesca Gino and Dan Ariely. Data Colada bloggers were then sued by Gino, and won.

- Jonathan Schooler, psychology professor at the University of California, Santa Barbara, firm believer in extrasensory perception (“precognition could be real”) and a fan of the parapsychologist Daryl Bem. Smut Clyde’s story is about Bem and Schooler.

Published a year ago, the paper was celebrated by Nosek as scientific proof that his COS achievements in preregistration and reproducibility are saving science (“The Reforms Are Working”). Science itself was also celebrating in November 2023:

“Now, one of the first systematic tests of these practices in psychology suggests they do indeed boost replication rates. When researchers “preregistered” their studies—committing to a written experiment and data analysis plan in advance—other labs were able to replicate 86% of the results, they report today in Nature Human Behaviour. That’s much higher than the 30% to 70% replication rates found in other large-scale studies. […]

It’s the first replication attempt that has followed studies from their conception through to independent replication, says Brian Nosek, executive director of the Center for Open Science and one of the four lab directors. Rather than choose a sample of studies from the literature retrospectively, he and his colleagues wanted to track whether they could more easily replicate work that had tried to improve rigor right from the start: “And we succeeded!””

Well, they did not. The study was already criticised back then by Berna Devezer from the University of Idaho in the USA and Joseph Bak-Coleman from the Max Planck Institute in Konstanz, Germany, who protested that the 16 findings selected for replication were neither randomly selected nor representative, i.e. they were more likely to be reproducible. Devezer and Bak-Coleman published their criticism on OSF PsyArXiv; it now also accompanies the retraction as a Matters Arising, and there is a detailed blog post by Bak-Coleman.

The retraction notice for Nosek’s paper from 24 September 2024 stated:

“The Editors are retracting this article following concerns initially raised by Bak-Coleman and Devezer¹.

The concerns relate to lack of transparency and misstatement of the hypotheses and predictions the reported meta-study was designed to test; lack of preregistration for measures and analyses supporting the titular claim (against statements asserting preregistration in the published article); selection of outcome measures and analyses with knowledge of the data; and incomplete reporting of data and analyses.

Post-publication peer review and editorial examination of materials made available by the authors upheld these concerns. As a result, the Editors no longer have confidence in the reliability of the findings and conclusions reported in this article. The authors have been invited to submit a new manuscript for peer review.

All authors agree to this retraction due to incorrect statements of preregistration for the meta-study as a whole but disagree with other concerns listed in this note.”

It’s more like a big ball of wibbly wobbly… time-y wimey… stuff

By Smut Clyde

This Isn’t-it-Ironic retraction happened a month ago. Leonid asked me to delve into its background and I responded with my usual timeliness. So gather round the campfire, everyone, while Uncle Smut regales you with another blood-chilling, spine-curdling tale… this time, about psychologists not sciencing properly. It shall be brief, and sadly deficient in novelty. Better information is available from Bak-Coleman’s blogpost; and from Daniel Engber at Slate.

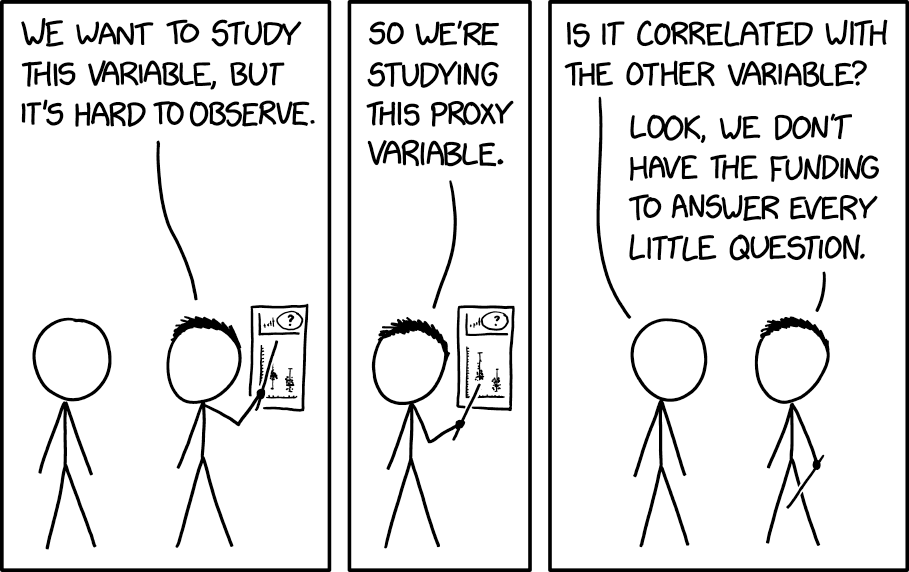

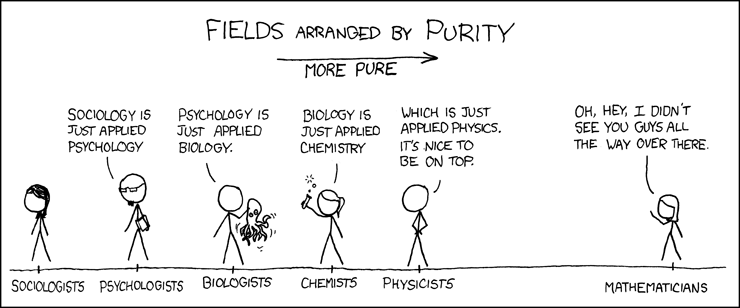

We’re dealing in particular with psychometrics, the quantitative mode of academic psychology, where there are psychological variables (constructs) to be measured. The thing to bear in mind is that the variables are not directly observable. The measurements might come from asking people to assign numbers to items in a questionnaire, or from scoring behavioural measures (“How many times did the subject scratch their nose during the interview?”), but either way they are separated from the variables by a whole nomological network. It’s like trying to map a world made entirely from jelly, using surveyor instruments that are also made of jelly (and calibrated against jelly benchmarks). Psychology is hard. The presence of means and standard deviations and ANOVA tests does not transform psychology into an easy discipline like physics.

Psychology also gave us Daryl Bem’s precognition studies. Who knew that I was going to write that? Every backstory has a backstory of its own, but how Professor Bem became a reluctant convert to the idea of additional human senses beyond the recognised ones – specifically, a precognitive sense – is not relevant here. All that matters is that over many years he conducted a series of experiments to legitimise this ignis fatuus with all the rigor he could summon. Nine separate sub-trials used tasks and stimuli designed to engage his subjects’ emotions and invoke this fugitive, unreliable mode of perception. His results met the standards of Journal of Personality & Social Psychology (JPSP) and were published in 2011, under the title “Feeling the future: Experimental evidence for anomalous retroactive influences on cognition and affect”.

Seven years of labor on the instruments of time

Those studies receive a lot of credit as a driving force in transforming how science is done. Unintentionally, Bem laid bare the institutional bias in psychology publishing that favours bullshit, and inspired the novel concept that (hear me out) the results of psychology experiments should be reproducible. For Richard Wiseman and Stuart Ritchie tried but failed to replicate Bem’s observations, and then couldn’t get the failure published… the journals were not merely uninterested in negative replication trials but were in fact actively antagonistic to the idea of replication trials at all, whether successful or not. Editors shared a tacit acceptance that ‘trying to replicate’ should be eschewed to avoid disappointment for everyone. Submissions to JPSP and then two other journals were rejected by the editors; at a fourth journal the manuscript was sent out for review but rejected on the advice of Reviewer #2 (i.e. Daryl Bem). But PLoS One finally published it, Huzzah!

“This journal does not publish replication studies, whether successful or unsuccessful”.

New Scientist (2011)

Then things heated up for a while, with Animated Exchanges of Views within the scholarly societies. It wasn’t just about Bem any more; his precognition studies were just one symptom of a broader problem with the popularity of Just-So stories within psychology (e.g. the ‘Social Priming’ school of fantasy fiction). Simmons et al. were inspired to write “False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant” to show how easily a confession could be tortured out of perfectly innocent data… notably, a confession that listening to Beatles music made the subjects chronologically younger.

It grieves me to report that the aspect of the debate given most prominence in the media was the nature of the visual stimuli that Bem picked to activate the posited precognitive skills of his college-age participants, which is to say, erotic ones. Soft Retro porn if you like. That got Leonid’s interest too, but you and I are above such prurient details.

“Wu didn’t want to say out loud that the professor’s porno pictures weren’t hot, so she lied: Yeah, sure, they’re erotic.”

Slate (2017)

Daniel Kahneman was forced to weigh in, telling researchers that they needed to commit themselves to the principle of reproducible results or else he would have to turn the car around and drive home. Even then, not everyone agreed that psychology needed to revise its methods, and I was glad to have my expectations confirmed when the absolute plonker who reacted to Kahneman’s manifesto by comparing reformers and replication-demanders to climate-change denialists turned out to be a prominent social primer, Norbert Schwarz. I am not making this up.

“You can think of this as psychology’s version of the climate-change debate,” says Schwarz. “The consensus of the vast majority of psychologists closely familiar with work in this area gets drowned out by claims of a few persistent priming sceptics.”

Nature (2012)

After the recriminations and soul-searching died down, people were more widely aware that the literature of behavioural / social psychology is a quagmire of flim-flam, dominated by a paradigm where authors would throw all their data into a box and shake it about until a coincidental but publishable correlation fell out of the crosstabs tables somewhere. There was a growing sense that p-hacking was not the optimal approach. Brian Wansink (now buried under obloquy rather than admiration) was a victim of the change in the wind.
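To see why shaking the box works so well, here is a minimal sketch in Python. It is an illustration only, not anything taken from the papers discussed: it assumes pure-noise data, two groups of 20 subjects, unit variance and a plain two-sided z-test, and every function name and parameter is made up for the example. With a single outcome, about 5% of null studies come up ‘significant’; with 20 independent outcomes to choose from, the chance that at least one does is 1 − 0.95^20, roughly 64%.

```python
import random
from statistics import NormalDist, fmean


def p_value_two_groups(a, b):
    """Two-sided z-test for a difference in means.

    Assumes equal group sizes and a known variance of 1 (true here,
    because the simulated data are standard normal noise).
    """
    n = len(a)
    z = (fmean(a) - fmean(b)) / (2 / n) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


def study_finds_something(rng, n_per_group, n_outcomes, alpha=0.05):
    """Run one study on pure noise; True if ANY outcome has p < alpha."""
    for _ in range(n_outcomes):
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if p_value_two_groups(a, b) < alpha:
            return True
    return False


def false_positive_rate(n_outcomes, n_studies=2000, n_per_group=20, seed=1):
    """Share of null studies that can report at least one 'finding'."""
    rng = random.Random(seed)
    hits = sum(
        study_finds_something(rng, n_per_group, n_outcomes)
        for _ in range(n_studies)
    )
    return hits / n_studies


if __name__ == "__main__":
    print("1 outcome measured:  ", false_positive_rate(1))
    print("20 outcomes measured:", false_positive_rate(20))
```

Preregistration attacks exactly the loop in `study_finds_something`: if the single outcome is named in advance, the researcher only gets one draw.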

Bem is not Wansink; nor is he Diederik Stapel. He comes from the research tradition where you distill your observations into an explanatory / predictive theory (along with introspection, flaws in existing theories, and lots of beer), then collect data to convince others to accept it. This is “data as a rhetorical device”. This mostly works in physics and chemistry, but Bem forgot that the ability to fool oneself was as strong for him as it is for everyone else, and he lapsed into a state of statistical sin.

“I’m all for rigor,” he continued, “but I prefer other people do it. I see its importance—it’s fun for some people—but I don’t have the patience for it.” It’s been hard for him, he said, to move into a field where the data count for so much. “If you looked at all my past experiments, they were always rhetorical devices. I gathered data to show how my point would be made. I used data as a point of persuasion, and I never really worried about, ‘Will this replicate or will this not?’ ”

Bem quoted in Slate (2017)

So that’s why pre-registering one’s studies came into fashion, recording in advance which hypotheses would be tested and which analyses would be applied – rather than coming up with them in retrospect in the light of whatever Rorschach-like structure might be discerned in the experimental results.

Schwarz showed one response to Kahneman’s “Reform or die!” warning: total denial. In contrast, on Team “Preregister & Replicate” we have Brian Nosek, who started the Center for Open Science. The goal here is not only to encourage good psychology research practices as an ideal, but also to make them the easiest option, with infrastructure for sharing data and experimental details.

Jonathan Schooler reacted to the situation in his own quirky way. Schooler agrees that preregistering methods and analyses is a good thing, and that replications are needed. Like a magic trick, an experiment should work the same way for anyone who performs it, and if it does not, we need to learn which details of the laboratory conditions have to be nailed down, even if it’s “researcher skepticism”.

At the same time, he was loath to concede that psychologists were fundamentally on the wrong track. When a nicely counter-intuitive phenomenon called “Verbal overshadowing” which he’d discovered at the start of his career (i.e. as the stepping-stone to tenure) disappeared, Schooler opted to believe that it had existed but then went away, due to the perversity of reality – in preference to accepting that it had never existed in the first place outside of his unconscious research malpractice. Here’s Schooler on air at NPR in 2011, along with a science-adjacent writer (Jonah Lehrer) who named and popularised the Decline Effect. Neither of them is likely to blunten Occam’s Razor by overuse.

JONATHAN SCHOOLER: Like – I, I say this with – some trepidation but I think we can’t rule out the possibility that there could be some way in which the active observation is actually changing the nature of reality.

[MUSIC/MUSIC UP AND UNDER]

That somehow in the process of observing effects, that we change the nature of those effects.

[MUSIC]

SEVERAL AT ONCE: Ah!

ROBERT KRULWICH: You’re in real trouble.

[GUYS LAUGHING]

JAD ABUMRAD: But it sounds like you’re saying that maybe the truth is running away from you or something?

JONATHAN SCHOOLER: Well, I – I’m not – you know, I’m not gonna say that I am certainly not gonna say that there’s some sort of intentionality to these effects disappearing, more that it’s almost – and again, this is just a speculation – some sort of habituation.

So just as when you put your hand on your leg you feel it and then as you leave it there it becomes less and less noticeable, somehow there may be some kind of habituation that happens in – with respect to these findings.

Decline and Fall

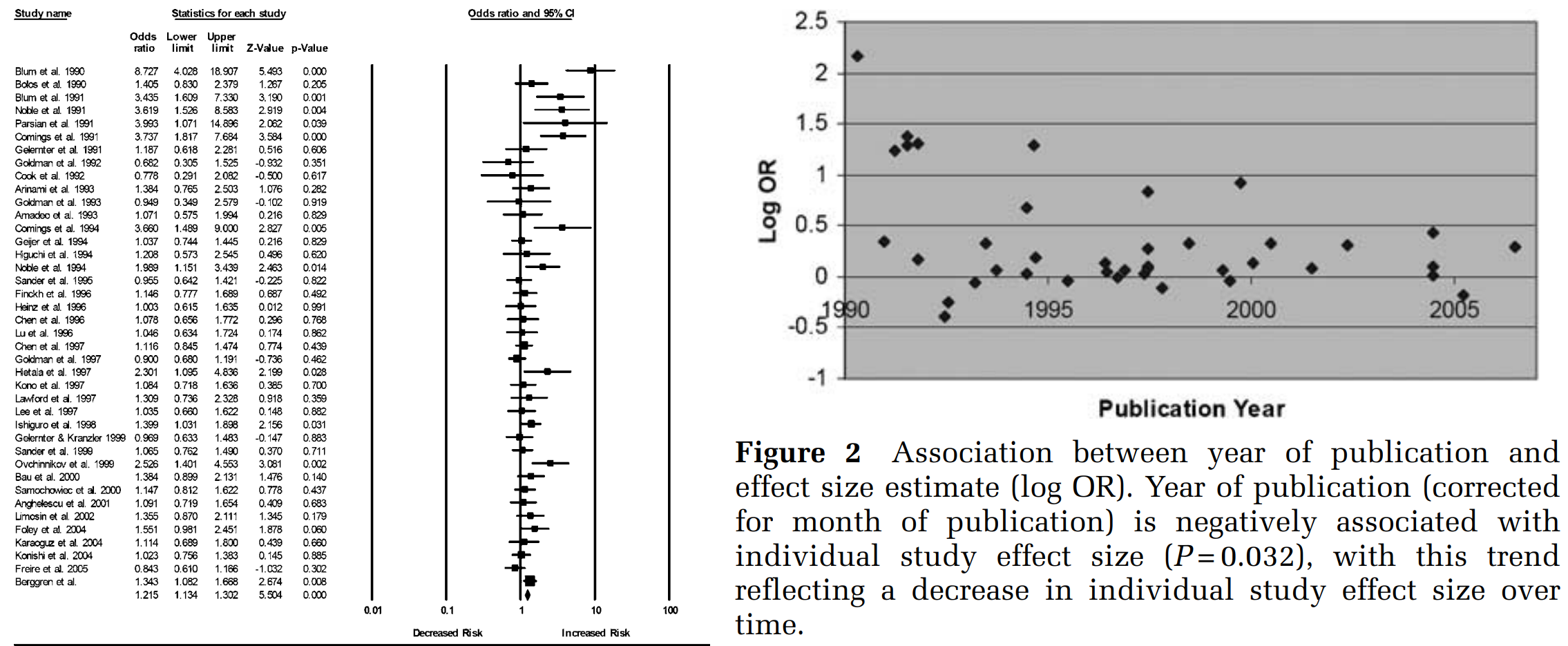

This ‘Decline effect’ is one symptom of the fallen state of psych. Effect sizes dwindle with time after a dramatic discovery and the initial celebratory ballyhoo, often dwindling to the point of disappearance (coincidentally, ‘Ballyhoo’ is also the name of a rather good whiskey). This decline only seems to be a problem in psi research, psychology (but I repeat myself), and new drug testing.

One might think that there’s no mystery in the failure of tabloid-headline reports to replicate if they were made up in the first place, or an accident of happenstance that emerged from the background noise and were cherry-picked by some plonker using bad analytic practices. Such is the parsimonious account. Yes, selective serotonin reuptake inhibitors (SSRIs) like Prozac showed wonderful results in early trials compared to old-fashioned tricyclic antidepressants with patents about to expire, but perhaps that was because pharmaceutical companies had pissed mind-boggling amounts of money down the rat-hole of the fatally flawed completely fucked “serotonin deficiency” theory of depression, and research outcomes that showed SSRIs to be no improvement were simply not initially acceptable.
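The parsimonious account can be put into a few lines of Python. This is a hedged sketch under stated assumptions, not a model of any real literature: a small true effect (d = 0.1, an arbitrary choice), many labs each running one study with 20 subjects per group, estimates drawn directly from their sampling distribution, and a hypothetical publication filter that lets through only significant positive results. Each published finding then gets one unselected replication.

```python
import random
from statistics import fmean


def simulate_decline(true_d=0.1, n_per_group=20, n_labs=5000, seed=2):
    """Mean effect size of published originals vs. their replications.

    Assumes unit variance, so an estimated mean difference has
    standard error sqrt(2 / n_per_group).
    """
    rng = random.Random(seed)
    se = (2 / n_per_group) ** 0.5
    cutoff = 1.96 * se  # smallest estimate that reaches p < .05, two-sided

    published, replications = [], []
    for _ in range(n_labs):
        original = rng.gauss(true_d, se)
        if original > cutoff:  # the filter: only 'hits' get published
            published.append(original)
            # The replication is the same experiment, minus the filter.
            replications.append(rng.gauss(true_d, se))
    return fmean(published), fmean(replications)


if __name__ == "__main__":
    original_mean, replication_mean = simulate_decline()
    print("mean published effect:  ", round(original_mean, 2))
    print("mean replication effect:", round(replication_mean, 2))
```

Under these assumptions the published effects must all exceed the cutoff of about 0.62, while the replications scatter around the true 0.1. The effect ‘declines’ without reality changing its rules at all; only the filter was removed.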

JONATHAN SCHOOLER: And I think this is, for me, the most troubling error to the decline effect, ‘cause you see like second generation anti-psychotic.

ROBERT KRULWICH: Second generation anti-psychotic.

JONATHAN SCHOOLER: These are drugs used to treat people with schizophrenia, bipolar. When they first came out in the late ‘80s, early ‘90s, some studies found that they were about twice as effective than first generation anti-psychotics.

JAD ABUMRAD: Mm!

JONATHAN SCHOOLER: And then what happened is the standard story of the decline effect. Cue the sound effect.

[SOUND EFFECT]

Which is clinical trial after clinical trial, the effect size just slowly started to fall apart. You see a similar decline with things like Prozac, and – anti-depressants –

ROBERT KRULWICH: Wow!

JONATHAN SCHOOLER: The effect of the drugs have gotten weaker, but the placebo effect has also gotten stronger. I was talking to one guy at a drug company who [LAUGHS] – he was kind of interesting. He blamed that on drug advertising. He said that they started to see their placebo effect go up in the late ‘90s when these drug companies started advertising.

What does call out for explanation is the initial ‘doorstep’ of false replications from wannabes and imitators who will settle for being second discoverer if they can’t be first, postponing the inevitable decline. Perhaps a better name for the phenomenon would be “doorstep effect”, but no-one ever listens to Uncle Smut.

Other academics prefer more exotic explanations, though. Notably the Cosmic Censorship Hypothesis, that the universe does not like strong correlations – perhaps because there are Things that Man was Not Meant to Know. So the discovery of an interesting effect causes the universe to change the rules of reality until it goes away, like an oyster encysting a sand grain in a pearl. At least in psychology and drug research… cause-and-effect relationships in physics or chemistry or biology don’t seem to arouse the universe’s defensive immune mechanisms.

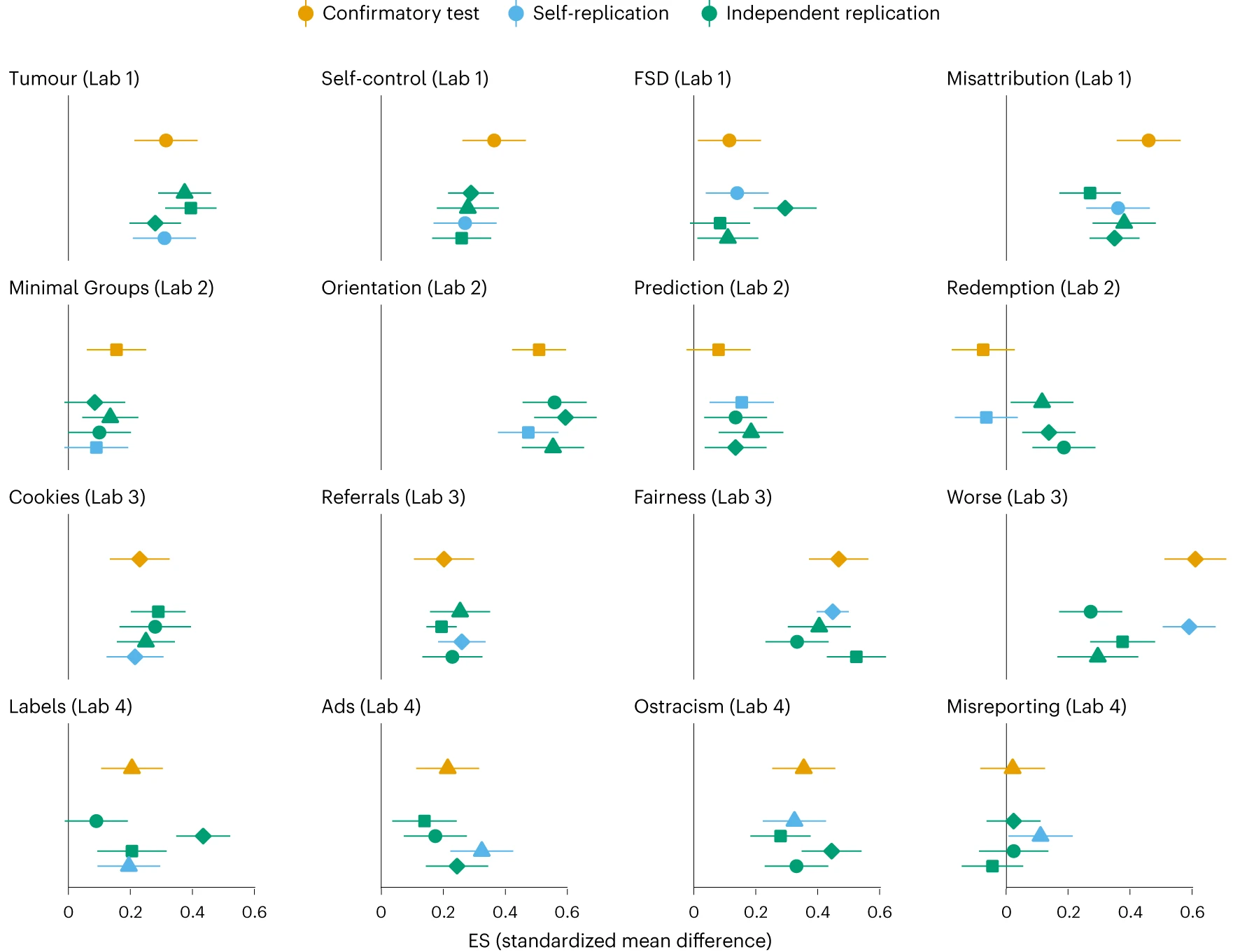

This is the point where Nosek herded the cats to conduct a multi-centre collaborative study and test the reproducibility of 16 high-profile reports in psychology (and beyond), to be a flagship for the Open-Science agenda. “Multi-centre” because experimental conditions should be shared, and made publicly available, and because one test of a real effect is whether it shows up no matter who looks for it.

The thing is, Nosek wanted Schooler on board with this project, as a prominent advocate of reproducibility. But Schooler shares Bem’s psi-power curiosity: at the same time as Bem’s precognition trials, Schooler was running his own, with initially positive results that could not be repeated (he was also a reviewer on Bem’s 2011 paper). As seen, he is firmly committed to the Cosmic Censorship hypothesis, and holds it responsible for the decline and eventual disappearance of psi-research programs like that of J. B. Rhine, the founder of parapsychology. This is why the preregistration for the whole project presented it as a study of the time-course of this expected decline in correlations and effect sizes.

“Both Schooler and Bem now propose that replications might be more likely to succeed when they’re performed by believers rather than by skeptics. […] “If it’s possible that consciousness influences reality and is sensitive to reality in ways that we don’t currently understand, then this might be part of the scientific process itself,” says Schooler. “Parapsychological factors may play out in the science of doing this research.””

Slate (2017)

Those goalposts won’t shift themselves

Wouldn’t you know it: to the credit of the original researchers, their original reports were vindicated, and the effect sizes didn’t decline with time. To put it another way, the Decline Effect succumbed to the Decline Effect. Nosek et al. felt obliged to retrospectively revise the goals of the replication project, making it all triumphant in the light of the actual findings. And science journalists were well-pleased; a hero was here to rescue psychology! If everyone just observed a few simple rules then the whole endeavour could continue as before!

Informed onlookers could see the violations of the guidelines that the paper was intended to encourage. They went Cassandra-mode and were all “This ends badly”. However, Nosek et al. closed their ears.

* * * * * * * * *SPOILER ALERT * * * * * * *

It all ended badly.

Never mind, here’s Hawkwind!

“wonderful results in early trials compared to old-fashioned tricyclic antidepressants with patents about to expire”

I (really!) first read the ante-ante-penultimate word there as “patients”.

Yes, ‘patients’ works too.

TL:DR psychology is not science!

A bit harsh. There have certainly been psychologists who were scientists.

The problem is that the right constructs and concepts don’t exist yet (and might never exist). Psychologists are like chemists, trying to do chemistry back before Dalton came up with “atoms” and “elements”, and there was only phlogiston and caloric. It was easier to do alchemy.
