Biomedical scientists insist they absolutely need to perform cruel experiments on animals, especially on millions of mice, because this is how we will find a cure for cancer and other diseases.

But what if those experiments are sloppy at best and fake at worst? How can we trust scientists to draw valid scientific conclusions if they are unable even to arrange a peer-reviewed figure without copy-pasting a mouse or two?

Sholto David follows up on his earlier investigation of the Dana-Farber Cancer Institute (DFCI), and finds dodgy mouse images throughout the USA and beyond.

Dana-Farberications at Harvard University

“Imagine what mistakes might be found in the raw data if anyone was allowed to look!” – Sholto David

Mice don’t count

By Sholto David

I was interested to see that the most popular image in the recent Dana-Farber media coverage was Irene Ghobrial’s formation of five mice that made a (now famous) “knight move” across Figure 2C in her 2014 paper in Blood. This image was republished by the New York Times, Nature, and others.

Yu Zhang, Michele Moschetta, Daisy Huynh, Yu-Tzu Tai, Yong Zhang, Wenjing Zhang, Yuji Mishima, Jennifer E. Ring, Winnie F. Tam, Qunli Xu, Patricia Maiso, Michaela Reagan, Ilyas Sahin, Antonio Sacco, Salomon Manier, Yosra Aljawai, Siobhan Glavey, Nikhil C. Munshi, Kenneth C. Anderson, Jonathan Pachter, Aldo M. Roccaro, Irene M. Ghobrial Pyk2 promotes tumor progression in multiple myeloma Blood (2014) doi: 10.1182/blood-2014-03-563981

I think I can understand why this captured people’s attention. Mice are probably a little easier to grasp than western blots (conceptually speaking, of course; they are probably quite difficult to grasp when running loose). Mice are also living, breathing beings, so the consequences of muddling these experiments are more immediately apparent. And of course, experiments on mice are understood to be a key stepping stone towards experiments on humans…

But these mice have been rapidly eclipsed; a far greater threat to scientific integrity has risen! I am talking about the AI-generated rat published by Frontiers…

Whilst I also thought this was funny, there is something uncomfortable about how much attention this hallucination gathered, and what the furious reaction seems to imply about the expectations people had of peer review at Frontiers. Michael Eisen (who hits consistent winners on Twitter) posted something succinct that reflected some of my thoughts.

So this blog post will be a discussion of the rodents in research that I think are truly important. To keep some sense of order, I will restrict myself to problems with bioluminescent images of mice that I have been posting about in the last few months, since these turned out to be so popular already… And where better to start than where I left off previously, at Dana-Farber of course!

Torturing Small Animals

Animal abuse and bad science go hand in hand. Meet professors Ute Moll, Jordi Muntané, Sam W Lee and others.

Harvard Medical School Affiliates

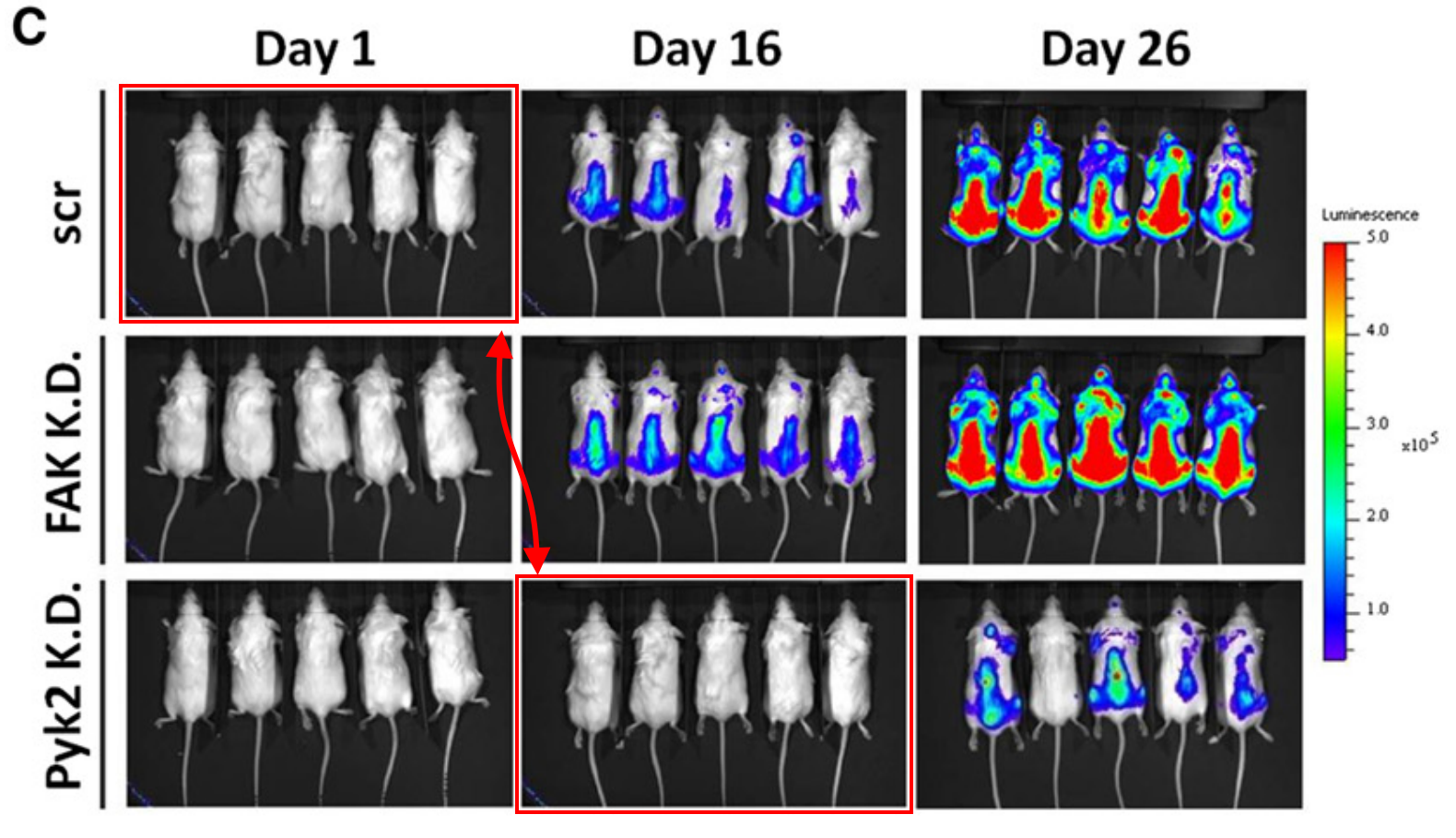

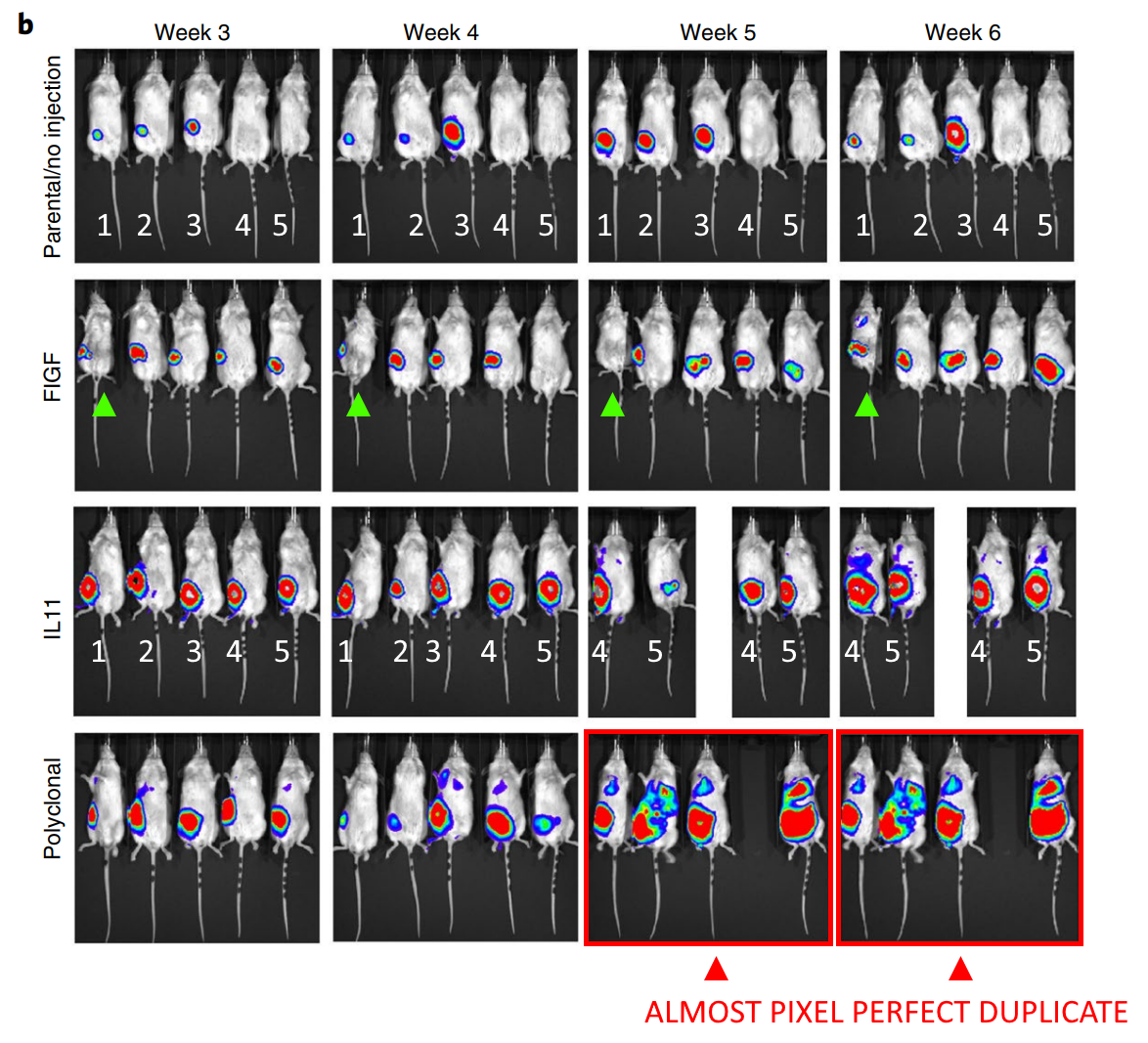

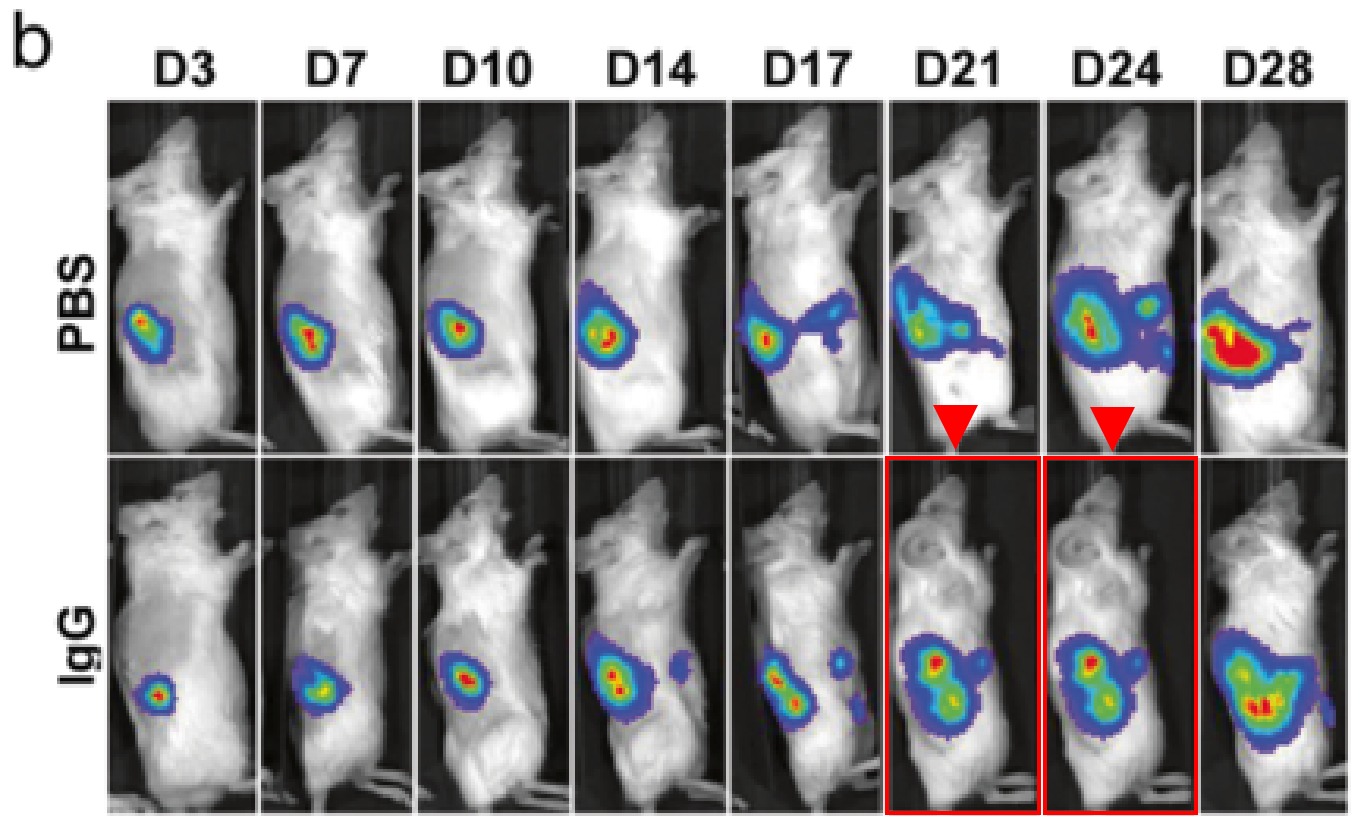

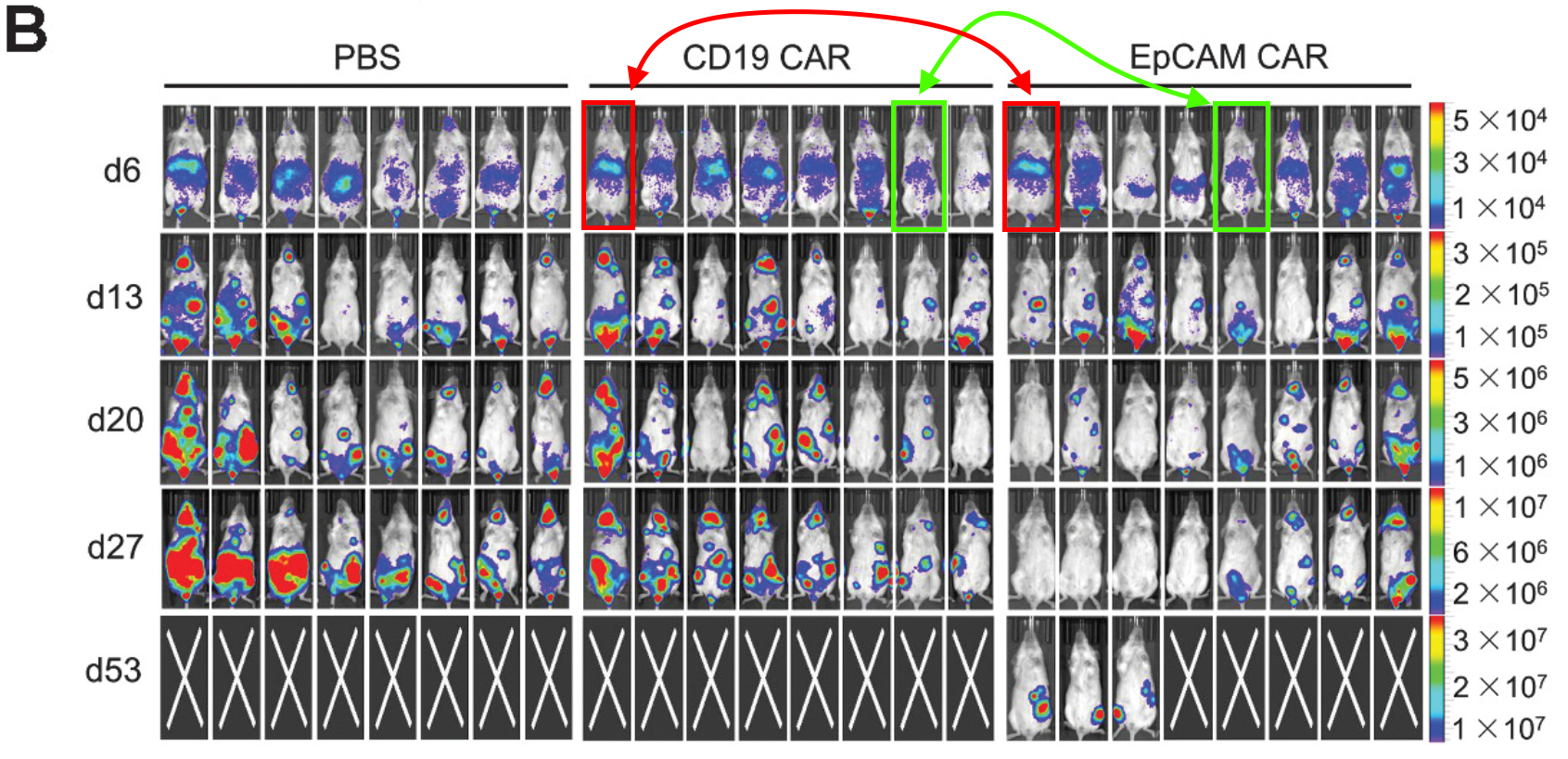

Kornelia Polyak is a Professor of Medicine at Dana-Farber Cancer Institute, and a co-leader of the Cancer Cell Biology Program. Pay attention to the mice in red rectangles at the bottom right of the figure below. These are the same mice, imaged at the same time, not a week apart as the figure legend claims.

Michalina Janiszewska, Doris P. Tabassum, Zafira Castaño, Simona Cristea, Kimiyo N. Yamamoto, Natalie L. Kingston, Katherine C. Murphy, Shaokun Shu, Nicholas W. Harper, Carlos Gil Del Alcazar, Maša Alečković, Muhammad B. Ekram, Ofir Cohen, Minsuk Kwak, Yuanbo Qin, Tyler Laszewski, Adrienne Luoma, Andriy Marusyk, Kai W. Wucherpfennig, Nikhil Wagle, Rong Fan, Franziska Michor, Sandra S. McAllister, Kornelia Polyak Subclonal cooperation drives metastasis by modulating local and systemic immune microenvironments Nature Cell Biology (2019) doi: 10.1038/s41556-019-0346-x

It’s useful to look at the bioluminescence signal, as well as the positions of the tails and paws. I asked an additional question on PubPeer about the way the mice are numbered (related to the stripes on the tails); none of the authors responded. When I emailed the journal and authors, I received an indignant response from Kornelia explaining that these images of mice are “only for illustration purposes”… A correction request has been submitted all the same.

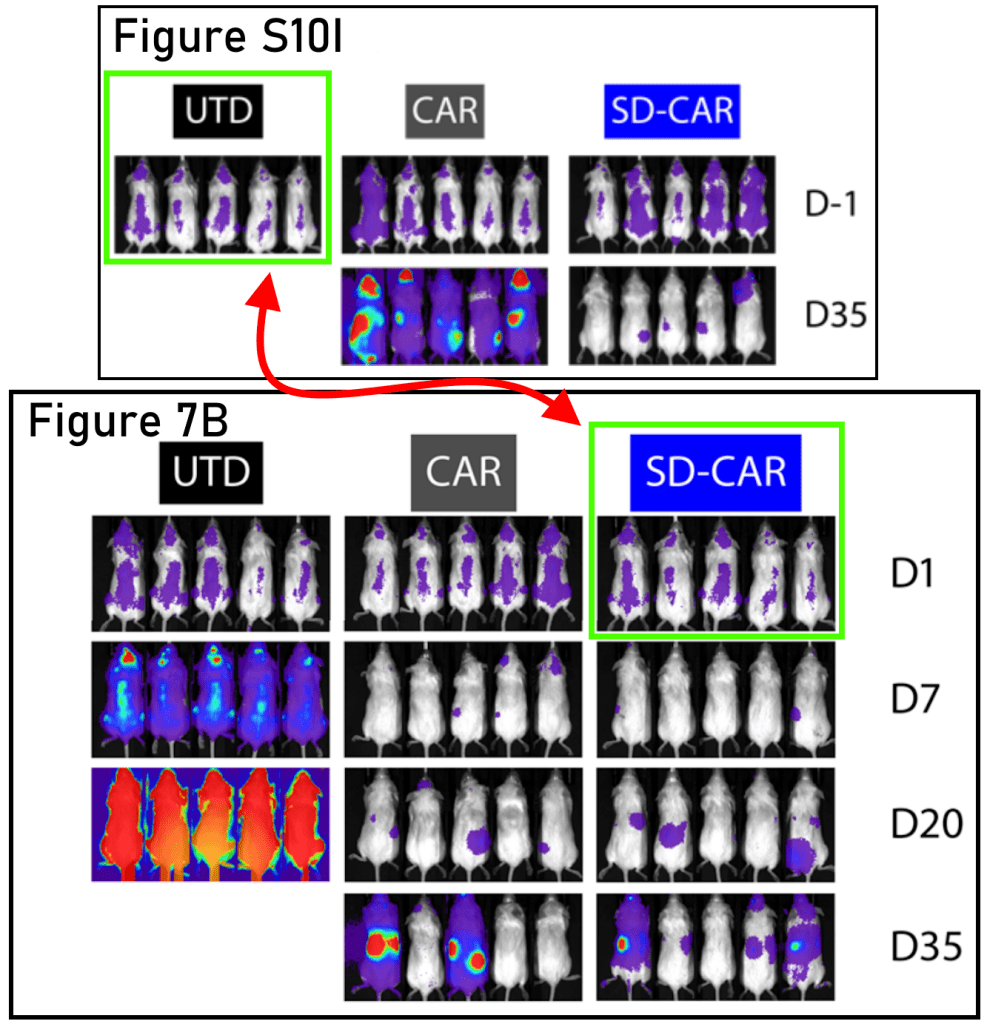

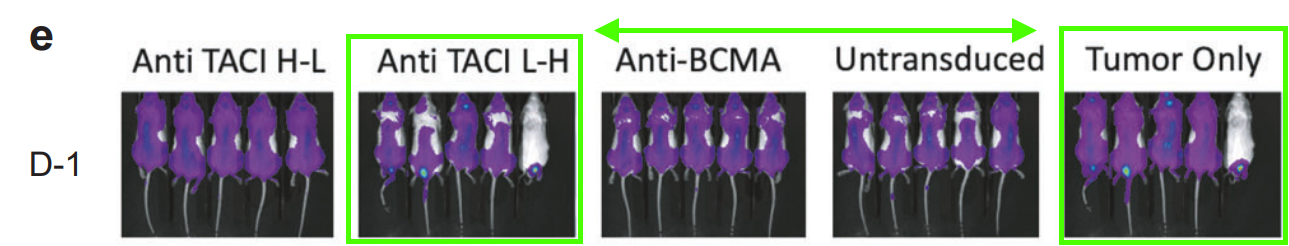

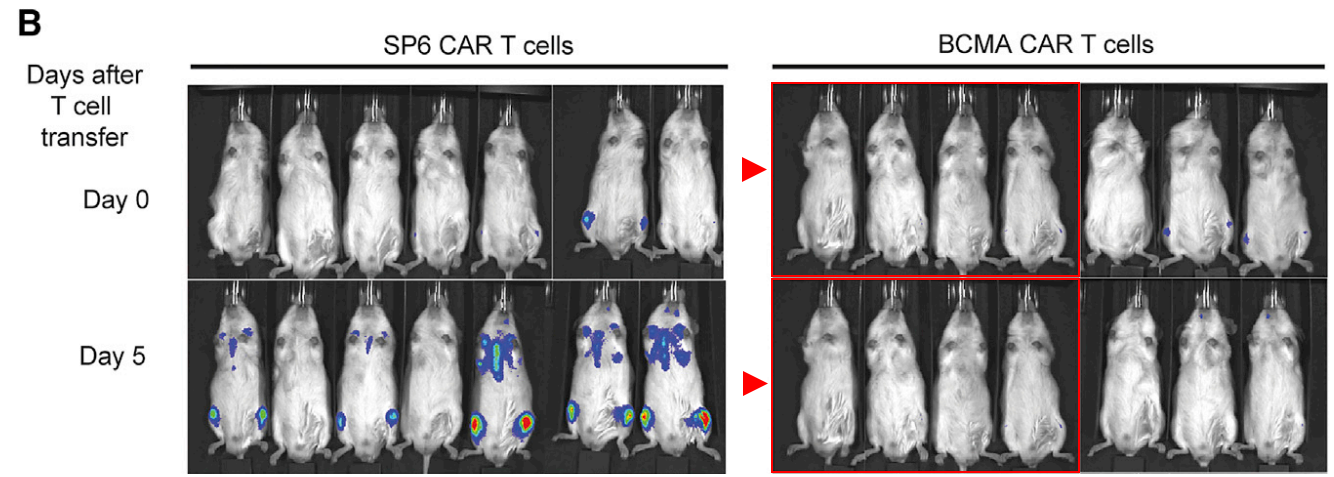

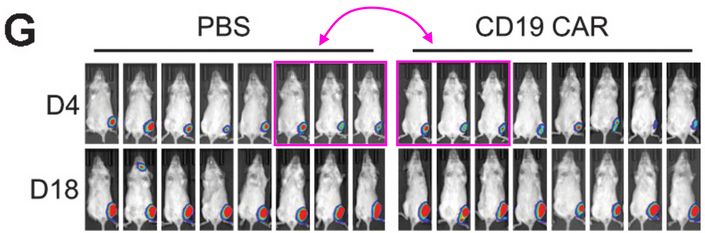

Also at Harvard Medical School, but this time at Massachusetts General Hospital, allow me to introduce the delightfully named Associate Professor Marcela V. Maus! In this case, look at the magenta rectangles in Figure 3b, and the green shapes in Figure 3e. Figure 3e is a little trickier to see, because the bioluminescence signal is not the same; it is better to pay attention to the tails.

Rebecca C. Larson, Michael C. Kann, Charlotte Graham, Christopher W. Mount, Ana P. Castano, Won-Ho Lee, Amanda A. Bouffard, Hana N. Takei, Antonio J. Almazan, Irene Scarfó, Trisha R. Berger, Andrea Schmidts, Matthew J. Frigault, Kathleen M. E. Gallagher, Marcela V. Maus Anti-TACI single and dual-targeting CAR T cells overcome BCMA antigen loss in multiple myeloma Nature Communications (2023) doi: 10.1038/s41467-023-43416-7

Professor Maus replied to me by email after I sent Figure 3b to the journal. She seems well intentioned, although good intentions aren’t really a substitute for competence. I was able to find three more papers with similar errors, as well as further contradictions between the data shown in charts and the bioluminescence images. There are now 8 PubPeer threads for Professor Maus, with possibly more to find, and there is even a fish on PubPeer (Jan et al 2022), which unfortunately falls outside the scope of this rodent blog. As far as I am aware, Professor Maus intends to correct all these papers.

Maria Ormhøj, Irene Scarfò, Maria L Cabral, Stefanie R Bailey, Selena J Lorrey, Amanda A Bouffard, Ana P Castano, Rebecca C Larson, Lauren S Riley, Andrea Schmidts, Bryan D Choi, Rikke S Andersen, Oriane Cédile, Charlotte G Nyvold, Jacob H Christensen, Morten F Gjerstorff, Henrik J Ditzel, David M Weinstock, Torben Barington, Matthew J Frigault, Marcela V Maus Chimeric Antigen Receptor T Cells Targeting CD79b Show Efficacy in Lymphoma with or without Cotargeting CD19 Clinical Cancer Research (2019) doi: 10.1158/1078-0432.ccr-19-1337

The next paper by Professor Maus has already been corrected twice.

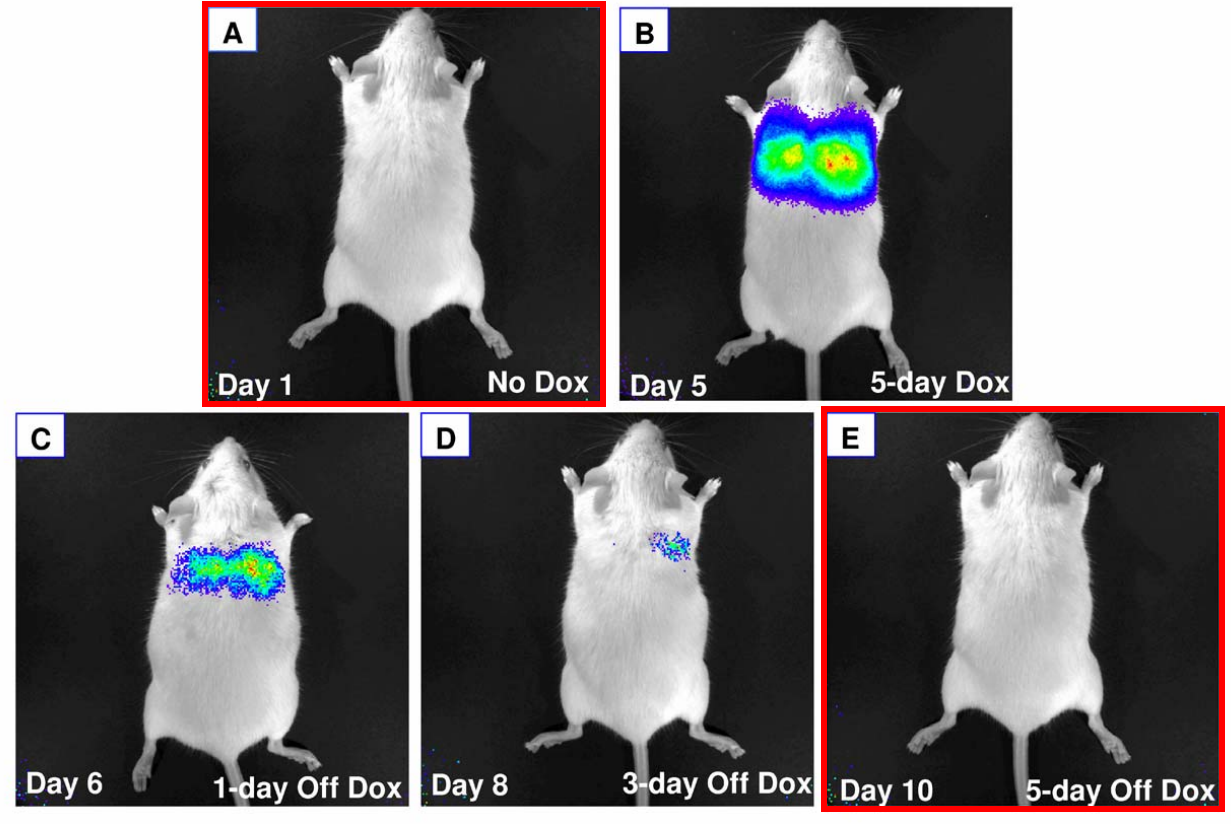

Max Jan, Irene Scarfò, Rebecca C. Larson, Amanda Walker, Andrea Schmidts, Andrew A. Guirguis, Jessica A. Gasser, Mikołaj Słabicki, Amanda A. Bouffard, Ana P. Castano, Michael C. Kann, Maria L. Cabral, Alexander Tepper, Daniel E. Grinshpun, Adam S. Sperling, Taeyoon Kyung, Quinlan L. Sievers, Michael E. Birnbaum, Marcela V. Maus, Benjamin L. Ebert Reversible ON- and OFF-switch chimeric antigen receptors controlled by lenalidomide Science Translational Medicine (2021) doi: 10.1126/scitranslmed.abb6295

In April 2022, the first erratum stated:

“Fig. 5G contained two errors. A bar graph pertaining to a different experiment (from Fig. 4G) was inadvertently included instead of the intended bar graph. The drug concentrations within the flow cytometry histogram were inadvertently mislabeled 100 nM, and now have been corrected to match the bar graph drug concentration label of 1000 nM. Figure 5 has now been corrected. The text and overall findings of the study are unchanged.”

In July 2023, a second erratum reassured readers:

“Figs. 4A and 5A contained a error. The sequence fragment from ZFP91 was inadvertently misnumbered. The Fig. 4 legend and Fig. 5 and its legend and have now been corrected. The online PDF and HTML (full text) have been updated. These corrections do not affect the interpretation of the data or the conclusions of the paper.”

There is now a need for a third erratum, to make sure that the interpretation of the data or the conclusions of the paper remain forever unaffected:

This paper was also corrected in 2018, but only for a formality: “the supplemental figures were omitted”. Meanwhile, a mouse features twice in a main figure:

Irene Scarfò, Maria Ormhøj, Matthew J Frigault, Ana P Castano, Selena Lorrey, Amanda A Bouffard, Alexandria Van Scoyk, Scott J Rodig, Alexandra J Shay, Jon C Aster, Frederic I Preffer, David M Weinstock, Marcela V Maus Anti-CD37 chimeric antigen receptor T cells are active against B- and T-cell lymphomas Blood (2018) doi: 10.1182/blood-2018-04-842708

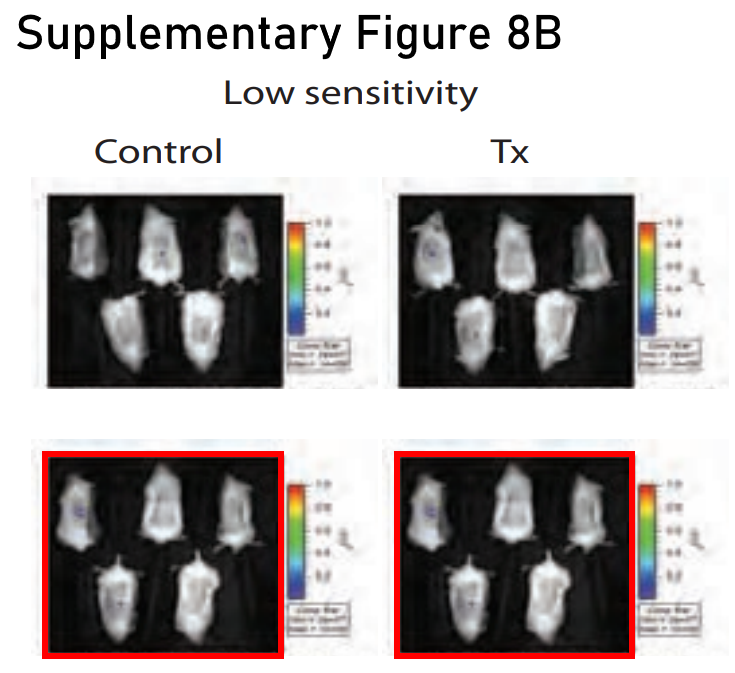

I wasn’t going to write about Harvard Medical School without bringing up Kenneth C. Anderson, of course. In this figure of atrocious quality (published by Nature Medicine, current APC $12,290), you have to pay attention to the noise as well as the signal. I spotted this one after the initial DFCI post went live, so it has not been previously blogged. Don’t worry if you’re struggling to see it; you can rest assured that these are duplicates, because the paper was corrected on 14 February 2024: “one of the bioluminescence images was erroneously duplicated. Treatment [Tx], day 19, “Low sensitivity” was inadvertently pasted into the figure twice, and thus was also shown as Control, day 19, “Low sensitivity””.

Douglas W McMillin, Jake Delmore, Ellen Weisberg, Joseph M Negri, D Corey Geer, Steffen Klippel, Nicholas Mitsiades, Robert L Schlossman, Nikhil C Munshi, Andrew L Kung, James D Griffin, Paul G Richardson, Kenneth C Anderson, Constantine S Mitsiades Tumor cell-specific bioluminescence platform to identify stroma-induced changes to anticancer drug activity Nature Medicine (2010) doi: 10.1038/nm.2112

Another Harvard Medical School finding, this time involving Sabina Signoretti. The mice in the red rectangles are labelled as having been imaged three days apart, but are near pixel-perfect duplicates; perhaps they were imaged three seconds apart? Really, Sabina is worthy of an entire blog post of her own, with an impressive and growing PubPeer history. Instead, she only got a mention in Friday Shorts.

De-Kuan Chang, Raymond J Moniz, Zhongyao Xu, Jiusong Sun, Sabina Signoretti, Quan Zhu, Wayne A Marasco Human anti-CAIX antibodies mediate immune cell inhibition of renal cell carcinoma in vitro and in a humanized mouse model in vivo Molecular Cancer (2015) doi: 10.1186/s12943-015-0384-3

Recently, The New York Times covered allegations related to my previous blog about Sam S. Yoon at Memorial Sloan Kettering Cancer Center (MSKCC). Sam’s papers included plenty of cruel experiments on mice, but no bioluminescence errors that I could find!

Memorial Sloan Kettering Paper Mill

“Why do successful and apparently intelligent surgeons feel the need to play pretend at biology research? Has Sam S. Yoon ever performed an invasion or migration assay? […] if this is how he “supervises” his research does anyone trust his supervision of surgery?” – Sholto David

But here is an institutional crossover! Research conducted at MSKCC by Eric L. Smith, who subsequently moved to DFCI; very cosy! The red rectangles are pixel-perfect duplicates, and the green, blue, and yellow rectangles are more similar than expected.

Carlos Fernández De Larrea, Mette Staehr, Andrea V. Lopez, Khong Y. Ng, Yunxin Chen, William D. Godfrey, Terence J. Purdon, Vladimir Ponomarev, Hans-Guido Wendel, Renier J. Brentjens, Eric L. Smith Defining an Optimal Dual-Targeted CAR T-cell Therapy Approach Simultaneously Targeting BCMA and GPRC5D to Prevent BCMA Escape-Driven Relapse in Multiple Myeloma Blood Cancer Discovery (2020) doi: 10.1158/2643-3230.bcd-20-0020

Scripps Campus in Florida

And now to the University of Florida’s Scripps Biomedical Research Institute with two papers involving their Associate Dean of Graduate Studies, Christoph Rader. The first one appeared in Oncogene.

Haiyong Peng, Thomas Nerreter, Katrin Mestermann, Jakob Wachter, Jing Chang, Michael Hudecek, Christoph Rader ROR1-targeting switchable CAR-T cells for cancer therapy Oncogene (2022) doi: 10.1038/s41388-022-02416-5

Christoph has accepted the above duplication on PubPeer, and a correction has recently materialised. Impressive, since co-editor-in-chief Justin Stebbing is pretty busy these days.

Oncogene EiC Justin Stebbing, a hypocrite of research integrity?

‘The results have been replicated by ourselves or others, so the image manipulation is irrelevant.’ – Justin Stebbing, double bluffing

No one has claimed responsibility for the mistake in the following paper, although one would assume Christoph is aware. To be fair, this was published in the Molecular Therapy “family”, so I doubt there is any point in emailing; they are also rather busy these days.

Vijay G. Bhoj, Lucy Li, Kalpana Parvathaneni, Zheng Zhang, Stephen Kacir, Dimitrios Arhontoulis, Kenneth Zhou, Bevin McGettigan-Croce, Selene Nunez-Cruz, Gayathri Gulendran, Alina C. Boesteanu, Laura Johnson, Michael D. Feldman, Enrico Radaelli, Keith Mansfield, MacLean Nasrallah, Rebecca S. Goydel, Haiyong Peng, Christoph Rader, Michael C. Milone, Don L. Siegel Adoptive T cell immunotherapy for medullary thyroid carcinoma targeting GDNF family receptor alpha 4 Molecular Therapy Oncolytics (2021) doi: 10.1016/j.omto.2021.01.012 issn: 2372-7705

Industry efforts with Fate Therapeutics

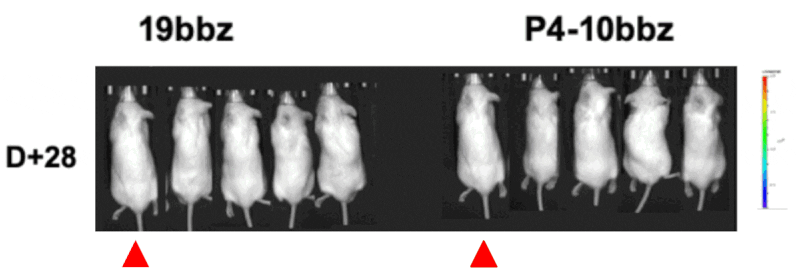

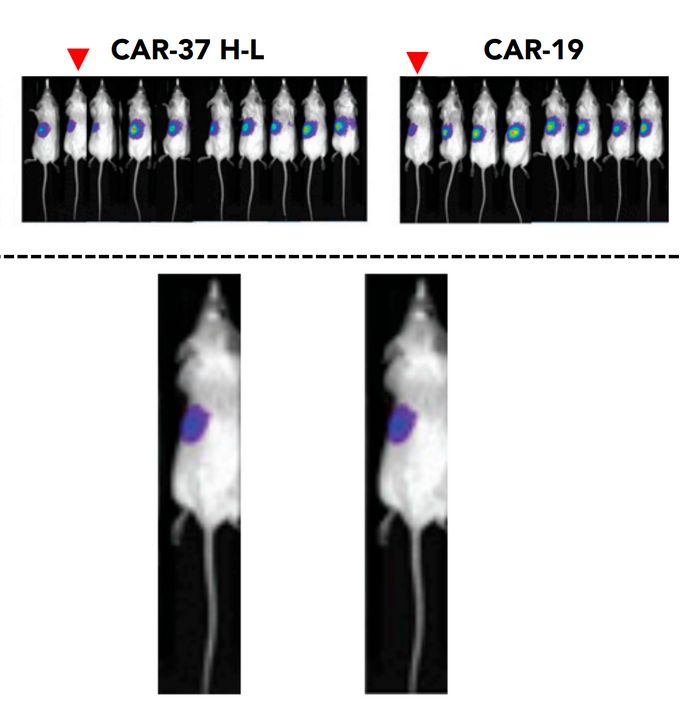

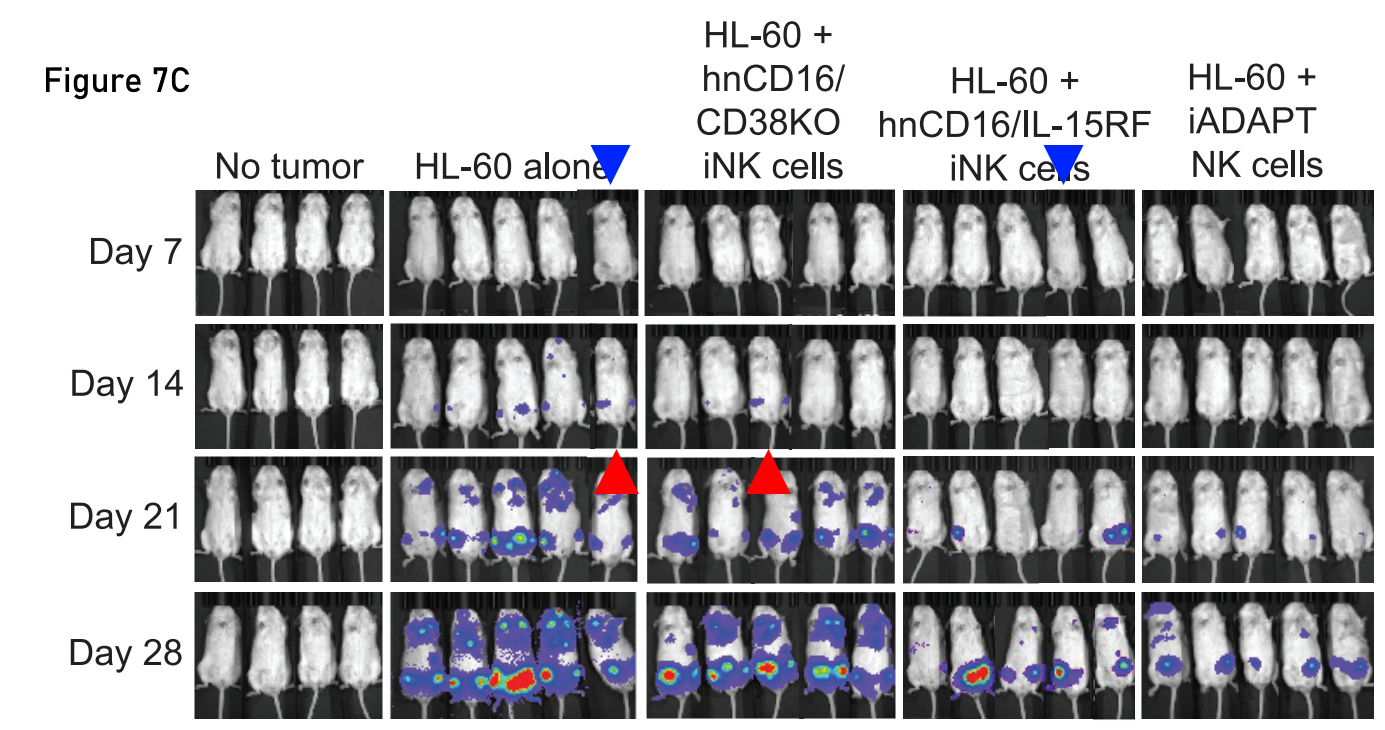

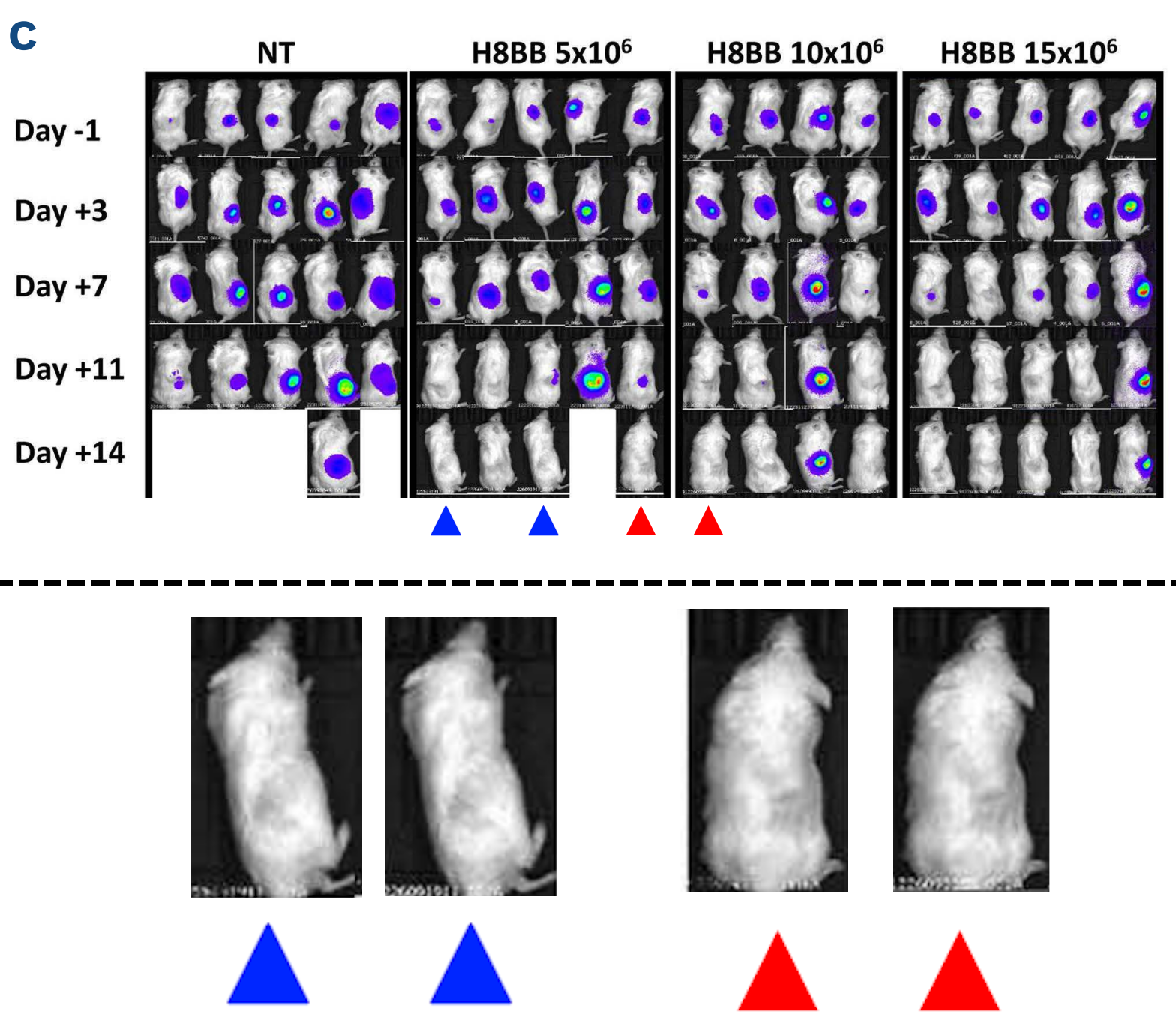

NK cells are the next CAR-T, and the University of Minnesota and Fate Therapeutics are joining the gold rush. The blue and red triangles in the following figure point to matching mice; the last author, Frank Cichocki, has accepted the duplications and promised to fix them.

Karrune V. Woan, Hansol Kim, Ryan Bjordahl, Zachary B. Davis, Svetlana Gaidarova, John Goulding, Brian Hancock, Sajid Mahmood, Ramzey Abujarour, Hongbo Wang, Katie Tuininga, Bin Zhang, Cheng-Ying Wu, Behiye Kodal, Melissa Khaw, Laura Bendzick, Paul Rogers, Moyar Qing Ge, Greg Bonello, Miguel Meza, Martin Felices, Janel Huffman, Thomas Dailey, Tom T. Lee, Bruce Walcheck, Karl J. Malmberg, Bruce R. Blazar, Yenan T. Bryceson, Bahram Valamehr, Jeffrey S. Miller, Frank Cichocki Harnessing features of adaptive NK cells to generate iPSC-derived NK cells for enhanced immunotherapy Cell Stem Cell (2021) doi: 10.1016/j.stem.2021.08.013

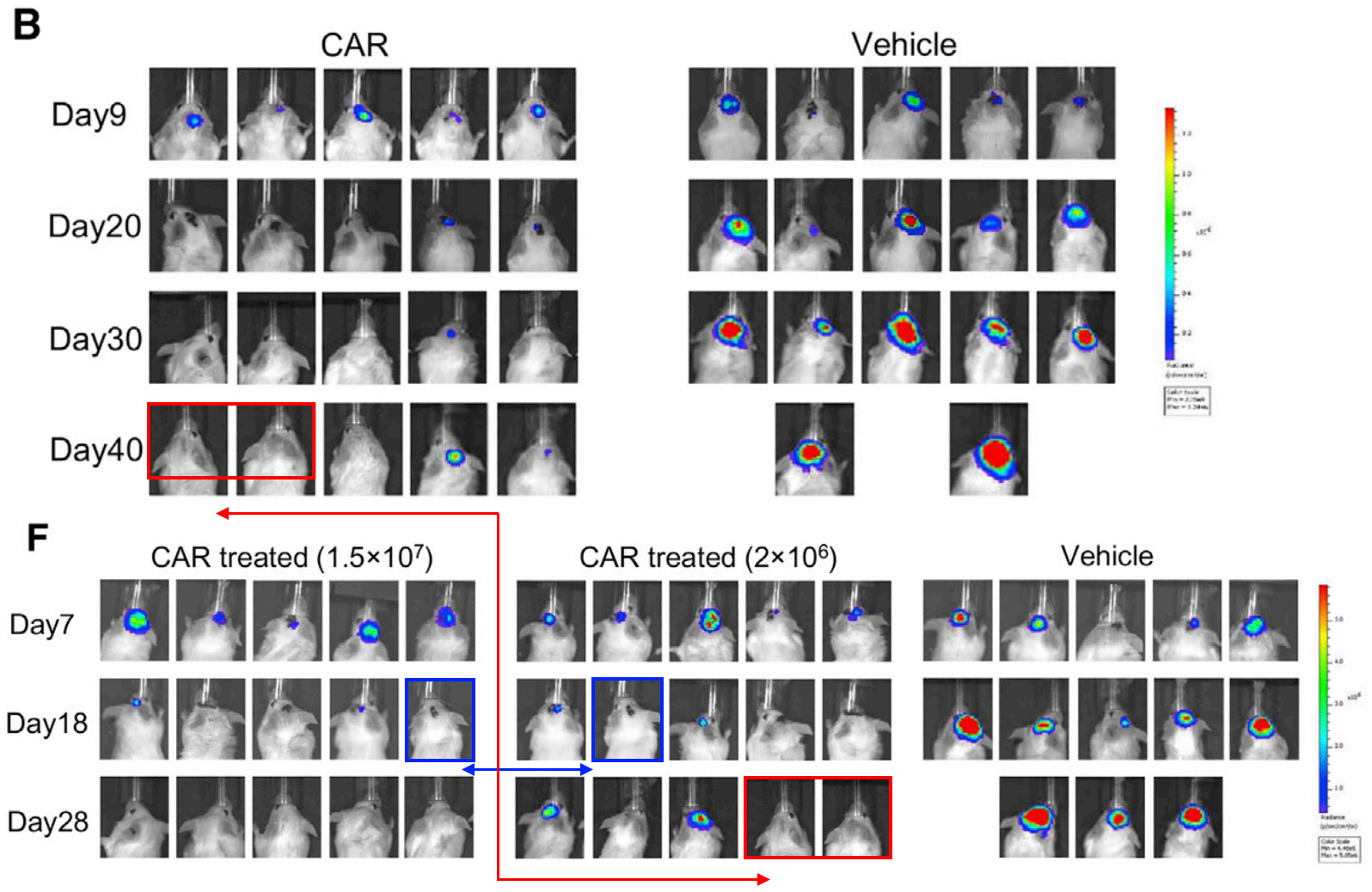

Associate Professor Cichocki is the first author in the following paper; the duplicated sections in the red rectangles were identified by a colleague and posted to PubPeer by me. The blue rectangles point to images which show different levels of background noise; I think this indicates that the detector was not used at a consistent sensitivity, thus calling into question the validity of the entire figure, although Frank assured me it’s fine. Believe who you like!

Frank Cichocki, Jodie P. Goodridge, Ryan Bjordahl, Sajid Mahmood, Zachary B. Davis, Svetlana Gaidarova, Ramzey Abujarour, Brian Groff, Alec Witty, Hongbo Wang, Katie Tuininga, Behiye Kodal, Martin Felices, Greg Bonello, Janel Huffman, Thomas Dailey, Tom T. Lee, Bruce Walcheck, Bahram Valamehr, Jeffrey S. Miller Dual antigen-targeted off-the-shelf NK cells show durable response and prevent antigen escape in lymphoma and leukemia Blood (2022) doi: 10.1182/blood.2021015184

Frank said he’s writing to Blood to correct the duplications. You’ll be more likely to get a correction from a stone than from Blood, as the idiom goes.

Remember Charles-Henri Lecellier?

Charles-Henri Lecellier is about to get promoted to CNRS research director 2nd class. Time to dig up old stories and let the ghosts rise to wash their dirty laundry.

Assorted US Universities

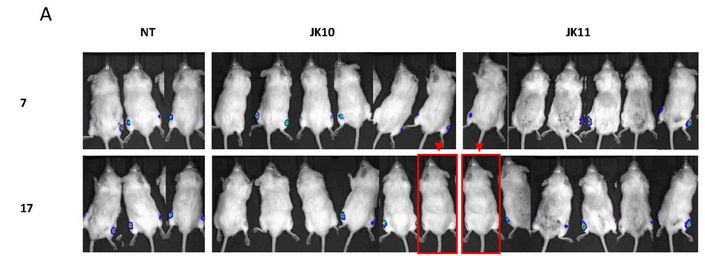

Now to researchers at the University of North Carolina at Chapel Hill. Another set of pixel-perfect duplicates to conveniently prolong the duration of an experiment without requiring data collection, or perhaps a tragic mistake? The authors, led by UNC Immunotherapy Program Director and Highly Cited Researcher (Top 1%, 2020-2023) Gianpietro Dotti, have yet to tell us which.

Sarah Ahn, Jingjing Li, Chuang Sun, Keliang Gao, Koichi Hirabayashi, Hongxia Li, Barbara Savoldo, Rihe Liu, Gianpietro Dotti Cancer Immunotherapy with T Cells Carrying Bispecific Receptors That Mimic Antibodies Cancer Immunology Research (2019) doi: 10.1158/2326-6066.cir-18-0636

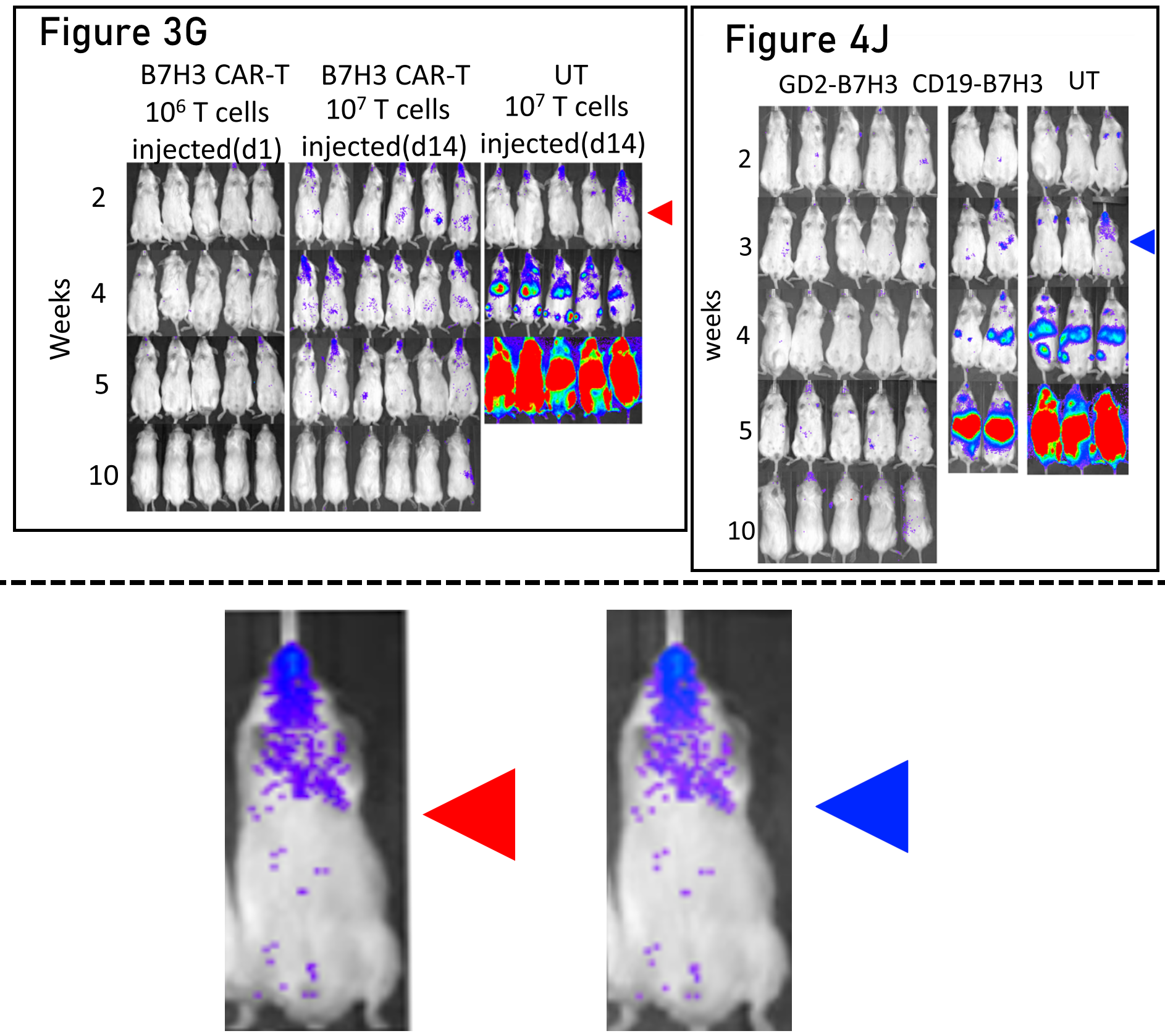

I could credibly be accused of having an anti-east-coast bias, so here’s a finding from Los Angeles-based researchers at the unfortunately named Keck School of Medicine of USC. The figure legends in the original paper should be read carefully here, as the mouse in question is shared between control groups. However, I can’t find a way to reconcile the timings of the experiments. Splicing individual mice together like this to make figures is inadvisable:

Babak Moghimi, Sakunthala Muthugounder, Samy Jambon, Rachelle Tibbetts, Long Hung, Hamid Bassiri, Michael D. Hogarty, David M. Barrett, Hiroyuki Shimada, Shahab Asgharzadeh Preclinical assessment of the efficacy and specificity of GD2-B7H3 SynNotch CAR-T in metastatic neuroblastoma Nature Communications (2021) doi: 10.1038/s41467-020-20785-x

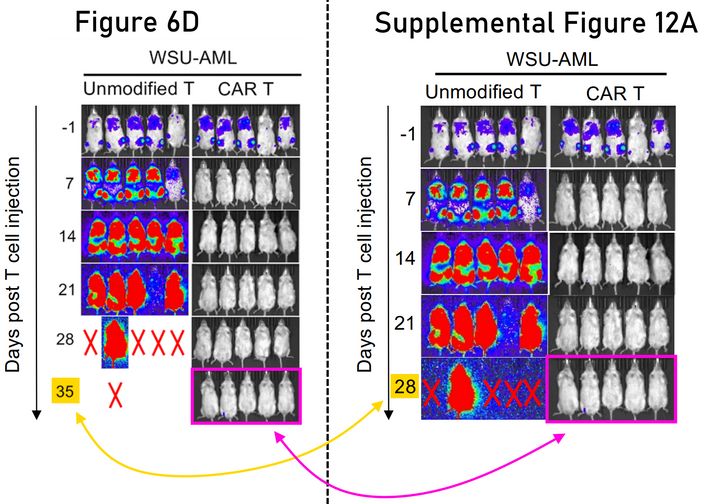

Here’s another west coast error, from researchers at Fred Hutch. Some of the same data is legitimately repeated in the supplemental figure, but the highlighted mice can’t show both day 28 and day 35.

Quy Le, Brandon Hadland, Jenny L. Smith, Amanda Leonti, Benjamin J. Huang, Rhonda Ries, Tiffany A. Hylkema, Sommer Castro, Thao T. Tang, Cyd N. McKay, LaKeisha Perkins, Laura Pardo, Jay Sarthy, Amy K. Beckman, Robin Williams, Rhonda Idemmili, Scott Furlan, Takashi Ishida, Lindsey Call, Shivani Srivastava, Anisha M. Loeb, Filippo Milano, Suzan Imren, Shelli M. Morris, Fiona Pakiam, Jim M. Olson, Michael R. Loken, Lisa Brodersen, Stanley R. Riddell, Katherine Tarlock, Irwin D. Bernstein, Keith R. Loeb, Soheil Meshinchi CBFA2T3-GLIS2 model of pediatric acute megakaryoblastic leukemia identifies FOLR1 as a CAR T cell target Journal of Clinical Investigation (2022) doi: 10.1172/jci157101

A single representative mouse was duplicated by researchers at City of Hope (pink triangles, following image). Some might say this is petty to point out, but if they felt it was important enough to include, presumably it is also important enough to be correct?

Eric Hee Jun Lee, John P. Murad, Lea Christian, Jackson Gibson, Yukiko Yamaguchi, Cody Cullen, Diana Gumber, Anthony K. Park, Cari Young, Isabel Monroy, Jason Yang, Lawrence A. Stern, Lauren N. Adkins, Gaurav Dhapola, Brenna Gittins, Wen-Chung Chang, Catalina Martinez, Yanghee Woo, Mihaela Cristea, Lorna Rodriguez-Rodriguez, Jun Ishihara, John K. Lee, Stephen J. Forman, Leo D. Wang, Saul J. Priceman Antigen-dependent IL-12 signaling in CAR T cells promotes regional to systemic disease targeting Nature Communications (2023) doi: 10.1038/s41467-023-40115-1

Saul J. Priceman responded on PubPeer to say he would remedy the above issue; will he also correct the following much older (and much worse) figure?

Jeremy B. Burton, Saul J. Priceman, James L. Sung, Ebba Brakenhielm, Dong Sung An, Bronislaw Pytowski, Kari Alitalo, Lily Wu Suppression of Prostate Cancer Nodal and Systemic Metastasis by Blockade of the Lymphangiogenic Axis Cancer Research (2008) doi: 10.1158/0008-5472.can-08-1488

Diversity and Inclusion Initiative

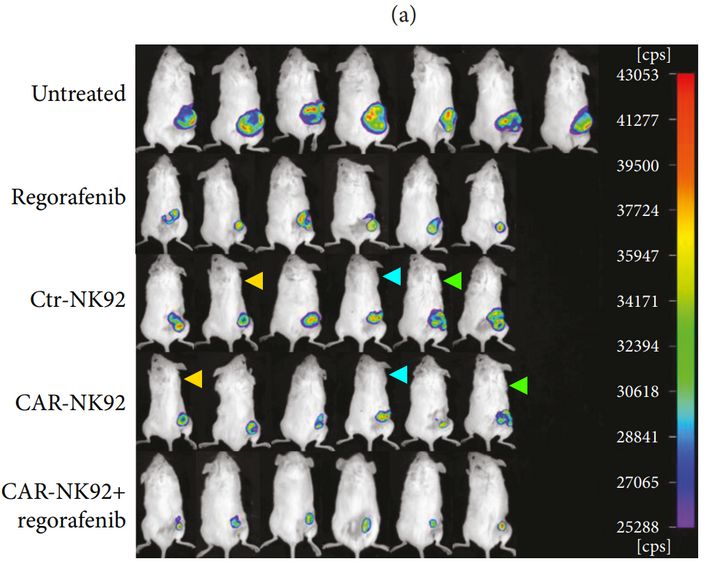

On the subject of bias, Barrett J. Rollins wrote that my last blog on DFCI was “Puerile, snarky, (and) misogynistic”… To avoid any accusations of racism against Americans, I should point the finger at some researchers based in other countries. Since the above-mentioned Christoph Rader is German, let’s start with Germany, specifically the prestigious Max-Delbrück-Center for Molecular Medicine in Berlin. Again we find images of mice (red rectangles) which are supposed to have been taken days apart, but match exactly.

Julia Bluhm, Elisa Kieback, Stephen F. Marino, Felix Oden, Jörg Westermann, Markus Chmielewski, Hinrich Abken, Wolfgang Uckert, Uta E. Höpken, Armin Rehm CAR T Cells with Enhanced Sensitivity to B Cell Maturation Antigen for the Targeting of B Cell Non-Hodgkin’s Lymphoma and Multiple Myeloma Molecular Therapy (2018) doi: 10.1016/j.ymthe.2018.06.012

Next, Norwegian researchers publishing in MDPI: can’t they afford the APC for something better? Once again, the mice in red rectangles are identical.

Yixin Jin, Claire Dunn, Irene Persiconi, Adam Sike, Gjertrud Skorstad, Carole Beck, Jon Amund Kyte Comparative Evaluation of STEAP1 Targeting Chimeric Antigen Receptors with Different Costimulatory Domains and Spacers International Journal of Molecular Sciences (2024) doi: 10.3390/ijms25010586

To Israel now, and the Hadassah Medical Center of the Hebrew University of Jerusalem. These mice also appear to be rather more similar than one would expect by chance. Looking carefully at what’s left of the labels at the bottom of the pictures seems to confirm they were derived from the same original image. Again, the practice of mouse-splicing should be strongly condemned.

Ortal Harush, Nathalie Asherie, Shlomit Kfir-Erenfeld, Galit Adler, Tilda Barliya, Miri Assayag, Moshe E. Gatt, Polina Stepensky, Cyrille J. Cohen Preclinical evaluation and structural optimization of anti-BCMA CAR to target multiple myeloma Haematologica (2022) doi: 10.3324/haematol.2021.280169

Now, some low-hanging mice. How about researchers from China? Zhigang Nian admits these three mistakes in one paper, but fortunately “The conclusion still stands the same”! AACR are remarkably accommodating for these things; I’m sure the authors won’t need to trouble themselves with a correction.

Zhigang Nian, Xiaohu Zheng, Yingchao Dou, Xianghui Du, Li Zhou, Binqing Fu, Rui Sun, Zhigang Tian, Haiming Wei Rapamycin Pretreatment Rescues the Bone Marrow AML Cell Elimination Capacity of CAR-T Cells Clinical Cancer Research (2021) doi: 10.1158/1078-0432.ccr-21-0452

Another from China. The authors also accepted this error, but again, Elsevier’s Molecular Therapy is unlikely to respond quickly, if at all.

Xin Tang, Shasha Zhao, Yang Zhang, Yuelong Wang, Zongliang Zhang, Meijia Yang, Yanyu Zhu, Guanjie Zhang, Gang Guo, Aiping Tong, Liangxue Zhou B7-H3 as a Novel CAR-T Therapeutic Target for Glioblastoma Molecular Therapy – Oncolytics (2019) doi: 10.1016/j.omto.2019.07.002

Now that Hindawi has committed seppuku at the behest of Wiley, documenting problems in its titles might seem like a waste of time, but some people still think Frontiers is a real publisher (hence the shock about the AI rat), so perhaps it is best to stay on top of these things. The mice annotated with triangles below may have different bioluminescent signals, but they are certainly not different mice.

Qing Zhang, Haixu Zhang, Jiage Ding, Hongyan Liu, Huizhong Li, Hailong Li, Mengmeng Lu, Yangna Miao, Liantao Li, Junnian Zheng Combination Therapy with EpCAM-CAR-NK-92 Cells and Regorafenib against Human Colorectal Cancer Models Journal of Immunology Research (2018) doi: 10.1155/2018/4263520

A Blast from the (Recent) Past

So far I have discussed never-blogged-before findings, but to finish I would like to resurrect two examples from a previous effort of mine. Ned Sharpless was Trump’s pick for director of the National Cancer Institute, a choice apparently inspired by Ronald DePinho’s whisperings.

Sharpless Ned, or how half a mouse died

“The President’s goal of ending cancer as we know it today is grounded, in part, in the work of scientific discovery that Ned Sharpless has led at NCI”

These two findings bring us full circle, because it was Ned’s connections that caused me to look carefully at DFCI in the first place… Now that Ned and his coauthors have had a few months to get to grips with these problems, has anything been done? Unfortunately not, perhaps because the last author, Buck Institute professor and senolytics entrepreneur Judith Campisi, recently died, taking all the raw data to the grave.

Marco Demaria, Monique N. O’Leary, Jianhui Chang, Lijian Shao, Su Liu, Fatouma Alimirah, Kristin Koenig, Catherine Le, Natalia Mitin, Allison M. Deal, Shani Alston, Emmeline C. Academia, Sumner Kilmarx, Alexis Valdovinos, Boshi Wang, Alain De Bruin, Brian K. Kennedy, Simon Melov, Daohong Zhou, Norman E. Sharpless, Hyman Muss, Judith Campisi Cellular Senescence Promotes Adverse Effects of Chemotherapy and Cancer Relapse Cancer Discovery (2017) doi: 10.1158/2159-8290.cd-16-0241

But the last author here, NYU Langone professor Kwok-Kin Wong, is certainly alive and well:

Hongbin Ji, Danan Li, Liang Chen, Takeshi Shimamura, Susumu Kobayashi, Kate McNamara, Umar Mahmood, Albert Mitchell, Yangping Sun, Ruqayyah Al-Hashem, Lucian R. Chirieac, Robert Padera, Roderick T. Bronson, William Kim, Pasi A. Jänne, Geoffrey I. Shapiro, Daniel Tenen, Bruce E. Johnson, Ralph Weissleder, Norman E. Sharpless, Kwok-Kin Wong The impact of human EGFR kinase domain mutations on lung tumorigenesis and in vivo sensitivity to EGFR-targeted therapies Cancer Cell (2006) doi: 10.1016/j.ccr.2006.04.022

Closing Remarks

I like mice a lot, so perhaps I have a conflict of interest in writing this blog? I imagine mice have their own goals and aspirations, presumably more honourable than the scientists who experiment on them… Enjoying a tasty snack? Building a cosy burrow? Raising a family?

Perhaps I am too whimsical!

The mice probably wouldn’t care whether the experiment they suffered and died for was meaningful or not, but it does seem to matter to me. When I see a supplementary dataset downloaded by almost nobody, it gives me the sense of a recent memorial that is never visited, while muddled up experiments feel like a grave someone has trampled on.

Here they quickly corrected the mice but forgot other anomalies… let’s wait for the second correction.

Here is the link:

https://pubpeer.com/publications/517D900AD2553680B68527CBC1C250

I am going to say it again, but: stop having your work checked by reviewers, who by definition have much less understanding of your work than you do. Even when you are crooked enough to use AI mice, they are still beneath you and unable to grasp it. Publish your work as preprints, and publish your methodology (complete, no bullshit such as “we used custom scripts”; publish those too). And let the whole community decide whether your work is worthy of citation, of being continued, etc. Peer review is broken and will never improve. If anything, it’s probably going to get worse thanks to Frontiers and MDPI. Scientists are getting what they deserve. The move to change the system is not hard to make.

How would removing peer review stop any of these, or any other, cases of fraud and/or bad science from happening? It wouldn’t. If peer review disappeared overnight and publishing only happened through preprints (and what does “preprint” even mean if that is then the main form of publication?) then fraudsters will still commit fraud, bullies will still bully people, liars will still lie and shoddy science will still be performed. And now we wouldn’t even have the peer review step in place to try to prevent any of that. You’d be throwing the doors wide open to any charlatan, pseudo-scientist, or extremist out there with access to the internet. There are already enough forums where the corrupt and loonies can claim that their opinion trumps science and evidence.

Peer review isn’t perfect by any means, and too many bad practices, and outright fraud, have evolved, but just removing peer review solves nothing and only increases the risk of the worst examples becoming more commonplace. Your other advice, “publish your methodology (complete, no bullshit such as ‘we used custom scripts’, publish them too). And let the whole community decide if your work is worthy of citation, being continued, etc.”, is what should already be happening with any published work. Just because a paper is published doesn’t elevate it to ‘written in stone’ level; anything published is always open to being questioned forever more. Removing peer review will do nothing to stop the fraudsters who don’t follow those principles; it would just make things easier for them.

I also disagree with your claim that “stop having your work checked by reviewers, who by definition have much less understanding than you of your work”. I’ve reviewed a number of papers over the years where I am certain that I knew more about what the authors were claiming than they did. And with most reviewing processes, even if the reviewer isn’t an expert in everything reported in a paper, they may very well know more about at least part of that work. Throwing away the peer review process would just lead to a higher proportion of crap being put online.

Peer review is imperfect and needs to be improved, but you can say the same for laws and courts (people still commit crimes), hospitals (people still die from diseases) etc etc etc. I assume that no one would recommend ending those imperfect systems?

Because reviewers don’t add anything useful. Do you think a preprint that attracts the attention of the whole community could easily contain AI mice? Do you think that if a paper has six open reviews by people interested in it (and not by people asked by a friend as a favour), it could easily contain fake images and photoshopped gels? What I suggest is that the value of a paper should not be determined by useless and incompetent peer reviewers, but only once the paper has gone through the filter of the community: open review, post-publication review, PubPeer. Peer review as it stands now is the worst part of the system. Do you realise they are failing to spot what a 10-year-old could spot? Do you remember the avalanche of peer-reviewed chloroquine madness? Stop with that. We already have tools in place for better publication review. Another thing to change is the pressure to publish; for that, I don’t know what to do.

And seriously, you can also trust your own judgement. Most reviewers don’t read the paper, don’t redo the basic stats, and don’t even look at the figures. I would trust your own opinion on a paper you have thoroughly read over peer reviewers’ opinions any time, and I don’t even know you. We have to stop handing our scientific work to two anonymous people who can offer no guarantee of quality, of fairness, of anything, actually.

We have to reclaim the science. We don’t have to hand it over to private publishers who do a dismal job.

The problem is not in the understanding. It is that, in practice, peer review is non-existent: reviewers rarely care what has been done; they care whether their own papers have been cited. That’s the way to boost your scientific career: more citations leading to more papers and grants, rinse and repeat.

That said, when you send a manuscript for review, you suggest reviewers whom you have cited in that very same paper (and previous ones). Of course, these reviewers return the “favor” when it’s their turn to send a manuscript for review. Pretty nice, isn’t it? Very much resembles a mafia cartel…

Changing the system is very hard, since none of its leading participants has anything to gain from doing so.

In conclusion: yes, mice don’t count, the H-index only counts.

Also, every such instance should lead to the authors’ prosecution for waste of public money AND for animal cruelty. What are animal research ethics committees doing? Are they as incompetent as peer reviewers?

Yes, I feel quite strongly about the animals here; this kind of work shouldn’t be muddled. Mistakes happen, but the ease with which you can find these is concerning. Never mind that we can’t tell if anything else is labelled correctly.

Dear David, thank you for the great job and informative post.

I have recently suspected some figure duplication in the work of this professor:

https://www.ini-hannover.de/en/amir-samii-2/

https://www.leading-medicine-guide.com/en/medical-experts/amir-samii-m-d-hannover

His father, Majid Samii, is the surgeon of the Islamic leaders of Iran:

https://en.wikipedia.org/wiki/Majid_Samii

Majid is now the hottest figure in the Iranian media, and people hate them a lot.

Hi, can you please post your finding on PubPeer? And share the link with us?

PS: David is family name, Sholto is first name. I know, confusing! 🙂

The post is informative and trains the eye to spot irregularities. Great work, Sholto. Thank you, Leonid, for the advice. I have tried twice to post on PubPeer, but my posts have remained under moderation for three weeks. Perhaps regular PubPeer users get through more efficiently.

Please contact me while leaving a FUNCTIONING email address

Madjid Samii and his son Amir Samii:

https://www.scopus.com/authid/detail.uri?authorId=8926812000

https://www.scopus.com/authid/detail.uri?authorId=56024678300

“De-Kuan Chang, Raymond J Moniz, Zhongyao Xu, Jiusong Sun, Sabina Signoretti, Quan Zhu, Wayne A Marasco Human anti-CAIX antibodies mediate immune cell inhibition of renal cell carcinoma in vitro and in a humanized mouse model in vivo Molecular Cancer (2015)doi: 10.1186/s12943-015-0384-3”

Another paper by the same last author.

https://pubpeer.com/publications/8F2AB8D9665A54DF363243CC4184DC

The ethics team for the American Society of Microbiology, which covers J Virol, has written that it cannot detect any scientific issues with the data.

We use IVIS to image vascular/heart valve calcification in mouse models. Duplicating images, or presenting the same mouse for different times or conditions, is just incredibly lazy. Mice can be repositioned, and the image acquisition settings can be changed to generate or exaggerate differences between animals. In fact, I suspect that if you had enough time and the inclination, you could generate one of these mega panels from one control and one experimental animal… I also wonder whether there are examples of this in several of the panels accompanying this article…

Yes, it is very likely that some figures are made up of just one or two mice, repositioned and re-imaged. I see a lot of very suspect figures that don’t include “pixel-perfect duplications”. As ever, these duplications are only scratching the surface.

“because reviewers don’t add anything useful.”

That’s absolute rubbish. I’ve had many useful comments from reviewers that have made my papers better in some way, and I hope my reviews have improved other people’s papers. Absolutist comments are ridiculous when talking about the many people who honestly review other people’s submissions. You seem to think that everyone is a fraud or is rorting the peer review process. Is that what you do?

Preprints do not attract the attention of the whole community. Where did you get that perception from, a Big Bang Theory repeat? Preprint services serve a useful role for some research communities, but they are not a substitute for all of the sciences. If you suddenly replaced all journals with preprints, then the preprints would suffer the same issues plaguing journals, but without the screening of peer review (imperfect as it is, it still helps to catch dodgy work). Plus you’d have the non sequitur of what you mean by prepublishing when you no longer have publishing.

What makes you think that fraud couldn’t and wouldn’t easily propagate in a global prepublishing system when all of the influences encouraging fraudulent behaviour would still be in play? Of course it would, and there would be no independent control at all to prevent the liars, paper millers, or the plain shoddy from immediately putting out whatever they felt like. We have seen enough examples of how the approach of ‘let’s do the media announcements about our big discovery before submitting to peer review’ can go badly awry; dispensing with peer review altogether just increases the chance of such farces becoming a weekly or even daily event.

The issue with greedy publishers does need to be dealt with, but you are conflating it with the issues of fraud and poor science. Throwing out peer review just so that you can raise two fingers to the publishing houses is throwing the baby out with the bath water.

I’m a little concerned that some scientists don’t seem to feel they can comment on anything they aren’t a card-carrying expert on, and apparently feel that they can’t honestly review someone else’s work from a slightly different field. That is just wrong. As a scientist I’ve been able to work on a range of topics. I’m not an expert in most of them, but I do know enough to critically assess a stranger’s writing, understand how different techniques work and whether that fits with what is being presented, look at their data and assess whether it looks reliable, and ask whether their analyses and conclusions convince me. And that’s what many other scientists do as well. As a scientist you should have been trained to be a critical thinker, not just a technician who knows how to do a few things well but can’t think outside that box. Removing the whole peer review system will not result in every preprint, or even anywhere near the majority of them, suddenly being earnestly considered by half a dozen world experts. All you would get is a short-lived feeling of triumph from driving predatory publishers out of business, followed by the depressing outcome that nothing stops the fraudsters and gameplayers from submitting to the world whatever they like at the click of a button.

If you think it’s frustratingly difficult to get bad papers retracted now, just picture the scene in a prepublishing-only system, where nothing gets retracted and any old rubbish can be defended by an endless sequence of “No, I’m right, you’re wrong” posts. Consider how that would end up.

To Citrus, my last post was going on long enough and I forgot to add, thank you for your reply and the discussion. I obviously disagree with you on many points but thanks for taking the time to explain your thoughts on this. My replies aren’t directed to (or at) you, but to (or possibly at) the broader community.

Is anyone ever going to lose a job, or a grant, over these forgeries?

Fairly unlikely in most of these cases, although it can happen.

“Is anyone ever going to lose a job, or a grant, over these forgeries?”

It doesn’t happen as much as it should but yes it does happen.

Does anyone get a job, or a grant, thanks to forgeries? The unequivocal answer is yes. A recent survey by Conix et al. (PLOS ONE, 2023) reveals that a staggering 60% of grant applicants admit to engaging in questionable research practices in their submissions. This suggests a troubling inverse: failure to cheat may result in losing a job, or a grant. You are right, ewanblanch, to say that not everyone is a fraud, but it seems we are quickly heading down that path.

“failure to cheat may result in losing a job, or a grant.”

Natural selection! A natural selection for dishonesty.

Odd that many scientists didn’t, or still don’t, recognise that it is a general principle in biology and that it applies to scientists as well.

Might be something to your argument. The data do seem to be in.

https://www.livescience.com/animals/the-animal-kingdom-is-full-of-cheats-and-it-could-be-a-driving-force-in-evolution

Darwin was hidebound by his times, so didn’t like to mention it.

Furthermore, successful cheaters are reproductively successful and are not incels. Example: https://www.inforum.com/caroline-lesne

The problem with photographing western blots, or images of cells and animals is that the images contain a lot of unique additional identifying information that pesky online sleuths can use to identify image manipulation and duplication. With that in mind, capillary western blotting systems like this one are a godsend to cheaters. The protein immunocomplexes are detected using fluorescence or luminescence and the software then generates a completely artificial image that looks like a western blot. What could possibly go wrong…

https://www.bio-techne.com/instruments/simple-western

Monty Python – mouse organ sketch (youtube.com)

No, not all reviewers are incompetent or lazy. In my experience, for my last paper, one of the reviewers was utterly incompetent, but the others were not. For the last paper I reviewed, I checked all the references and made sure that the authors’ conclusions were supported by their data and that their experimental work was sound.
