No scientist is safe from ending up as coauthor on a paper with manipulated data. Today’s science is an international and collaborative enterprise, with many contributors from several labs involved in the production of one research paper. It can be a rogue early career researcher or a rogue professor who provided rigged data. But eventually it becomes your responsibility to act. I know of several cases where scientists retracted their own papers after becoming aware of manipulated data therein. Two examples are the plant scientists Jonathan Jones and Pamela Ronald, who only gained additional respect for such brave acts.

Even Arturo Casadevall, professor and department chair at Johns Hopkins Bloomberg School of Public Health, AAAS Fellow, deputy chief editor of Journal of Clinical Investigation and founding editor-in-chief of mBio, has now had his Road to Damascus moment. Only it was not a Saul to St Paul conversion: it was St Paul who recognised his own hypocrisy and promised to do better.

Casadevall’s scientific specialty is microbiology and immunology, but he is well known beyond this domain, as probably the most recognized patron saint of research integrity. His peer reviewed studies on the publish-and-perish culture, data manipulation and scientific fraud in the context of retractions are highly cited and make scientific news every time another one appears. Many of those were coauthored by his microbiologist colleague Ferric Fang, and more recently, Casadevall liaised with another fellow microbiologist, the image duplication sleuth Elisabeth Bik. She studied over 20,000 papers, and together Bik and her coauthors determined that 4% of them contained image duplications. A follow-up study by Bik and Casadevall revealed that 59 out of 960 microbiology papers contained such irregularities. It proved worse with papers not yet published: 55 of 200 screened manuscripts had issues with their images.
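To put those counts on one scale, here is a minimal sketch that turns them into percentages. The counts are the ones quoted above; the helper name `duplication_rate` is only an illustrative assumption, not anything taken from the studies themselves.

```python
def duplication_rate(flagged: int, screened: int) -> float:
    """Return the share of screened papers flagged for image problems, in percent."""
    if screened <= 0:
        raise ValueError("screened must be positive")
    return 100.0 * flagged / screened


# Counts as quoted in the text above.
published_rate = duplication_rate(59, 960)    # published microbiology papers
manuscript_rate = duplication_rate(55, 200)   # screened, not yet published manuscripts

print(f"published papers: {published_rate:.1f}%")
print(f"screened manuscripts: {manuscript_rate:.1f}%")
```

That works out to roughly 6% of the published papers against 27.5% of the screened manuscripts, so the unpublished set was flagged more than four times as often.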

Now however, first stones were cast against Casadevall’s own published peer reviewed research papers in microbiology and immunology. This is again a situation where it matters less who manipulated data and why, but how the professors in charge respond to the evidence. Casadevall’s expressed views when his own research was concerned have evolved in a brief Damascene moment, and a sinner St Paul promised to become a virtuous St Paul. A perfect story for Easter.

In April 2019, the St Paul of research integrity Arturo Casadevall wrote this in Nature, possibly already well aware of the PubPeer allegations against his own papers:

“I worry that the seeds of misconduct, although they grow in only a very few individuals, are planted in the very heart of academic biomedical sciences.[…]

Microbiologist Ferric Fang and I have proposed one method, based on the five pillars of logic, experimental redundancy, error recognition, intellectual honesty and quantitative analysis using probability and statistics (A. Casadevall and F. C. Fang mBio 7, e01902-16, 2016). Used in combination, these should produce more robust and resilient scientific results.

Practically everyone in the system now, including me, is part of the impact culture, so changing it will be hard.”

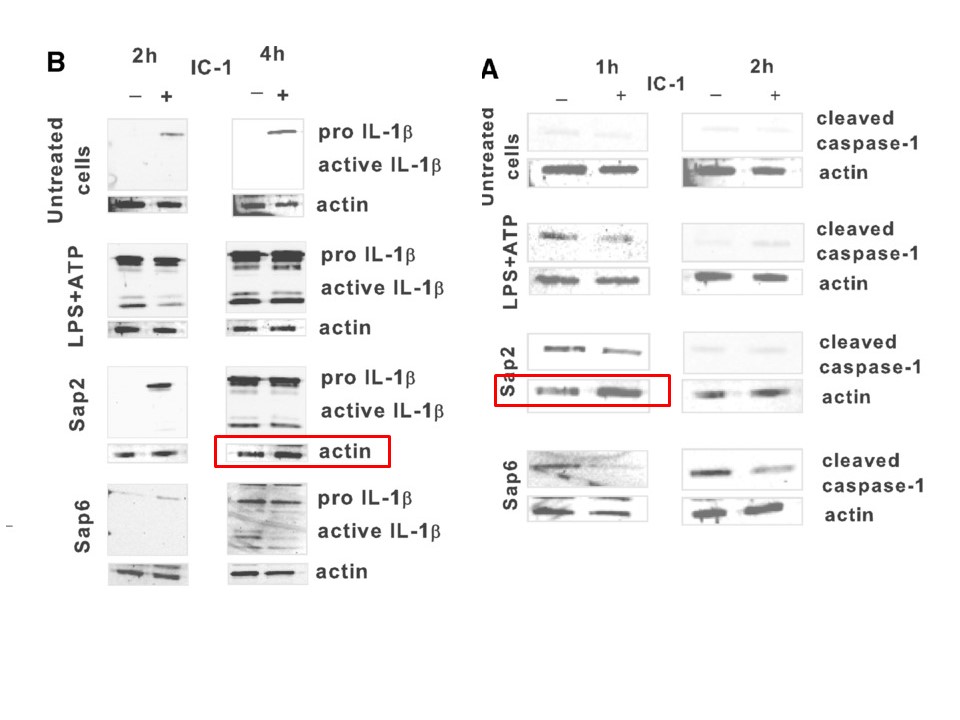

The impact culture apparently produced a loading control duplication in Figure 2 of this paper:

Eva Pericolini , Elena Gabrielli , Elio Cenci , Magdia De Jesus , Francesco Bistoni , Arturo Casadevall , Anna Vecchiarelli

Involvement of glycoreceptors in galactoxylomannan-induced T cell death

Journal of Immunology (2009) doi: 10.4049/jimmunol.0803833

The last author Anna Vecchiarelli built her entire academic career at the University of Perugia in Italy, and is since 1994 professor of microbiology there. She lists Casadevall as the first name among her international scientific collaborators. Vecchiarelli explained on PubPeer:

“The actin control for panels A and D is the same because these images came from the same immunoblot probed with antibodies to caspase 8 and 9 after it was stripped. Hence the use of same actin control band for both panels is correct and appropriate. In retrospect, this information should have been added to the figure legend to avoid any misinterpretation or confusion.”

It is almost credible. Almost, because you would have to believe Vecchiarelli’s novel idea that one can strip one sample off a blot and re-blot it with another sample. Look at the last sample of each blot, highlighted with a red circle: it shows different experiments, described in the figure legend as cells cultured “in the presence or absence of caspase-8 or caspase-9 inhibitors (IC-8 or IC-9; both dilution 1/1000)”. Those are different cultures, treated with different inhibitors. Meaning, the figure does show two physically different blot membranes, but the same loading control, which can belong to either of the two or even to neither of these gels.

The two actin blot copies are not exactly identical; they might be different exposures of the same blot, or the same picture with digitally enhanced brightness. That would presumably fall into Casadevall’s Category 2, since he himself provided a handy guide to how such mistakes should be classified, in Bik et al MCB 2018:

“We categorized inappropriate image duplications as simple duplications (category 1), shifted duplications (category 2), or duplications with alterations (category 3), with category 1 most likely to result from honest error, while categories 2 and 3 have an increased likelihood of resulting from outright falsification or fabrication.”

The same categorization was used by Casadevall before, in Bik et al mBio 2016 and in Fanelli et al BioRxiv Preprint 2017.

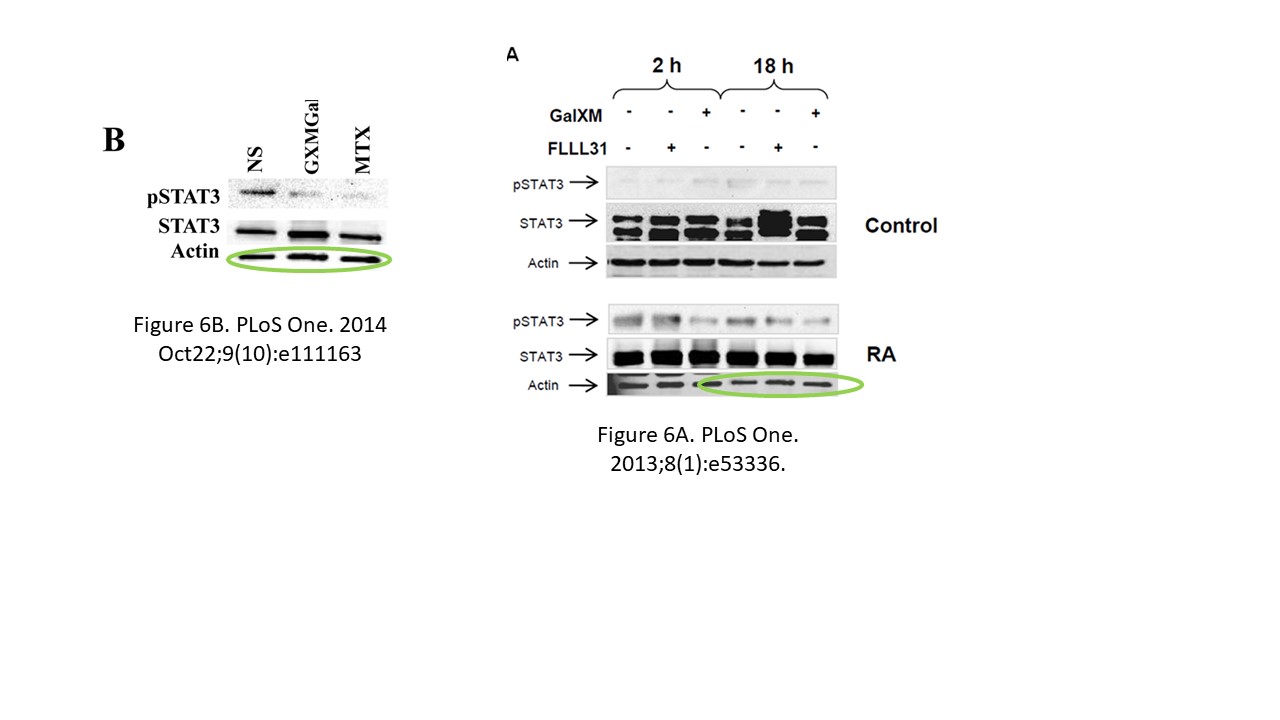

A similar kind of Category 2 blot reuse happened here:

Eva Pericolini , Elena Gabrielli , Alessia Alunno , Elena Bartoloni Bocci , Stefano Perito , Siu-Kei Chow , Elio Cenci , Arturo Casadevall , Roberto Gerli , Anna Vecchiarelli

Functional improvement of regulatory T cells from rheumatoid arthritis subjects induced by capsular polysaccharide glucuronoxylomannogalactan

PLoS ONE (2014) doi: 10.1371/journal.pone.0111163

Vecchiarelli explained on PubPeer:

“The actin control for panels in Figures 1 and 6 are the same because this images came from the same immunoblot probed with different antibodies. Hence, the use of the same actin control band for both panels is correct and appropriate. In retrospect, this information should have been added to the figure legend to avoid any misinterpretation or confusion.”

It is true, both figures show the same cells, equally treated. But there is a significant difference: Figure 1 says “After 2 and 18 h (A) […] of incubation, cell lysates were analyzed by western blotting” while Figure 6A contains no data from 2h incubation, as the legend says “After 18 h of incubation, cell lysates were analyzed by western blotting”. This means the actin loading control from Figure 6A, purportedly showing samples harvested after 18h incubation, was cropped and reused to show the samples harvested after 2h incubation. Or maybe the other way around, or the actin gel came from somewhere else altogether. If the author trolls like this, everything is possible.

The following example ventures more into Category 3:

Anna Vecchiarelli , Donatella Pietrella , Francesco Bistoni , Thomas R. Kozel , Arturo Casadevall

Antibody to Cryptococcus neoformans capsular glucuronoxylomannan promotes expression of interleukin-12Rbeta2 subunit on human T cells in vitro through effects mediated by antigen-presenting cells

Immunology (2002) doi: 10.1046/j.1365-2567.2002.01419.x

Also here Vecchiarelli explained on PubPeer:

“The two top panels are indeed the same. This was the result of inadvertently inserting the FACS same file into the figure twice. Note that the numbers denoting the percentage of cells in both panels are different and these are correct. The top panels represent the irrelevant antibody control, which shows little binding to these cells. The error in figure construction does not change any conclusions in the paper.”

That is actually an outrageous explanation. Granted, anyone can accidentally reuse the same flow cytometry (FACS) file. But then the quantified numbers would be the same in both panels. This is because when a flow cytometry experiment is quantified, the same gating is applied to all samples. To deliberately apply variable gates to each sample would be data manipulation, as I explain here for the zombie scientist Sonia Melo and for another immunologist, Andrea Cerutti. Yet Vecchiarelli seriously wants us to believe that the difference in quantified numbers proves that the results are reliable. She also educates us that the negative FACS controls are “irrelevant”. Maybe in her Perugia lab, but not in the real world of science. To me, all this proves that either the authors rigged FACS settings, or covered up their file duplication by posting different numbers. Either way, this looks like Casadevall’s own category 3, which suggests “outright falsification or fabrication”.
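The gating argument can be illustrated with a minimal sketch on synthetic numbers. Everything in it is an assumption made for illustration: the fluorescence values are randomly generated, `GATE_THRESHOLD` is an arbitrary cut-off, and `percent_positive` is a hypothetical helper, not the software or the settings used for the paper.

```python
import random

GATE_THRESHOLD = 100.0  # hypothetical fluorescence cut-off, identical for every sample


def percent_positive(events, threshold=GATE_THRESHOLD):
    """Percentage of events whose fluorescence lies above the gate."""
    positive = sum(1 for value in events if value > threshold)
    return 100.0 * positive / len(events)


# Synthetic "FACS file": 10,000 log-normally distributed fluorescence values.
rng = random.Random(0)
facs_file = [rng.lognormvariate(4.0, 0.5) for _ in range(10_000)]

# The same file inserted into a figure twice, quantified with the same gate.
panel_a = percent_positive(facs_file)
panel_b = percent_positive(list(facs_file))

# Identical events only yield a different percentage if the gate is moved
# for one of the panels.
panel_b_moved_gate = percent_positive(facs_file, threshold=150.0)

print(panel_a == panel_b)             # same file, same gate
print(panel_a != panel_b_moved_gate)  # same file, different gate
```

In this toy setup the duplicated file gives the identical percentage under the fixed gate, and the number only changes once the gate is shifted for one panel.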

In 2016, the St Paul of research integrity (together with Fang) proposed for exactly such situations a “Pentateuch for scientific rigor”, inspired by “five elements, pillars, or sacred texts” on which “Traditional Chinese philosophy, Hinduism, Islam, and Judaism are each founded”:

“We suggest that scientific rigor combines elements of mathematics, logic, philosophy, and ethics. We propose a framework for rigor that includes redundant experimental design, sound statistical analysis, recognition of error, avoidance of logical fallacies, and intellectual honesty”.

Now put Casadevall’s Rigor Pentateuch into the context of the following figure.

C. Monari , T. R. Kozel , F. Paganelli , E. Pericolini , S. Perito , F. Bistoni , A. Casadevall , A. Vecchiarelli

Microbial Immune Suppression Mediated by Direct Engagement of Inhibitory Fc Receptor

Journal of Immunology (2006) doi: 10.4049/jimmunol.177.10.6842

This was posted on PubPeer in September 2018 and Casadevall was informed of the problem with that paper, but neither he nor Vecchiarelli has replied there yet. Admittedly, there is not much to say really. The blots look like a Photoshop train wreck with their cornucopia of copy-pasted bands. This Monari et al 2006 paper acknowledges NIH grant funding, and it is reassuring to know that, as Casadevall put it in Stern et al eLife 2014:

“We make the assumption that every dollar spent on a publication retracted due to scientific misconduct is ‘wasted’. However, it is conceivable that some of the research resulting in a retracted article still provides useful information for other non-retracted studies, and that grant funds are not necessarily evenly distributed among projects and articles. Moreover, laboratory operational costs for a retracted paper may overlap with those of work not involving misconduct. Thus, considering every dollar spent on retracted publications to be completely wasted may result in an overestimation of the true cost of misconduct”

Oh well, that’s OK then. But it was not just papers coauthored by Vecchiarelli. Together with Alexandre Alanio, tenured researcher at Institut Pasteur in Paris, France, Casadevall deployed the Infinite Improbability Drive to generate some remarkably overlapping data.

Benjamin Hommel , Liliane Mukaremera , Radames J. B. Cordero , Carolina Coelho , Christopher A. Desjardins , Aude Sturny-Leclère , Guilhem Janbon , John R. Perfect , James A. Fraser , Arturo Casadevall , Christina A. Cuomo , Françoise Dromer , Kirsten Nielsen , Alexandre Alanio

Titan cells formation in Cryptococcus neoformans is finely tuned by environmental conditions and modulated by positive and negative genetic regulators

PLoS Pathogens (2018) doi: 10.1371/journal.ppat.1006982

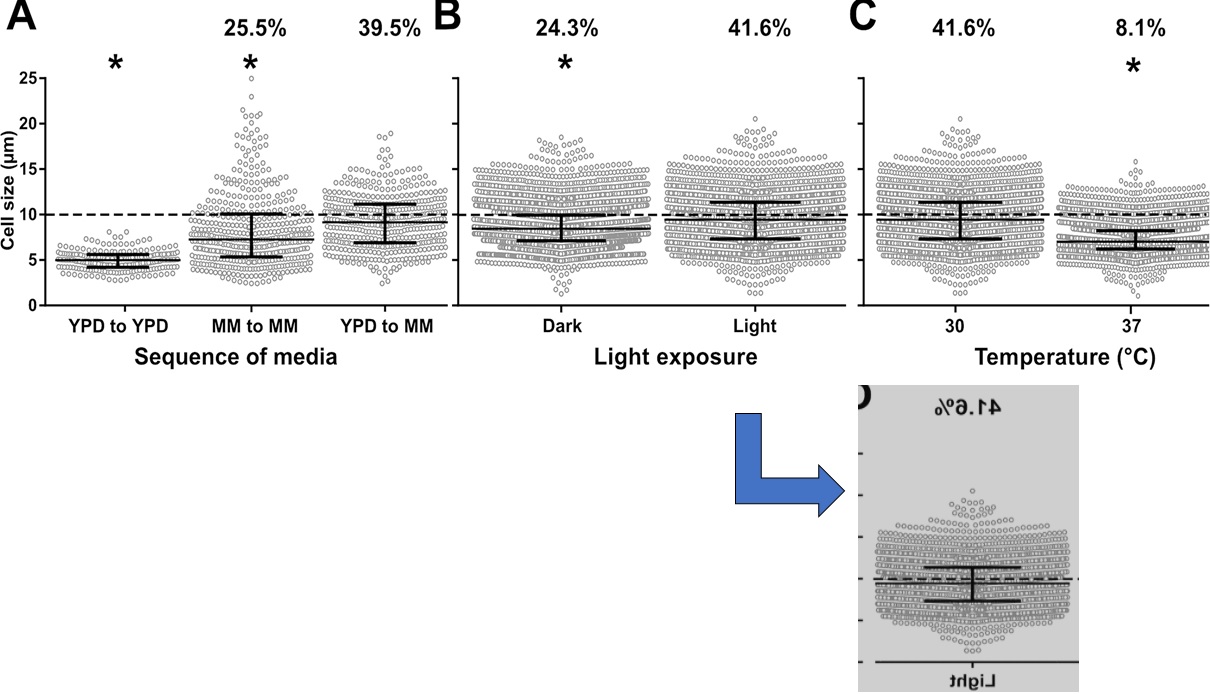

The anonymous PubPeer critic was puzzled that two large sets of data points look identical, only flipped horizontally: “Figure 4: Light (B) and 30 (C) are mirror images. It isn’t clear if they are the same or different cells”. The same commenter then added this peculiarity, saying “Larger control values are the same in different controls”, as highlighted with boxes:

By the laws of probability, one should expect those to be the same sample datasets, presumably mistakenly copy-pasted. Or maybe there was a scientific rationale to reuse them? Alanio explained on PubPeer:

“Thank you for pointing this out. As different conditions are tested together (rpm, light, Temperature), some data are exactly the same corresponding to the combined condition. In retrospect, this should have been made clearer in the legend”

The man is apparently not joking. Well, his own Institut Pasteur recently declared that three different gel lanes can look identical if assembled into a figure, so maybe Pasteur is some kind of a black hole where data is subject to mysterious quantum singularity effects?

I contacted Casadevall, and since I didn’t receive any reply, I sent a reminder. This was what came back:

I had drafted a response to you but after reading your letter to me several times I was not sure whether to send it since your letter implied that you had already made conclusions, as you stated that ‘one sees evidence of research misconduct and intentional falsification of data’. In fact, if we have learned anything about figure problems from the Bik et al MCB and JBC studies is that one cannot discern ‘intent’ from looking at problematic figures. When these have been investigated by institutions and two journals the overwhelming majority (>90%) were found to be errors. Please know that this will be my only response.

Arturo

The same message was followed by what must have been the drafted response:

Dear Leonid,

Thank you for your message. From your letter, the Pubpeer and Twitter comments I assume that it was you who posted the criticisms on those figures. Thank you for identifying yourself.

We have taken the Pubpeer comments seriously and responded rapidly. We thank you for alerting us to those figure problems, which provided us with the opportunity to respond in Pubpeer. Responses have been posted to 4 of the 5 Pubpeer comments. I have reviewed the papers and discussed the figure problems with my collaborators and I believe that each was either an error in figure construction or a problem in not stating what was done in the figure legend. One comment has not been answered because we are still trying to find the original data that is more than 13 years old and it can be difficult to reconstruct what was done so long ago. I do not believe that there was misconduct or intent to deceive in any of these cases.

I do not agree with your comment that these problems represent ‘a problematic attitude to research integrity, one sees evidence of research misconduct and intentional falsification of data‘. Both Anna Vecchiarelli and Alexandre Alanio are fine individuals who are working very hard to understand fungal diseases in the hope of making better therapies for those affected. They are both scientists with great integrity who are highly respected by their colleagues. Both Drs. Alanio and Vecchiarelli responded right away and agreed to look into the figure issues, which is a measure of how responsible they are.

As you know, two studies have now shown that the majority of figure problems (> 90%) are errors in figure construction. The first was the Elisabeth Bik MCB study and the second was from the JCI. I don’t know if you have ever assembled figures for publication but I assure you that it is very easy to make errors when cutting and pasting different files into a composite figure. Until recently, we were not aware of how easy it was to make such errors. In fact, it was not until the work of Elisabeth Bik and others like yourself that the scientific community learned how prevalent these were. The Pubpeer postings to the Vecchiarelli and Alanio papers fall into the figure error category. These figure construction errors or lack of precision in the legend do not affect the conclusions of these papers. We stand by the conclusions of these papers and no retractions are warranted, or planned.

Another conclusion from the two published studies on image problems in the literature is that it is very difficult to infer intent from looking at figures alone without reviewing the original materials. From your letter, I understand that you are not completely satisfied with the answers that were provided in Pubpeer. However, these are the answers that Drs. Vecchiarelli and Alanio provided based on the review of the pertinent data from their laboratories. I accept their explanations.

Sincerely,

Arturo

Actually, it was not I who posted the comments on PubPeer, and I don’t even know who did. Casadevall’s tirade speaks volumes and needs no further commenting. Except maybe, with more of his own quotes. It seems our expert for research integrity spoke of himself here, in Casadevall et al mBio 2016:

“Current training in research integrity is largely focused on young scientists who are in educational programs and is accomplished in the form of didactic courses or case studies that seek to teach ethical principles. However, an analysis of scientists found to have committed misconduct shows that the problem is prevalent throughout all ranks, ranging from students to established investigators (39). This finding suggests the need to increase the focus of research oversight and training to all members of the research community irrespective of their academic rank”

Here is another useful Casadevall quote, from Fang et al PNAS 2012:

“Although articles retracted because of fraud represent a very small percentage of the scientific literature (Fig. 1B), it is important to recognize that: (i) only a fraction of fraudulent articles are retracted..”

Ferric Fang refused to share his views on Vecchiarelli’s comments and Casadevall’s support of those, because of traveling. He merely stated:

“All I can say is that I have learned from experience not to arrive at conclusions until I have had an opportunity to review the primary data”

Funny, Fang and Casadevall were perfectly able to sort the duplication issues discovered by Bik (in papers from other people) into 3 distinct categories, ranging from honest errors to misconduct, without reviewing the primary data:

“Category II (duplication with repositioning) and category III (duplication with alteration) may be somewhat more likely to result from misconduct, as conscious effort would be required for these actions”

Soon after my rather pointless exchange with Fang, Casadevall unexpectedly sent me another email:

Dear Leonid,

Although my last message stated that I would not write further, I take that back.

On reflection, both of us are interested in improving science and I respect you for your efforts. I was so upset by your conclusion that there was evidence of misconduct in the figures and in the initial Pubpeer replies from the Vecchiarelli laboratory that I did not consider your criticisms carefully. I apologize for that. However, after I cooled off, I re-read your message, went back to the figures and I agree that the response to the blot showing the IC8 and IC9 lanes is insufficient. Like Anna, I initially totally missed the red circle and focused only on the actin band and the other postings need additional information and may warrant formal corrections. I contacted Anna again urging her to make additional clarifications to pubpeer comments and your criticisms and she already responded that she is contacting the trainees and digging up the original data. I have also spoken to Dr. Alanio about the concern that his Pubpeer response is not clear and urged him to either write a more detailed response in PupPeer and/or post a correction to the Plos Pathogens paper in the journal.

I believe that both Drs. Vecchiarelli and Alanio are honest hard working scientists and both to want to correct the literature. I have no reason to believe any of these issues were due to intent to deceive or misconduct.

One more thing in response to your last message. I did look at your website and I know that you are a molecular biologist. My prior comment as to whether you had assembled figures simply reflected the fact that just because one is scientifically trained does not imply that one has assembled figures.

For example, I have never assembled a figure for publication as this is usually done by my trainees. However, you can be sure that as a result of the work of Elisabeth Bik, yourself and others that I have become much more savvy in inspecting figures for errors.

Stay tuned for additional corrections and clarifications. You can be sure that I am committed to getting to bottom of these issues and I have no problem in urging retraction if warranted, or repeating the work if there are any remaining questions about the results.

Sincerely, Arturo

Even though I specifically asked Casadevall to comment on this figure he and Vecchiarelli published, he is not fully there yet.

And here ends the parable of St Paul on the road to Damascus. Happy Easter everyone.


Someone who has published many articles and says with impunity “I have never assembled a figure for publication as this is usually done by my trainees” is not a scientist and should be out of science. Were the trainees trained to manipulate images? Who did the experiments? Who wrote the articles? Unfortunately there are many people who did not even read the articles that granted them honors.


Agree what a horrific claim!


The man apparently knows what’s coming next (“Stay tuned for additional corrections and clarifications”), and it seems to me he’s preparing his exit strategy now. I bet you a pint, in a few weeks we’ll hear: “Sure, yes, there’s conclusive proof of intentional falsification now, but please be reasonable – I personally never was involved in the work of my trainees!”

No new story here.


Casadevall and Fang. Check their co-authored editorials, especially the dates of publication. Abuse of editorial power at mBio?


You know that he’s not actually a corresponding author on any of these papers, right? That doesn’t absolve him of guilt, but at the same time I think you’re misplacing the blame.


I am not sure why you protest this association. Prof Casadevall gave his expert view that these papers contain no manipulated data, only minor errors, and that Prof Vecchiarelli’s integrity is not to be doubted. What was your point again?


I did not get your point about FACS plots. Indeed, they look pretty different to me.


My bad, I forgot to mention that the cell populations are shifted in Vecchiarelli et al 2002. Otherwise they do look same though, see red box.


It looks more than just a simple shifting… Maybe I’m wrong but I think besides shifting, they stretched it a little bit to fool observer… Strange… These guys still manipulate their data in really outdated ways… I don’t know when they want to start to learn modern data fabrications rules… These plots could be created easily by some random sampling and a bit scripting and nobody can catch them never… sigh!


Be fair, the paper is from 2002! Back then, nobody expected scrutiny. Now cheaters got smarter


Now some interesting anonymous comments by a “Conospermum Wycherleyi” were posted on PubPeer on 20.04.2019.

Regarding duplicated, shifted and re-quantified FACS in Vecchiarelli et al 2002:

https://pubpeer.com/publications/53682980E2263F92A8F4506B96D20A#3

Regarding Pericolini et al 2009, where blots with different samples listed are declared to be of same blot membrane:

https://pubpeer.com/publications/0363CEDE62D0A4D79F80697A2A7345#3

Regarding Pericolini et al 2014, where same bands stand in for samples at 2h and 18h:

https://pubpeer.com/publications/B828BF21183003EA7D993DDE0563CA#3

Regarding Hommel et al 2018:

https://pubpeer.com/publications/0186F35CC548FA9CD6E52E12AF86D6#5

Another comment was posted on the thread which indeed raised a false alarm:

https://pubpeer.com/publications/AD7408D99F99F380FE8E5A48F65AA2#4

This is peculiar in many respects. Whoever did this seems to be responding to my reporting, trying to help Dr Casadevall. Not sure if this is helpful though. What I personally find worrisome is that PubPeer moderation not only allows such trolling, but on other occasions repeatedly prevents posting of actual evidence, which was even verified by journal correction. How much evidence never gets to appear on PubPeer because it targets “protected” authors or comes from undesired commenters?


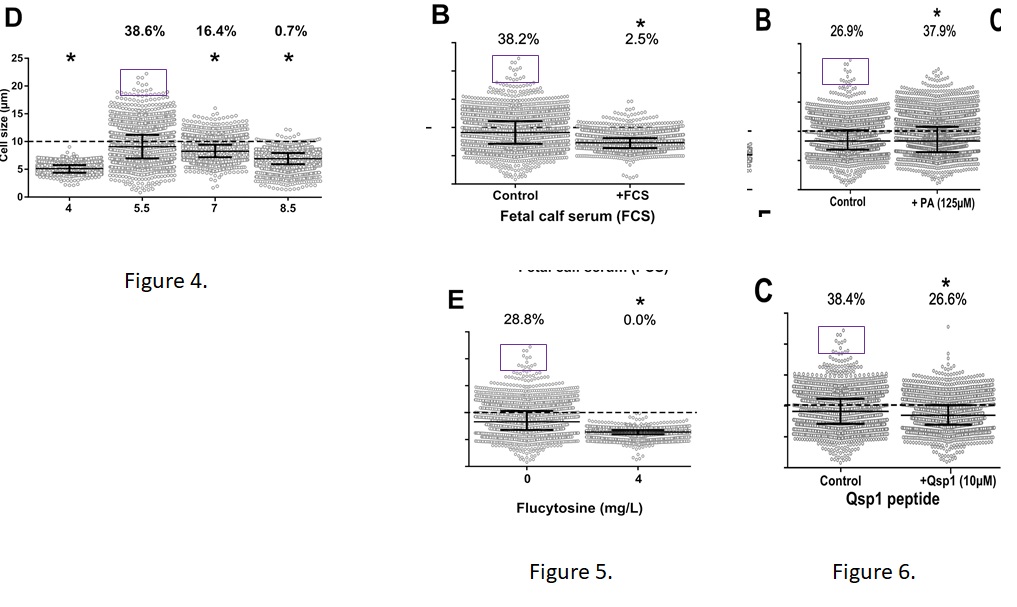

Meanwhile, another beauty from Vecchiarelli lab, this time without Casadevall:

Donatella Pietrella , Neelam Pandey , Elena Gabrielli , Eva Pericolini , Stefano Perito , Lydia Kasper , Francesco Bistoni , Antonio Cassone , Bernhard Hube , Anna Vecchiarelli

Secreted aspartic proteases of Candida albicans activate the NLRP3 inflammasome

European Journal of Immunology (2013)

doi: 10.1002/eji.201242691

https://pubpeer.com/publications/EF7DC117D9F3B32DAFD89CAE2CB044


It seems our little Pubpeer troll friend “Conospermum Wycherleyi” changed his/her name: https://pubpeer.com/publications/EF7DC117D9F3B32DAFD89CAE2CB044#3

“Commiphora Caerulea: Have you read this article? I’m just asking cause your questions are far from constructive comments…”


I have a hard time to understand why an independent person as “Conospermum Wycherleyi” is trying to defend Vecchiarelli and Casadevall? Maybe this little friend is Casadevall or Vecchiarelli or one of their associates/students?! Who knows…


Huh, if someone has other opinion than you, you call it “trolling”. I’m not sure if my comments are trolling, then what is the name of your blog?! Also, you should learn to not accuse people with sloppy evidences of “this picture is so “similar” to this one, so this is a case of research misconduct”. I think you received final coup de grace from Casadevall himself by saying: “I don’t know if you have ever assembled figures for publication”.


Behold a likely duplicated loading control. I am looking forward to Dr Vecchiarelli’s explanation of how this is a most appropriately reused same blot membrane, with extra insights from PubPeer troll Conospermum Wycherleyi who is too chicken to sign his passive-aggressive diatribes.

Eva Pericolini , Alessia Alunno , Elena Gabrielli , Elena Bartoloni , Elio Cenci , Siu-Kei Chow , Giovanni Bistoni , Arturo Casadevall , Roberto Gerli , Anna Vecchiarelli

The microbial capsular polysaccharide galactoxylomannan inhibits IL-17A production in circulating T cells from rheumatoid arthritis patients

PLoS ONE (2013) doi: 10.1371/journal.pone.0053336

https://pubpeer.com/publications/ADE781DDC93376924BBE34FE85E5F4#1


The problem with your replies is not your anonymity, but your defense of the questioned images without an explanation. Rather than questioning/defending the motives or skills of the people involved, question or defend the work. If you don’t do that you will be viewed as a partisan and your views disregarded.


Regarding Hommel et al. (the box plots) I think the authors’ response makes perfect sense. The same control is used to compare experimental variables in different dimensions (i.e. illumination, temperature) in a single experiment, and since these comparisons are presented in different panels for simplicity, the controls are duplicated (don’t know why inverted, but this is immaterial – could be a software thing). For example, in 4B, “light” is the control for “dark” (presumably both at 30°) and in 4C the same light, 30° population serves as control for light, 37°. The legend could certainly have been clearer, but it took me about 1 second to figure it out. And I admit that I probably would have put all these comparisons in a single panel (same with the other figures), but there is a tradeoff between clarity of exposition of how the experiments were done, and clarity of exposition of the scientific narrative, so I can see why the authors did it the way they did.

Regarding the Westerns, from cursory examination I agree there are problems there.

Now excuse me but I have to go back assembling some figures.


Of course, if I want to save my privacy, you call me chicken… but at the meantime you defend the anonymity of those people who commented on Catherine Jessus papers on Pubpeer and call the demand for identity of whistleblowers and her accusers, an act of stalinist pravda, cause you like their opinion but obviously not mine. Sigh… you must change your career to become a lawyer…


If not for your English, I would suspect you being Brandon Stell. So you are one of those Jessus-worshippers, this is what’s it about? Not Casadevall then?


annon spoiler alert: Conospermum Wycherleyi is a shrub.

