Elsevier recently announced a crackdown on peer reviewers who rig citations. The problem goes further, however, since most rotten fish (which many Elsevier journals are) stink from the head down. The following guest post by a Scandinavian cancer researcher, writing under the pseudonym “Morty”, reveals some clever tactics academic editors use to boost both their own and their journal’s publication output.

This time it is not the simple mandate that authors cite random papers from the journal they submit to, or the publishing of any fraud without looking; it is more sophisticated. First of all, there are editors who use their journal as the main pipeline for their own research. Sure, editors are expected to publish their own work in the journal they edit, but the key point is not to overdo it. Depending on the field, up to one or two papers per year can be OK, but beyond that one does wonder whether the work is so awful that no journal but your own wanted it.

One particularly egregious case Morty presents is that of a certain British former academic and now businessman: 95% of his recent “research” output was published in the two Elsevier journals he presides over as Editor-in-Chief. Another option to game the system: when several academics sit on the editorial boards of two or more seemingly unrelated journals, they can push through each other’s papers in a mutual back-scratching manner. And yet another route to boost your publication output seems to be to require a gift authorship as a reward for pushing somebody’s paper into the journal you edit.

Editors: Captains on rat ships

By “Morty”

We are witnessing a crisis of trust in science, where researchers struggle to orient themselves in the wild west of scholarly literature. Instead of fighting global misinformation, research scientists are occupied with their own little universe and all too concerned about research funds and securing a future career. Although sad, it is in some way understandable. Even as academic journal editors, scientists often fail in their responsibility. What kind of science do we get when the editors of so-called scientific journals don’t bother about quality and stop maintaining true scientific standards?

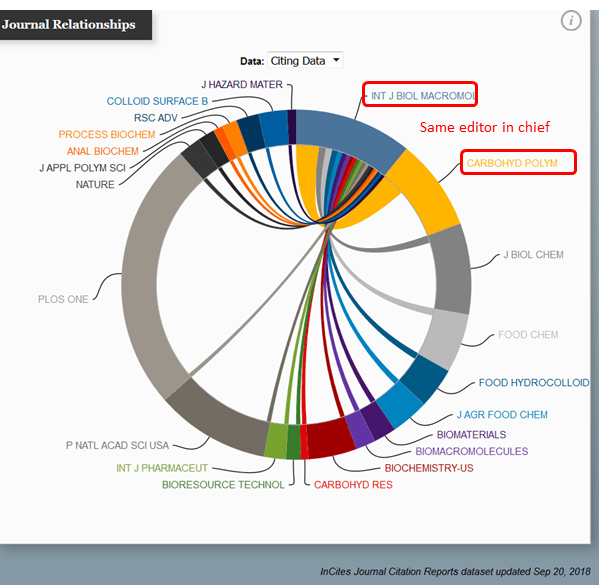

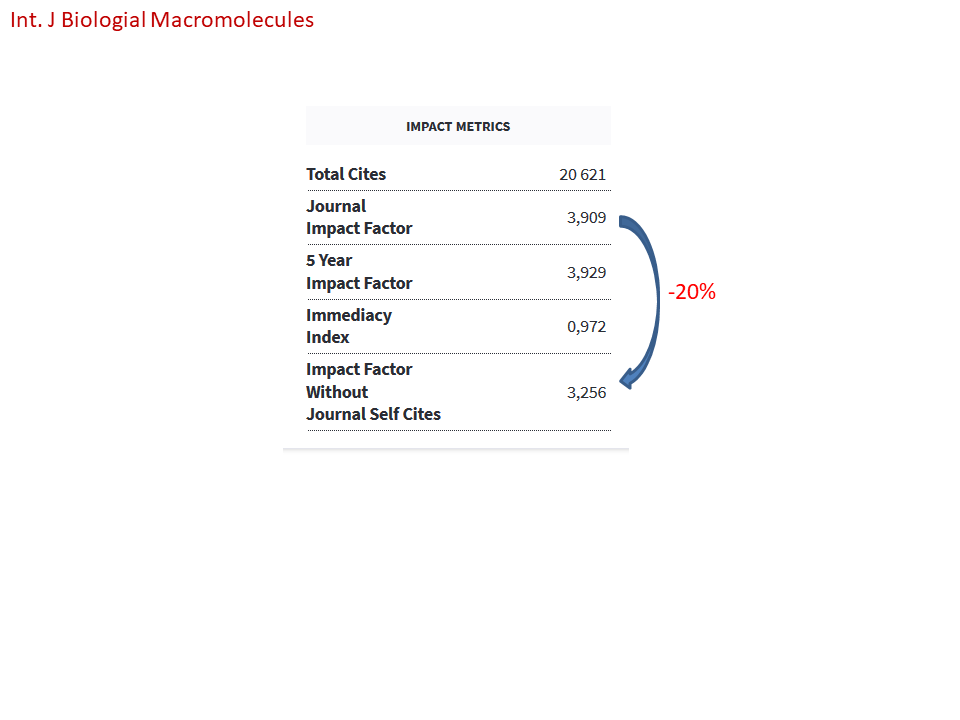

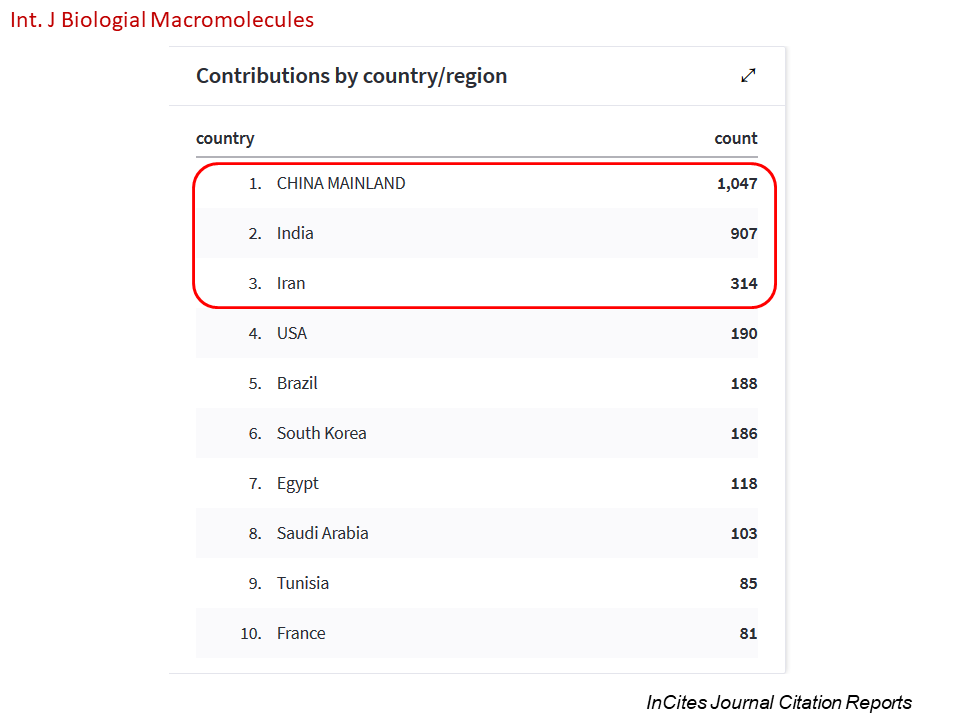

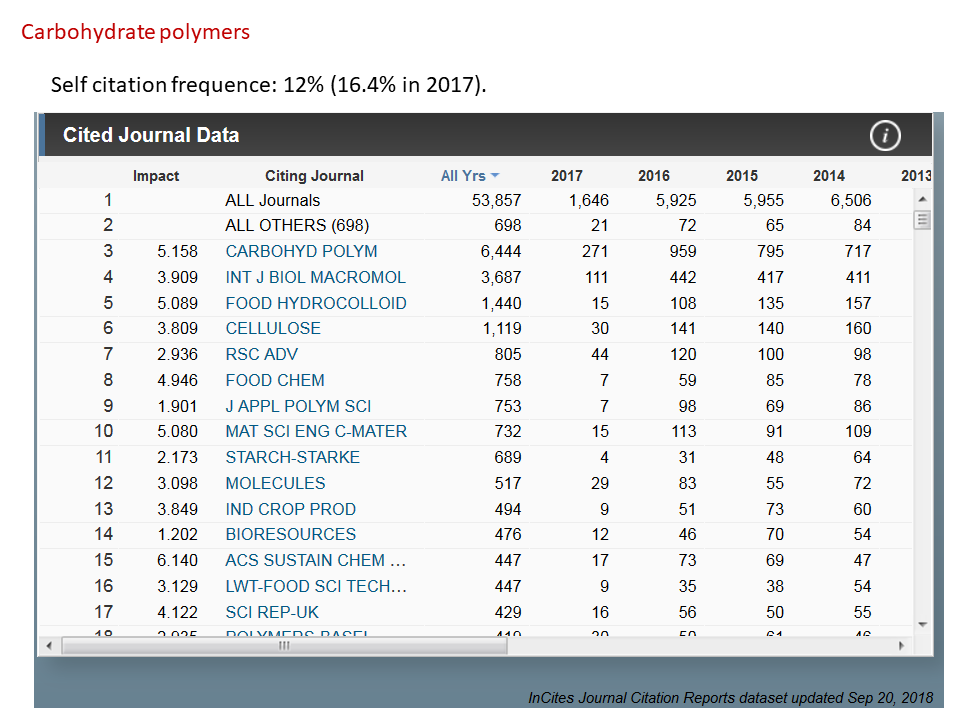

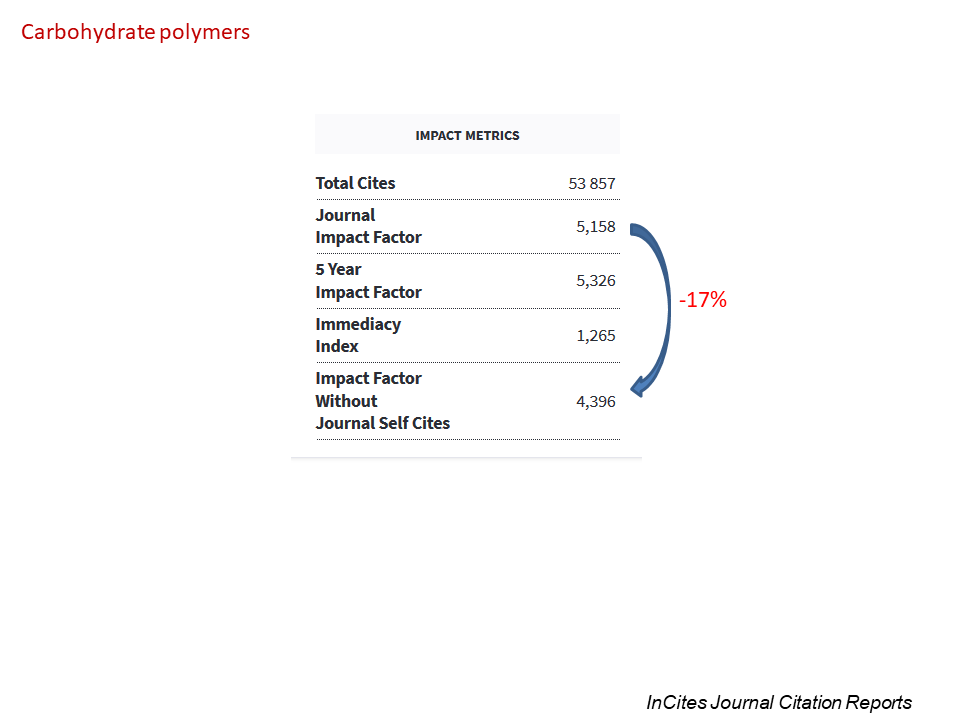

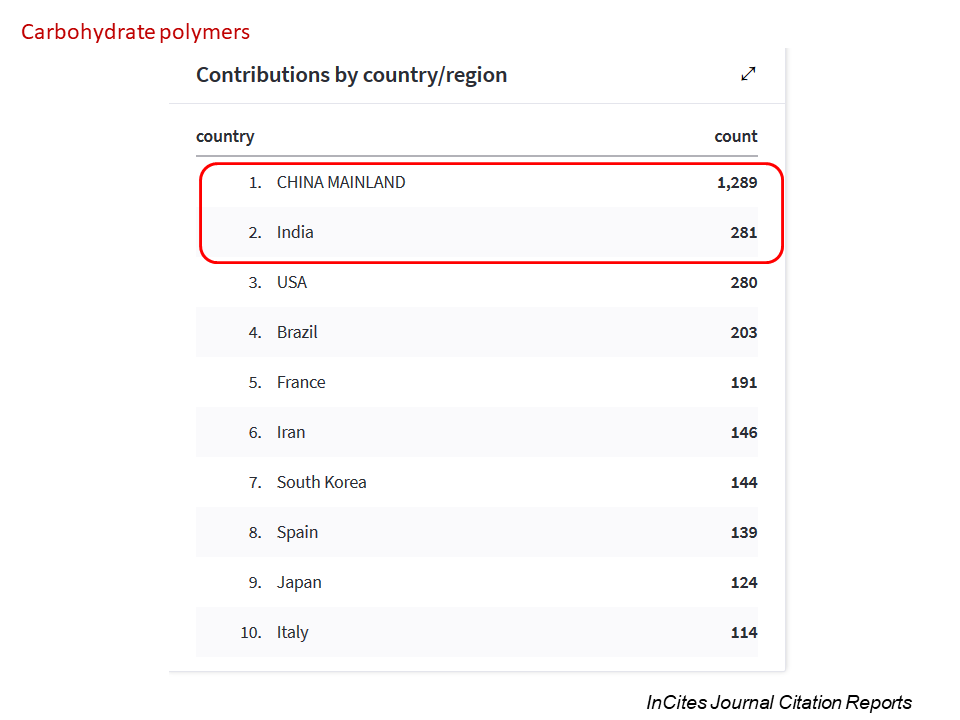

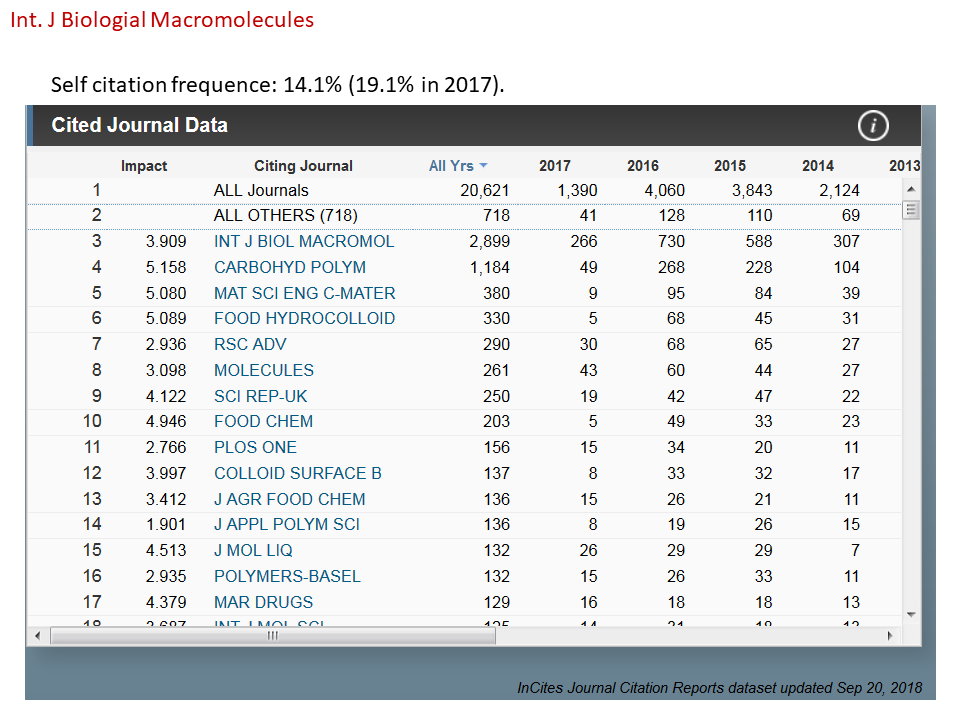

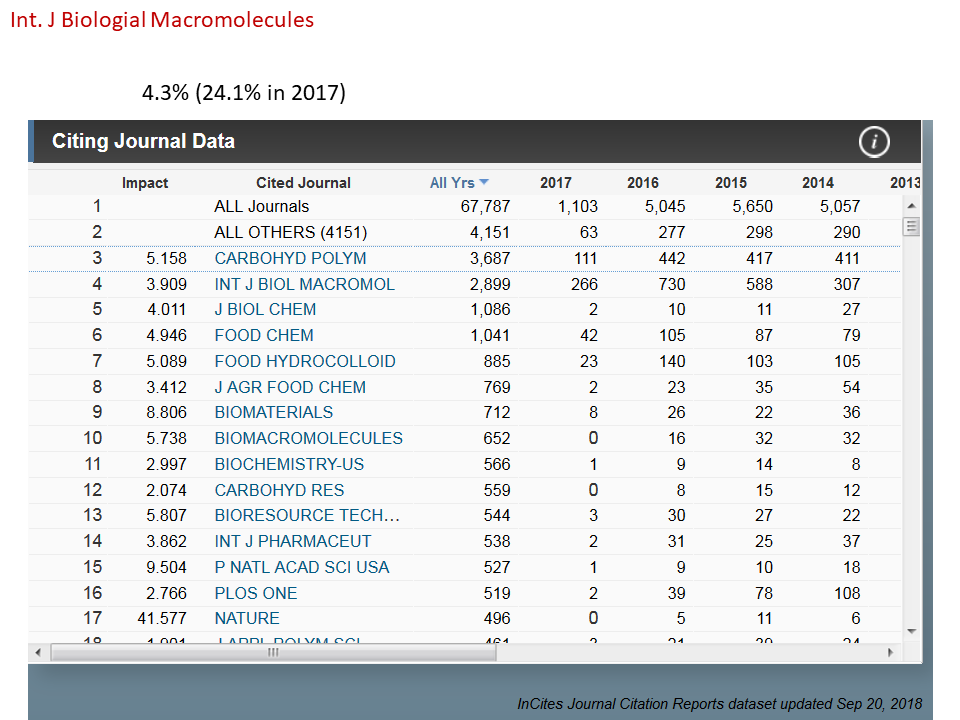

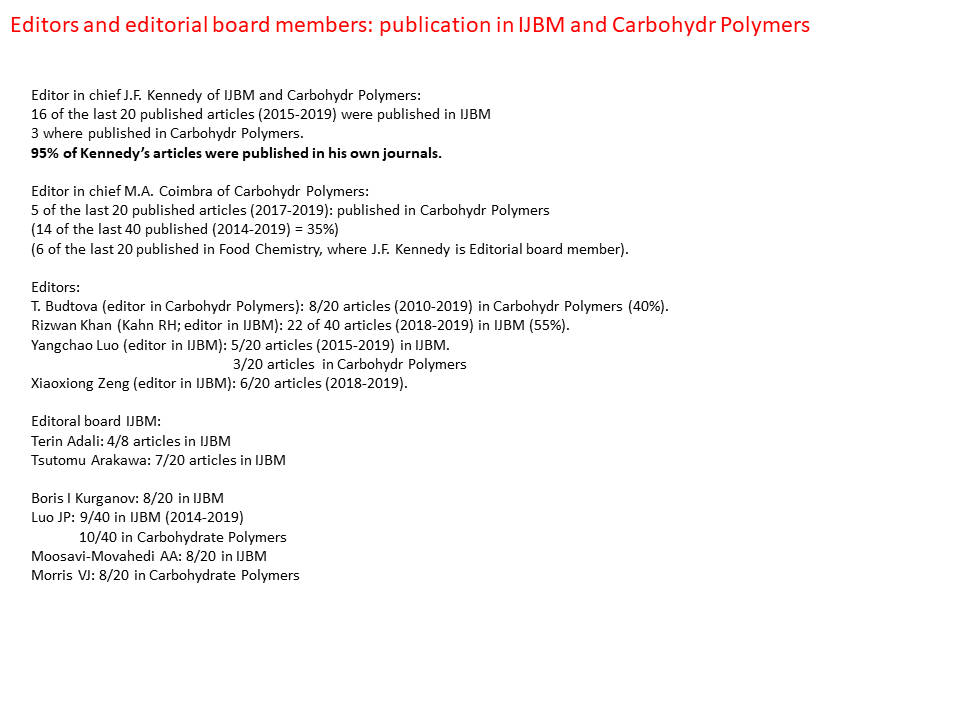

Some editors apparently use their journals as their own playground, where they publish with high frequency. John F. Kennedy, not the former US president but a businessman and Editor-in-Chief of the two Elsevier journals International Journal of Biological Macromolecules (IJBM) and Carbohydrate Polymers, can serve as a good example. The two journals share many similarities besides having the same Editor-in-Chief. The vast majority of contributing authors are from China, with India as the second most contributing country. Both journals have a high self-citation rate, and they cite each other at an unusually high frequency. This means that their relatively decent journal impact factors would be much lower without this collaboration. Kennedy figures as a frequent contributor in both journals. We are not talking about editorials, but original articles or reviews. As many as 16 of the last 20 published articles on which Kennedy figures as an author were published in IJBM (from 2015 to 2019). In addition, three of the articles were published in Carbohydrate Polymers. This means that 95% of Kennedy’s last 20 articles were published in the two journals where he is Editor-in-Chief!
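The share quoted above is simple arithmetic: 16 articles in IJBM plus 3 in Carbohydrate Polymers, out of the last 20, gives 19/20 = 95%. The same tally can be sketched in a few lines of Python. This is only an illustration of the counting, not the procedure Morty used; the function name `own_journal_share` and the placeholder label `"other journal"` for the one remaining article are assumptions made for the example.

```python
from collections import Counter


def own_journal_share(journals, own_journals):
    """Return the percentage of articles published in the editor's own journals.

    journals     -- one journal name per article (hypothetical input format)
    own_journals -- set of journals the person edits
    """
    if not journals:
        raise ValueError("need at least one article")
    counts = Counter(journals)
    own = sum(counts[name] for name in own_journals)
    return 100.0 * own / len(journals)


# Kennedy's last 20 articles as described in the text:
# 16 in IJBM, 3 in Carbohydrate Polymers, 1 elsewhere (label is a placeholder).
last_20 = ["IJBM"] * 16 + ["Carbohydrate Polymers"] * 3 + ["other journal"]

share = own_journal_share(last_20, {"IJBM", "Carbohydrate Polymers"})
print(f"{share:.0f}%")  # 95%
```

The percentages listed for other editors further down follow the same pattern: count the articles in the journal(s) a person edits and divide by the size of the window (the last 20 or 40 articles).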

You may have noticed the problem: some editors are so eager to publish that a large proportion of their publications end up in their own journal. This phenomenon seems to be common in many research fields, e.g. cancer research. Wafik S. El-Deiry, Editor-in-Chief of Cancer Biology & Therapy (CBT, published by Taylor & Francis), has got nearly a quarter of his articles published in his own journal since he started as Editor-in-Chief in 2001. Paul Dent, deputy Editor-in-Chief of CBT, is even more eager: half of his scientific production since 2017 has been published in CBT! But he has already got enough publicity at PubPeer together with his colleagues Steven Grant and Paul B. Fisher (editor and editorial board member of CBT, respectively). Another example is Marc E. Lippman, Editor-in-Chief of the Springer journal Breast Cancer Research and Treatment. He is a frequent contributor to his own journal, with 45% of his last 20 articles published there (since 2009). Moving over to polymer science, a research field closer to the focus of this story: Ann-Christine Albertsson, Editor-in-Chief of the ACS journal Biomacromolecules, has published 40% of her latest articles since 2014 in her own journal.

So what about the scientific quality? You would think that Editors-in-Chief should be good role models and present data of the highest standard. I’m afraid not. Most of the data is presented as opaque graphical illustrations without any raw data, which makes it more challenging to analyze the quality of the published data. However, by looking at the statistical analysis, or more correctly the lack of any statistical analysis, you are left with the impression that most of the results are scientifically worthless. Before I started at the university, I learned that a single measurement is equal to no measurement. In Kennedy’s articles, average values and standard deviations are conspicuous by their absence. In most of the articles, results from one measurement are shown (examples here), often without any explanation of the statistics. On top of the fact that most of the results are scientifically meaningless, the sloppiness of the graphical illustrations, where e.g. errors in the graph axes are frequent, makes it a complete disaster.

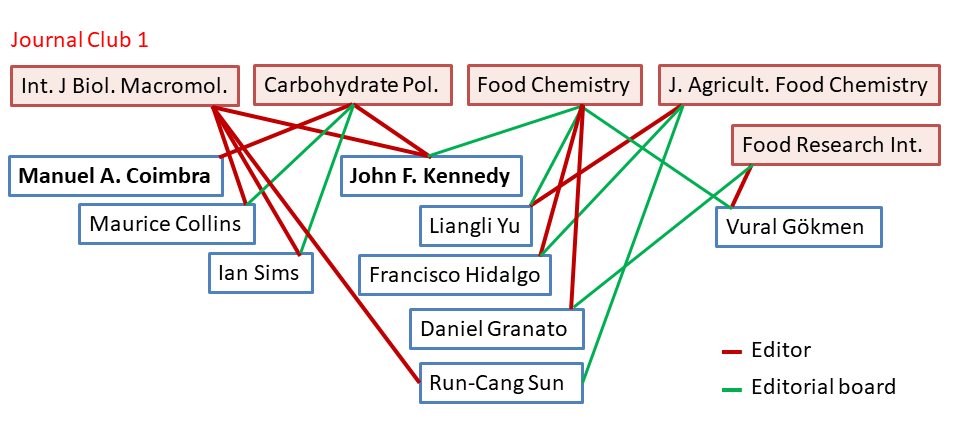

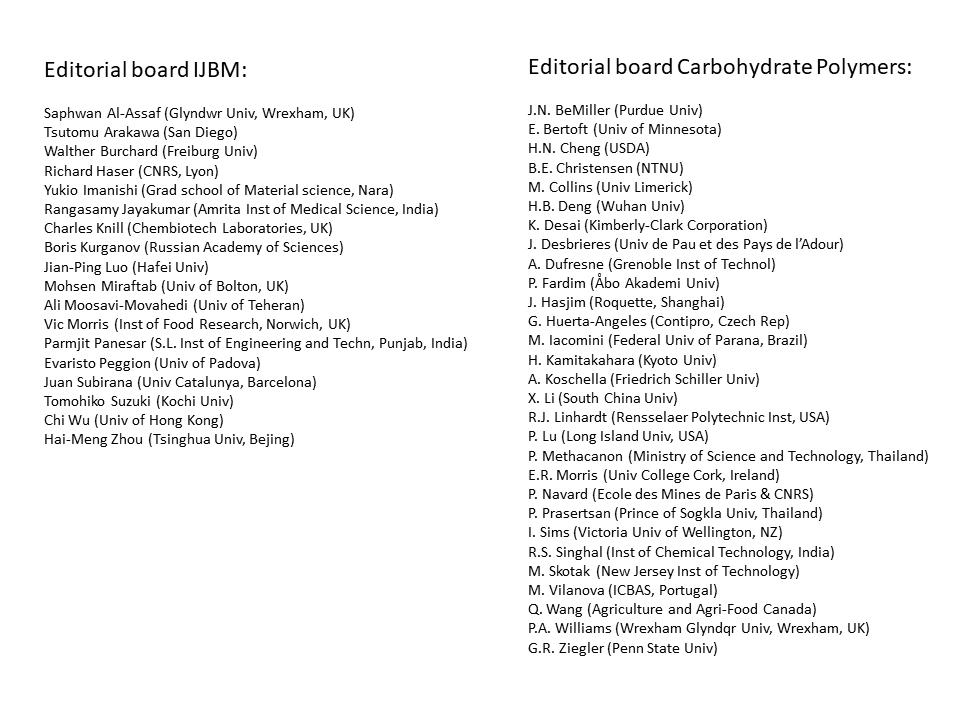

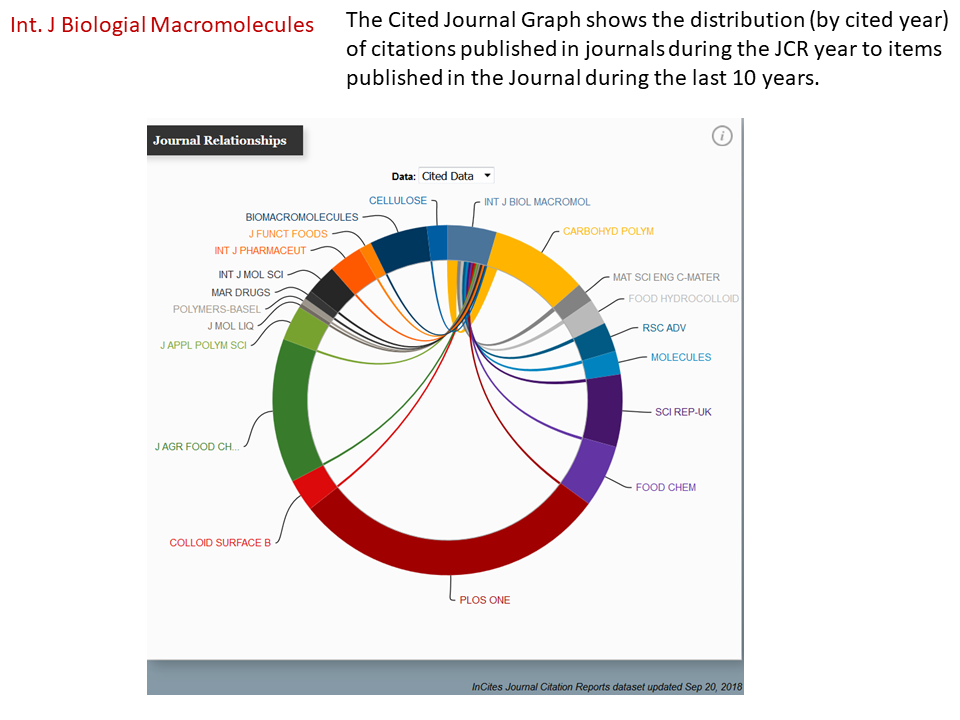

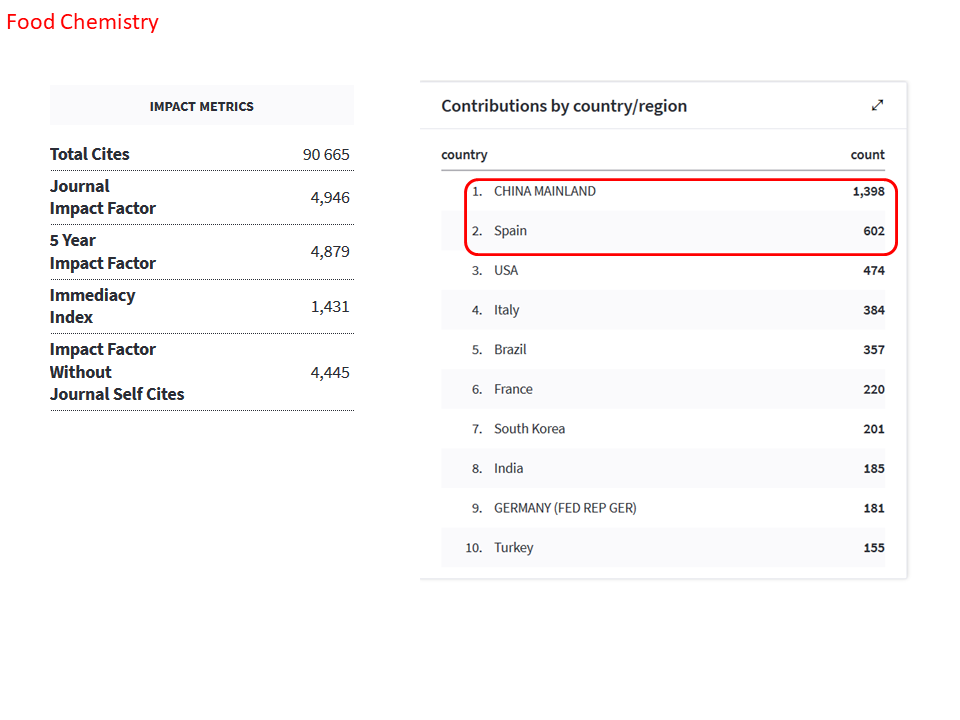

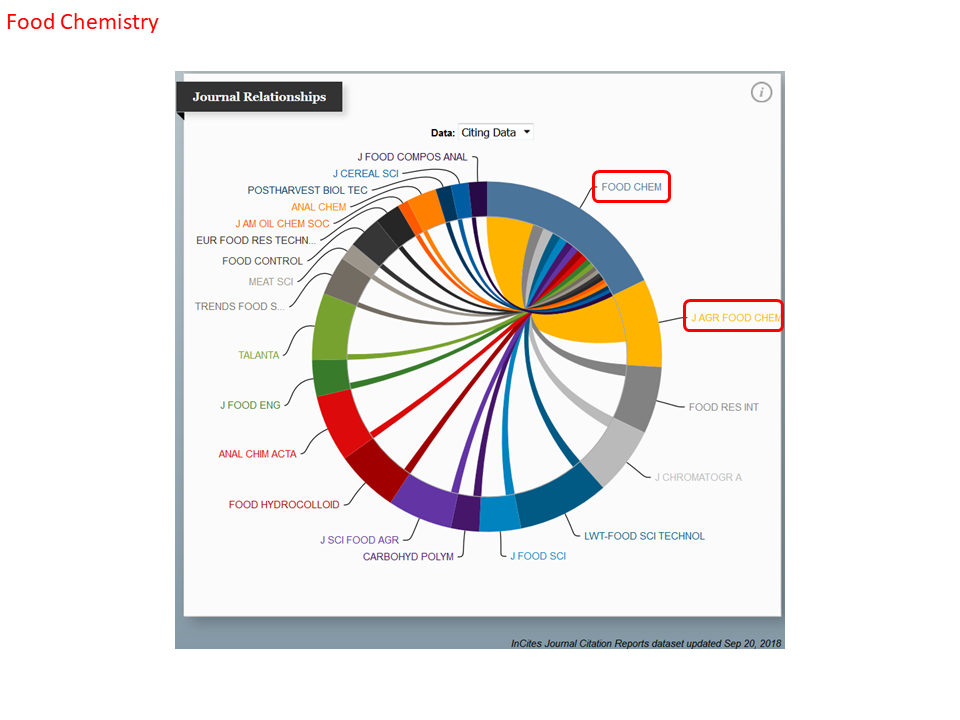

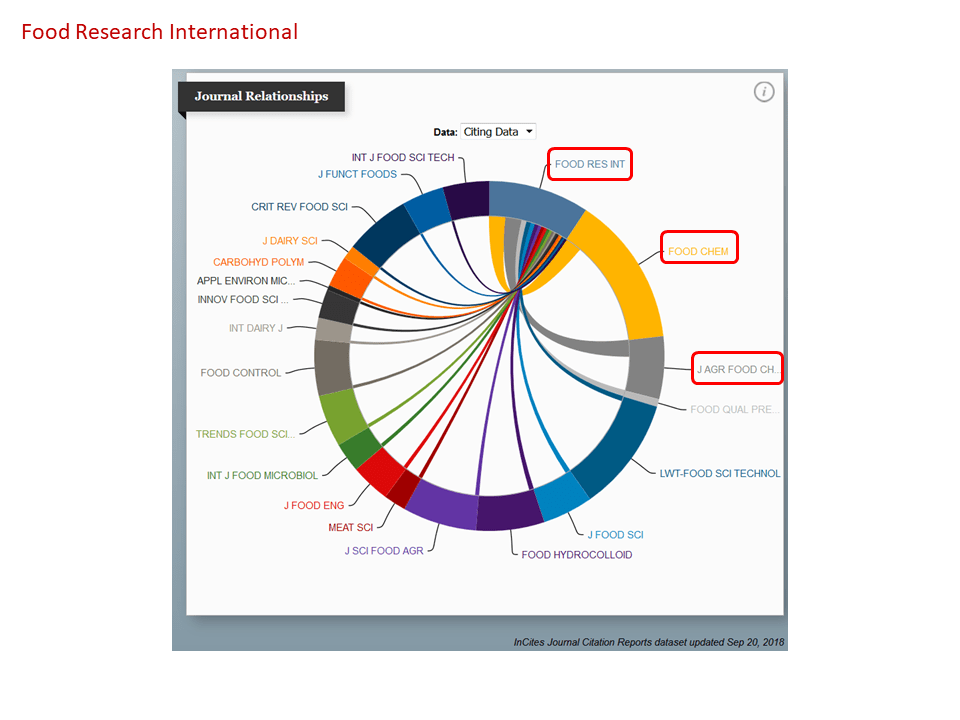

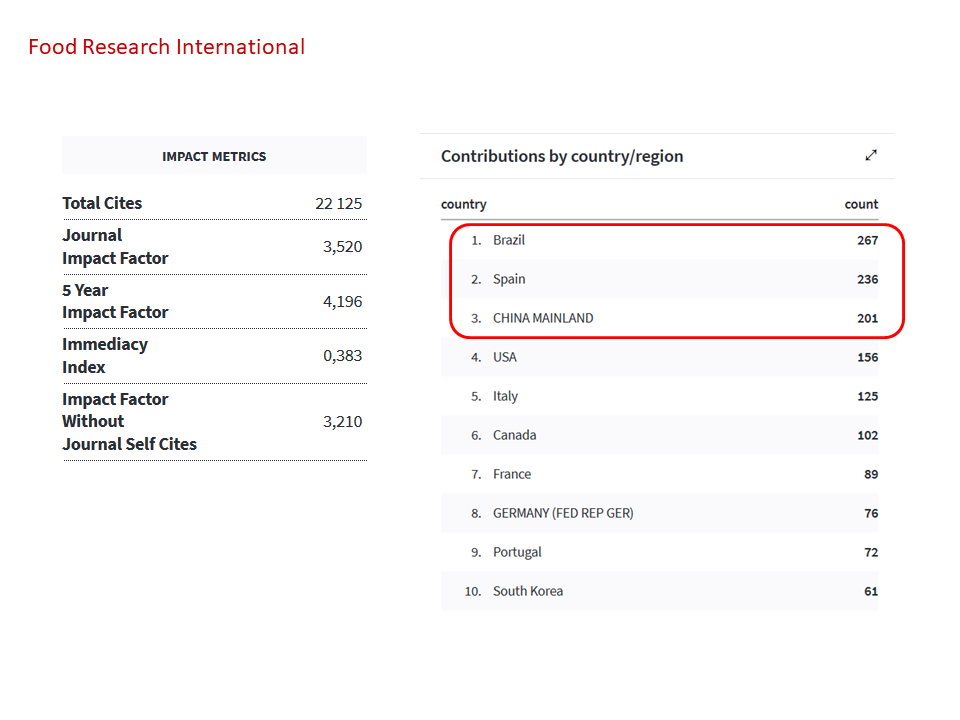

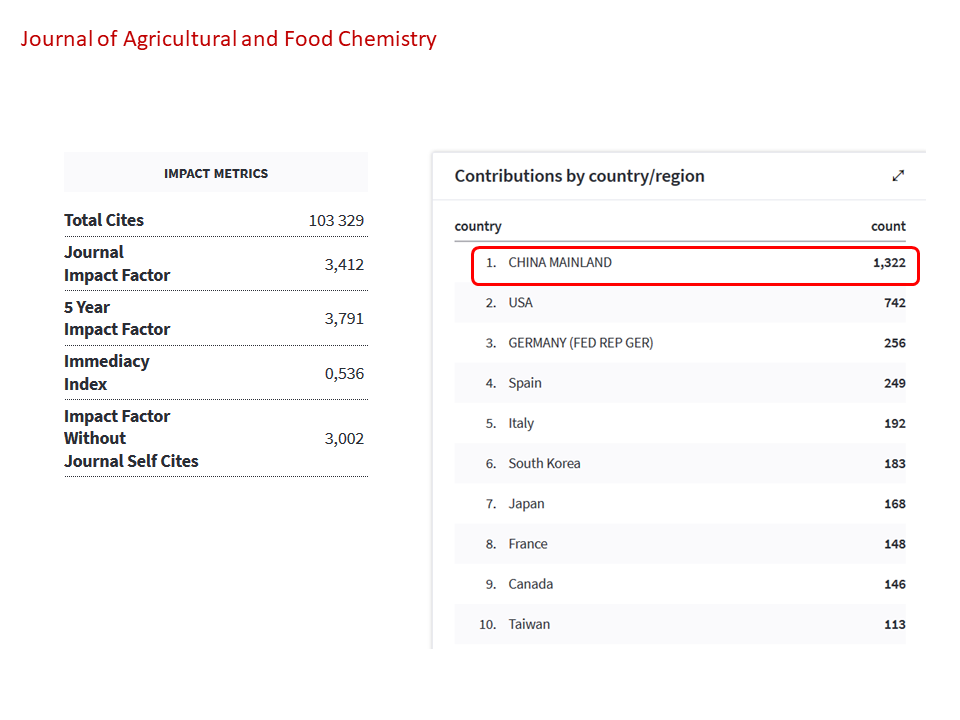

The second Editor-in-Chief of Carbohydrate Polymers, Manuel A. Coimbra, is also a frequent contributor to the journal: a quarter of the last twenty articles by Coimbra were published there. If you count the last forty it is even higher (35%). Interestingly, six of the last twenty articles by Coimbra were also published in the Elsevier journal Food Chemistry. I am not sure if it’s a coincidence, but his friend Kennedy is also a member of the editorial board of Food Chemistry. Friends like to play together, and that is clearly seen when studying the tight links between some of the journals, which share editors and editorial board members. Maurice Collins and Ian Sims, for example, are editors of IJBM, but they also figure as editorial board members of Carbohydrate Polymers. Francisco Hidalgo is an editor of Food Chemistry, in addition to being an editorial board member of the Journal of Agricultural and Food Chemistry, published by ACS. Another editor of the Journal of Agricultural and Food Chemistry, Liangli (Lucy) Yu, is also an editorial board member of Food Chemistry. Daniel Granato, an editor of Food Chemistry, also figures as an editorial board member of a fifth journal: Food Research International, published by Elsevier. An editor of this journal, Vural Gökmen, is also a member of the editorial board of Food Chemistry. To close the circle, an editor of IJBM, Run-Cang Sun, also figures as an editorial board member of the Journal of Agricultural and Food Chemistry. An overview of the “journal club” is seen here:

The collaboration between IJBM and Carbohydrate Polymers (CP) is an example of apparent cross-citation between friendly journals. Another example is the association between Food Chemistry and the Journal of Agricultural and Food Chemistry. Cross-citation can be an efficient way to boost the journal impact factor.

So what about the other editors of IJBM and Carbohydrate Polymers (CP) and their publication records? Many of them seem to have free vouchers for publication in these journals, and an unusually high proportion of their articles have been published in the mentioned journals:

- Rizwan H. Khan (editor in IJBM): 55% of the last forty articles (2018-2019) in IJBM.

- Tatiana Budtova (editor in CP): 40% of the last twenty articles (2014-2019) in CP.

- Yangchao Luo (editor in IJBM): 25% of the last twenty articles (2015-2019) in IJBM (40% in IJBM + CP).

- Kevin J. Edgar (editor in CP): 35% of the last twenty articles (2016-2019) in CP.

- Robert G Gilbert (editor in CP): 35% of the last twenty articles (2016-2019) in CP.

- Xiaxiong Zeng (editor in IJBM): 30% of the last twenty articles (2018-2019) in IJBM.

The editorial board members of IJBM and CP are of course also frequent contributors to these journals. For IJBM, six of the eighteen members of the editorial board were identified as having an unusually high publication frequency in IJBM and CP:

- Terin Adali: 57% of the last seven articles (2013-2019) in IJBM.

- Boris I. Kurganov: 40% of the last twenty articles in IJBM.

- Jian-Ping Luo: 23% of the last forty articles (2014-2019) in IJBM and 25% in CP.

- Ali A. Moosavi-Movahedi: 40% of the last twenty articles in IJBM.

- Victor J. Morris: 40% of the last twenty articles in IJBM.

- Tsutomu Arakawa: 35% of the last twenty in IJBM.

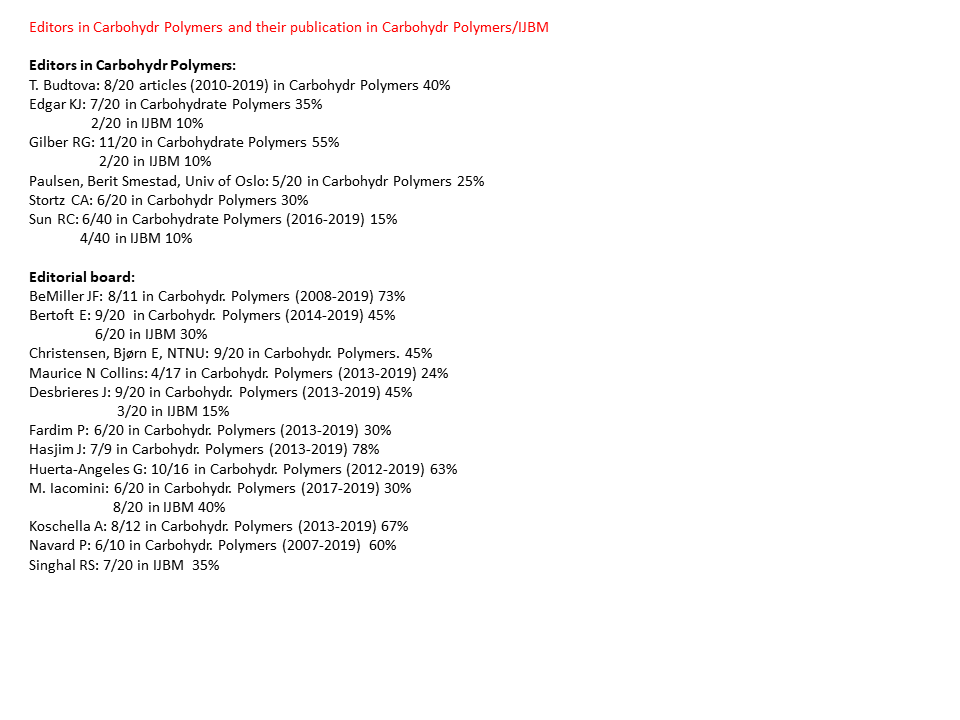

For Carbohydrate Polymers, eleven members of the editorial board showed a similar high contribution frequency in CP and IJBM:

- Jovin Hasjim: 78% of the last nine articles (2013-2019) in CP.

- James N. BeMiller: 73% of the last eleven articles (2008-2019) in CP.

- Andreas Koschella: 67% of the last twelve articles (2013-2019) in CP.

- Gloria Huerta-Angeles: 63% of the last 16 articles (2012-2019) in CP.

- Patrick Navard: 60% of the last ten articles (2007-2019) in CP.

- Eric Bertoft: 45% of the last 20 articles (2014-2019) in CP and 30% in IJBM.

- Bjørn E. Christensen: 45% of the last 20 articles (2013-2019) in CP.

- Jacques Desbrieres: 45% of the last 20 articles (2013-2019) in CP and 15% in IJBM.

- Rekha S. Singhal: 35% of the last 20 articles (2015-2019) in CP.

- Marcello Iacomini: 30% of the last 20 articles (2017-2019) in CP and 40% in IJBM.

Usually in research, where it has become more and more challenging to get manuscripts published in a decent journal if you don’t have the right contacts or cheat, you publish your results in a variety of journals. If a large proportion of a scientist’s papers ends up in one specific journal, you have reason to be suspicious. What is the journal’s peer-review policy, and are there any unhealthy connections that interfere with editorial decision making? After screening the articles from editors and editorial board members in IJBM and Carbohydrate Polymers, you may start to wonder how they were accepted for publication at all. Because there are a lot of trouble, all from sloppy errors, texts full of spelling errors, lack of controls and statistical information, to more serious business with apparently manipulation and falsification of data and frequent re-use of data. Here are some examples:

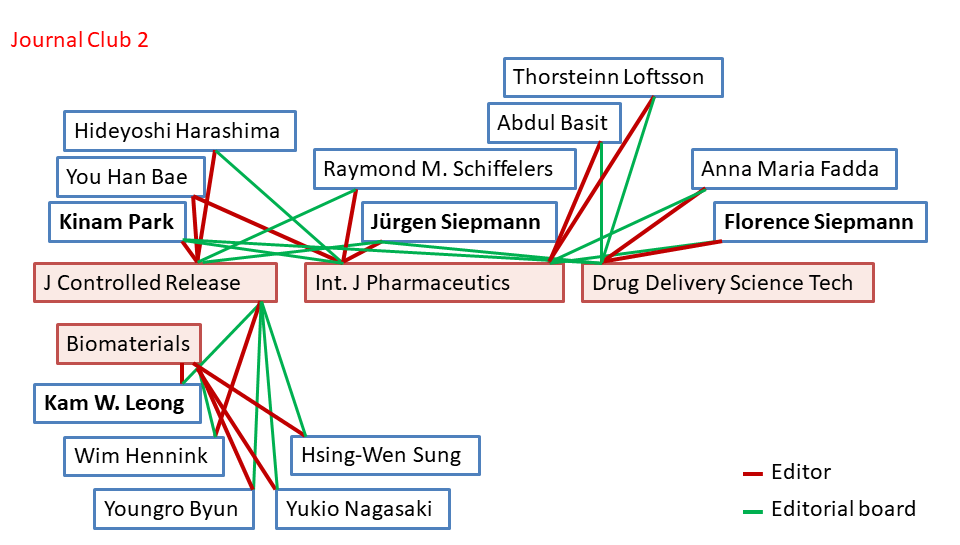

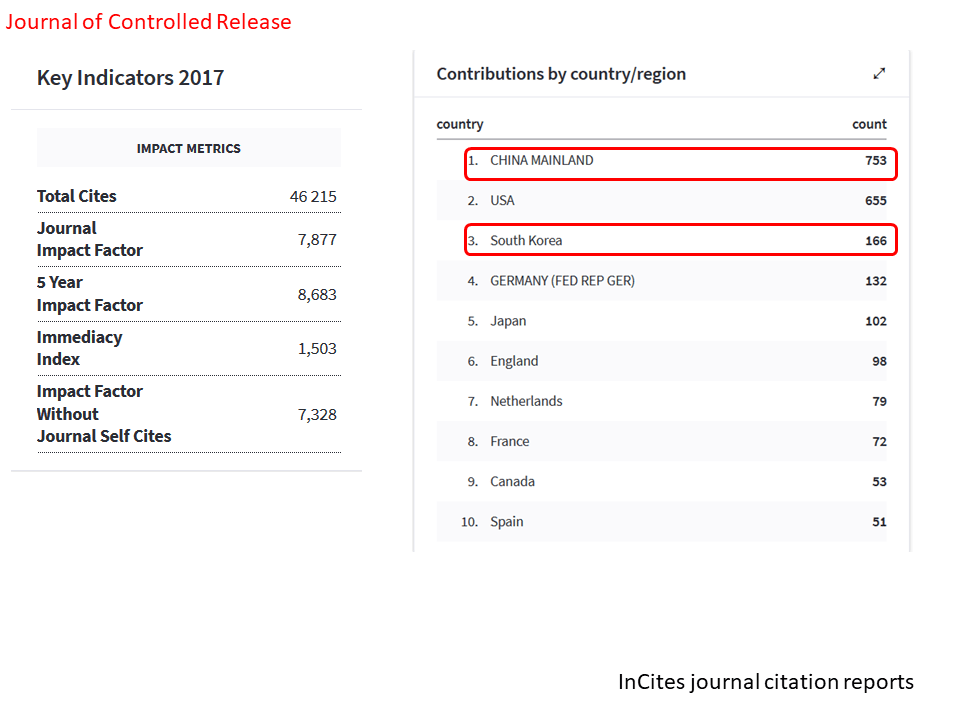

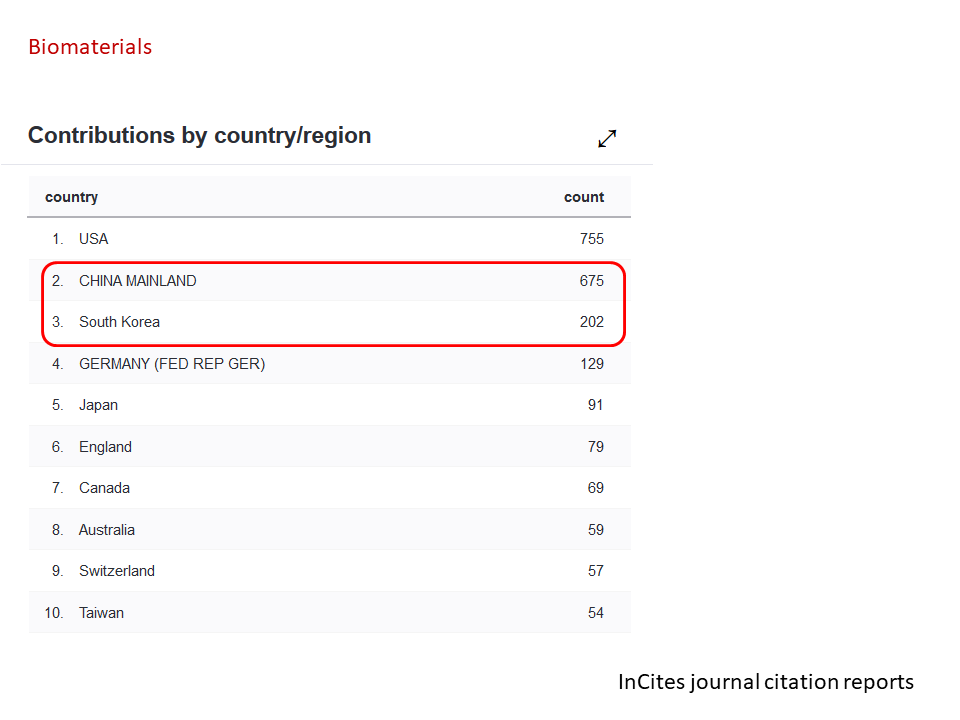

The journal club of IJBM, Carbohydrate Polymers and the other mentioned journals is most probably not unusual. When screening the journals and checking the editors’ publications, you may easily find similar examples of journal clubs. Let me give you one more example, again from a collection of journals published by the great Elsevier. Let’s call it Journal club 2. Journal of Controlled Release (JCR) and Biomaterials are, in comparison with the journals in Journal club 1, in a higher division. They are elite journals in their field, with impact factors between 8 and 9. As with IJBM and Carbohydrate Polymers, there is no doubt that there are close ties between them. Kam W. Leong, Editor-in-Chief of Biomaterials, also figures as an editorial board member of JCR. Furthermore, three Biomaterials editors (Youngro Byun, Yukio Nagasaki and Hsing-Wen Sung) are also editorial board members of JCR. In addition, JCR editor Wim Hennink is also an editorial board member of Biomaterials.

Here, too, China and South Korea are among the major contributing countries. For JCR, China is number one, with the USA and South Korea as the second and third most contributing countries, respectively. For Biomaterials, the USA is the most contributing country, but China and South Korea are in second and third place, and together they contribute more than the USA.

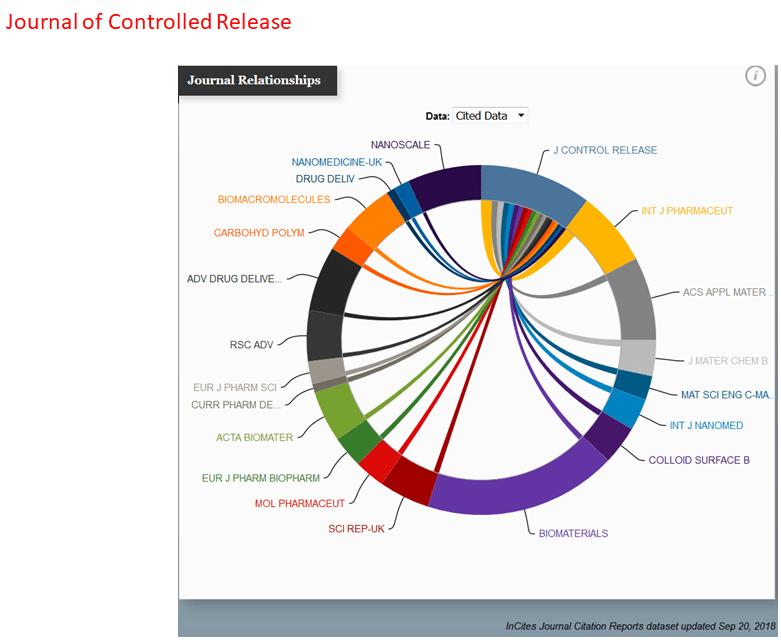

One may add two more journals to this journal club. The Editor-in-Chief of JCR, Kinam Park, is also an editorial board member of the two Elsevier journals International Journal of Pharmaceutics (IJP) and Journal of Drug Delivery Science and Technology (JDDST). JCR editor You Han Bae is also an editor of IJP, and Hideyoshi Harashima, another JCR editor, also figures on the editorial board of IJP. Jürgen Siepmann, Editor-in-Chief of IJP, is also a member of the JCR editorial board, together with another editor of IJP (Raymond M. Schiffelers). The collaboration between these journals can also be seen in the frequent citation between the journals (figure). The Editor-in-Chief of the last journal in the club, JDDST, is Florence Siepmann (also a member of the Jürgen Siepmann lab), and you may not be surprised that there is collaboration between JDDST and IJP. Florence Siepmann is also an editorial board member of IJP, together with another JDDST editor, Anna Maria Fadda. Furthermore, two editors of IJP, Abdul Basit and Thorsteinn Loftsson, are also members of the editorial board of JDDST.

In Journal club 2 the editors and editorial board members also show a high contribution frequency. Just a few examples this time:

Editors in JCR:

- Kinam Park (editor-in-chief): 47% of the last 15 articles (2017-2019) in JCR.

- You Han Bae: 35% of the last 20 articles (2015-2019) in JCR.

- Steven P. Schwendeman: 28% of the last 40 articles (2011-2019) in JCR.

- Ick Chan Kwon: 28% of the last 40 articles (2016-2019) in JCR.

- Hideyoshi Harashima: 25% of the last 20 articles (2018-2019) in JCR.

Editors in Biomaterials:

- Youngro Byun: 30% of the last 20 articles (2017-2019) in Biomaterials (+ 30% of the last 20 articles in JCR).

- Yukio Nagasaki: 30% of the last 20 articles (2016-2019) in JCR.

- Hsing-Wen Sung: 30% of the last 20 articles (2016-2019) in Biomaterials.

Editors in IJP:

- Jürgen Siepmann (editor-in-chief): 45% of the last 20 articles (2015-2019) in IJP.

- Abdul W. Basit: 45% of the last 20 articles (2018-2019) in IJP.

- Veronique Préat: 20% of the last 20 articles (2017-2019) in IJP + 25% in JCR.

- Fumiyoshi Yamashita: 20% of the last 20 articles (2015-2019) in IJP + 20% in JCR.

Editors in Journal of Drug Delivery Science and Technology (JDDST):

- Florence Siepmann (editor-in-chief): 35% of the last 20 articles (2015-2019) in IJP.

- Anna Maria Fadda: 45% of the last 20 articles (2017-2019) in IJP.

The collaboration between the journals in Journal club 2 is seen in the high contribution by many of the journal editors to more than one journal. There is also an example of apparent cross-citation between the friendly journals JCR and IJP.

So why do the editors publish so frequently in their own journals? One obvious reason is of course to improve their own publication record in order to generate research funding. Many of the editors have an impressive publication record which has generated a lot of public funds. There are editors who are usually the last authors of their publications. Good examples here are John F. Kennedy (IJBM/CP), Hsing-Wen Sung (Biomaterials) and Jürgen Siepmann (IJP). Other editors are in many cases not the last authors, which may suggest possible gift-authorship agreements. Examples here are You Han Bae (JCR/IJP) and Abdul W. Basit (IJP). Another driving force for frequent publication in their own journals is prestige. Some editors are eager to raise the journal impact factor, maybe too eager.

Elsevier controls a fleet of rat ships. They are filled with treasure, but without scientific value. Their captains do fulfill their duty: to carry the vessels to a Dutch harbor to unload the treasure. However, the infested ships are spreading a devastating plague which is now threatening the foundation of, and trust in, science all over the world.

The current editorial system works like a “censorship mesh”, as in Portugal or Spain during the dictatorships. The only goal of this system is to benefit certain groups of scientists, i.e. don’t publish the truth but publish “the truth”, or even the cheated results that are beneficial for keeping the fund mill rotating.

The rates of the editor publishing in the journal are quite suspicious, and the suggested quality of articles even more so.

However, the concept of citation clubs is not quite as clear. If there is a sub-field that has a number of dedicated journals, it is quite likely that they cite each other frequently and have many editors in common. It seems difficult to distinguish the healthy occurrences of this from the fraudulent ones by looking at citation counts and numbers of shared editors.

There was a time when fraud/cheating was thought to be rare, and everybody in science was surprised that it did not happen more often in light of how much benefit it gives to the cheaters.

My (provocative) hypothesis is that in the past, science was mostly done in western culture where never telling a lie, even if it means not “getting ahead” (making more money and living better), was extremely important. As more groups of people from different cultures enter scientific publishing, we are seeing more cheating. My hypothesis suggests that in these other cultures, telling lies is more acceptable, when it leads to a better life.

Again, this is not a race issue, but a culture issue. Some cultures are more likely to sacrifice truth if it means more prestige and money.

Alternatively:

1. Gaming citation metrics has grown in terms of benefits.

2. There used to be fewer people doing science, and thereby stronger social contact. Small villages know who the troublemakers are, large cities less so.

3. Western cultures have longer traditions in science, and maybe some of the traditions are good.

4. Some Western countries are fairly uncorrupted, which correlates with wealth of the nation, and (I might be wrong here) equality in wealth of citizens.

But the hypothesis would be interesting to investigate. Take data from PubPeer or Retraction Watch or such, control for some measure of corruption, and see how much nationality, country of education and country of residence matter. This would leave your explanation and explanations 2 and 3 as possible causes, plus any others neither of us has mentioned.

Do you read this blog? How many of the stories introduced in this blog are about white people born and raised in western countries? I would say 70% of them are about white people born and raised in western countries, and you see they are pretty good cheaters as well. So please close your mouth and don’t spread more racist nonsense. I think that’s enough from you. You are already pretty famous for being just a crank, and you don’t need to mark yourself as a miserable racist crank!

As I said earlier: this is NOT a race issue, but a culture issue. Hence, my hypothesis predicts that white or non-white people raised in western cultures where truth is paramount are less likely to commit fraud than the same white or non-white people raised in non-western cultures.

However, there is always the aberrant data point, like you, “Mike” (I presume you were raised in the western-tradition) who famously said (June 16, 2019):

“It’s not that easy…. sometimes faking data is the only way to survive for some people.”

sigh

Please keep posting Mike, you are absolutely delightful!

Yes, it is a fact that distinct cultures have distinct moral systems. Telling the truth is not a moral imperative in some of them. For instance, the Bhagavad Gita explains that the moral compass depends on the caste into which a person was born. For example, someone born into the caste of merchants should indeed lie in order to make a living (i.e. should cheat and mislead the consumers to get the product sold). Also, the world’s second largest religion (once rare in Europe, now becoming prevalent) states that it is not a shame to lie to those who do not belong to the same religion. Sorry, Leonid, maybe you will be in trouble because of my comment. Probably some readers from the fields of social studies and moral theology can confirm what I wrote.

About telling truth…..

It is not race or culture. It is a moral choice and individual choice. There are more morally bankrupt lying, thieving, cunning people in western culture than elsewhere (go to Wall Street in New York or Silicon Valley in California).

Does Boris Johnson, a representative of western culture and a highly educated man, tell only the truth?

I will give you a hint – he is a confident liar and rules a western country called GB.

The royals are big fans of tax havens and give him support.

That’s how western culture keeps making money.

World champion of self-citation: Rahul Hajare (India). All the references in all his papers are to his own work.

This is what happens when the journal editor does not care about checking content, or citations and their relevance to the content, or about sending it out for peer review. This type of copying and pasting random internet material into predatory journals is a novel case of plagiarism and scientific misconduct that is funny and tragic at the same time.

https://scholar.google.com/scholar?start=80&q=rahul+hajare&hl=en&as_sdt=0,22&as_ylo=2018

https://pubpeer.com/publications/7F8673782BB016456E8B8291332760

… this guy MUST be a troll just testing the predatory journals. There is no other way this can be. I mean:

“In Vitro, Widowed and Curse Words form Principal During Unplanned Meeting of the College in Private Pharmaceutical Institutions in Pune University India: An Attractive Study”

😀

He was fine until 2017, working as a postdoc under Ramesh Parangape, retired director of the National AIDS Research Institute (see ACKNOWLEDGEMENT). Something happened to him (maybe loss of job or trauma) and he started this nonsensical publishing in 2018. I am sure he is not paying for the article processing fees considering the huge amount of output.

“I am sure he is not paying for the article processing fees”

I looked at a few of the parasitical journals where Rahul Hajare was publishing and found him on the Editorial Board each time. So he signs up to swell their numbers of Editors, and gets a fee waiver in return.

To zmudzka: the moral choice of the individual is determined by social conditioning and the culture in which the individual has been raised. Regarding your example about the prevalence of fraud in business and innovation centers: for a proper comparison you would need a population of the same size from “non-Western” cultures, and a similar threshold for the definition of a “lie”. Now the second one is the problem. You do not know the degree to which lies have infiltrated everyday and professional life in some countries. Why, do you think, do some non-Western countries have banknotes with “do not support corruption” printed on them? Or why does academic dishonesty peak in some “non-Western” cultures? If a society has had a moral standard for thousands of years which legitimated lies, the lie is normal, not prosecuted, and not even noticed. Human beings are prone to lying for many reasons. However, a particular human being socialized in a lie-tolerant society will face problems in a lie-intolerant culture (and peers, colleagues and institutions will also face problems with a lie-conditioned fellow). While the intent of a lie may be similar in both cultures, in a society which states that lying is a sin, liars are seen by society as sinners, and telling lies is NOT considered normal. This is a huge difference between the two moral standards. If a researcher has grown up in a culture which states that “‘Satyameve Jayate’ = truth alone triumphs, [but] there is no contradiction in it when we say we are allowed to tell lies for the good of the humanity.”.

And one more thought to add: if there is a person with the socially conditioned moral standard which states “Tell the truth. Except in cases A, B, C and D.”, it is challenging for this person to work/live in synergy with another person who has the socially conditioned moral standard which states “Tell the truth. Period.”. And for the latter person it is frustrating too, since that person is unaware that there are cases A, B, C and D in which the other person is allowed to distort the truth. So, this conflict can be solved in three ways: 1. all scientists adopt the moral standard that lying is allowed for the good of humanity, 2. all scientists adopt the moral standard “do not lie and tell the truth”, 3. a new code of publication ethics is set up, which provides a list of cases where lying is allowed, to comply with the moral standard “Tell the truth. Except in cases A, B, C and D.”. So in this case everybody can understand that in cases A, B, C and D, the truth is a lie.

Japanese society and culture prides on honesty and telling truth. If you look at the retraction watch most retracted, Japan leads by a wide margin.

I think context is very important. In some cultures, some individuals in that culture may be more willing to sacrifice the truth to “save face” to authority. I wouldn’t be surprised if this happens in labs, where you have all, or almost all members of the lab, along with the lab head, from the same culture, and the members want to “save face” to the lab head.

Recently, at the U of Kentucky (USA) there was a case where two labs (run by Xianglin Shi and Zhuo Zhang) were shut down, where the lab heads and most of the people working for them were Chinese nationals (i.e., born in mainland China). The investigators found that in just about all of the papers they looked at, all of the original primary data was missing, even for figures published in 2019 (!). This also included clear fabrications and duplications of data, with a few retractions so far. During the investigation, the two lab heads fabricated “missing” data for the investigators. Again, this could be a case of “saving face” to authorities. The investigators concluded that this may be the tip of the iceberg with this group, as they have been extremely productive (if that is the right word; maybe better to use “prolific”) since they got to the U of Kentucky around 2006.

As pointed out in the Retraction Watch thread (https://retractionwatch.com/2019/08/23/university-of-kentucky-moves-to-fire-researchers-after-misconduct-finding/#comments), there are a large number of Chinese-run labs at the U of Kentucky that are closely associated with Shi, and their publications are starting to appear on PubPeer. Guess what they all have in common? These are all labs run by Chinese nationals, with mostly Chinese nationals in the lab generating data. Again, let me repeat: this is not a race issue, but, IMO, an example of a culture issue: people here could be trying to “save face” and lifestyle, even if it means sacrificing the truth.

Basic ethics are universal in all functioning human societies. It’s not like you needed Moses and his mate the Burning Shrub to tell people not to steal or murder.

The problem lies in the details. What is theft and what is just some Photoshop art which you need to advance your career and which you assume hurts no one? What is murder, and what is a “maverick” medical therapy which, oops, killed all your patients? Can you point me to one single country where such practices constitute an actual crime?

Zmudzka said: „Japanese society and culture prides on honesty and telling truth. If you look at the retraction watch most retracted, Japan leads by a wide margin.“

That is not generally true. I learned that in Japan it is frowned upon to be overly direct and tell the truth if it generates an uncomfortable situation. Famously, a „yes“ in Japan can mean „no“, depending on the situation.

Furthermore: there was a recent analysis of data from the „Retraction Watch Database“ in a press outlet (https://www.nzz.ch/wissenschaft/chinesische-forschung-wieso-so-oft-gefaelscht-wird-ld.1484509).

What it indicated was that the percentage of „problematic papers“ (relative to the total number of publications) was highest in Iran (+325%), followed by Taiwan (+147%), India (+119%), Italy (+50%), China (+27%), Korea (+23%), UK (+1%), USA (-19%), Japan (-28%), Germany (-41%). The numbers are relative to the overall incidence of „problematic papers“.

So, Japan does in fact not fare too badly.

@Leonid,

Here is the Danish law against scientific fraud and questionable research practices. Maybe it qualifies?

https://www.retsinformation.dk/Forms/R0710.aspx?id=188780

well, Denmark can apply it here: https://forbetterscience.com/2018/08/13/janine-erler-dossiers-which-erc-doesnt-want/

A small sampling of Rahul Hajare’s massive output in predatory journals includes:

- Cows die from an overdose of love

- Sensitivity and Specificity of the Nobel Prize Testing to the Dogs

- Abortion Patient Whose Family Thinks She is a Virgin

- Why Tall Individuals are More Prone to Cancer

- My Wife has Murdered Undocumented Immigrant

- Doggy Style Sex Distorts the Appearance of Face

- Why Non Naked Family Produce Ape Baby

- Obesity Aging Linked to Over Sex more to Get Alzheimer’s

This article is based on pure speculation… The writer makes many accusations about citation and publishing gangs and, more importantly, about the sloppiness of the science in the articles published in the accused journals, but never shows any solid evidence besides nonsense graphs, Google searches, the ranking of countries or possible connections between some researchers.

For example: “Because there are a lot of trouble, all from sloppy errors, texts full of spelling errors, lack of controls and statistical information, to more serious business with apparently manipulation and falsification of data and frequent re-use of data. Here are some examples:” I was waiting to see some evidence of “apparently manipulation and falsification of data”, but there is nothing about it besides the writer’s speculation. You said “Here are some examples:”, but where are those examples? I didn’t see them. You accused people but did not give any clear reason, so it’s defamatory.

That Editors in Chief publish mainly in their “own” journal is not very special. It raises eyebrows, though, if a considerable portion of the entire content is made up of articles in which the EiC is a (co)author. This is not special to Elsevier. The journal Clin Oral Implants Res (a Wiley publication) was founded in 1991 and its founding EiC remained in charge until June 2016. During that time, the EiC authored/coauthored 240 of 3540 articles, a stunning 6.59% of the entire content (just 2 editorials).

The former EiC has an even more stunning PubMed record, 732 hits (a few articles in the list seem not to be his), i.e. 34.3% of his articles were published in his “own” journal (or in the one he “owned” before).

https://www.ncbi.nlm.nih.gov/pubmed/?term=Lang+NP+AND+Clin+Oral+Implants+Res

„Usually in research, where it has become more and more challenging to get manuscripts published in a decent journal if you don’t have the right contacts or cheat, you publish your results in a variety of journals“

That is a hypothesis which is in many cases wrong, and because of that, many conclusions in this piece are also unjustified if they are generalized.

The scientific culture in which I grew up was such that a scientist had a somewhat fixed group of journals in which they would publish their results. Often they sent much of their standard work to the same journal. The quality was usually similar, and if something was not accepted, they sent it to a more specialized journal.

An editorial board member is usually expected to publish in „their“ journal; if they did not, that would be a bad sign for the quality of the journal.

People in more secure positions still prefer sending their output to journals they know, as opposed to going „hunting for impact factors“.

One personal example: I hate sending my work to RSC journals because they change the name of their journals too often. I do not think they are bad, but I prefer sending my work to a journal which I believe will be around for many years to come.

I also hate sending my work to Elsevier journals for other reasons (go figure).

It is certainly true that if a well-known author often publishes in one place, it might be easier for them to get their next paper in there, too. That does not necessarily indicate foul play.

The quality might be absolutely o.k., and their paper might stand a chance in a „higher impact factor journal“, but they simply don’t want to bother with another set of formatting guidelines, or risk that their paper is not read in their usual community.

I recently went through the publication list of a former mentor, and I noticed the journals where he published formed „clusters in time“, i.e., he often published several papers over 2-3 years in the same journal. My guess is: if the experience was o.k., and the formatting was still in his mind, or he gave the old manuscript as a template to the coworkers, then they used the same formatting and sent it to the same journal… (until they had a bad experience…?).

Re: “What it indicated was that the % of „problematic papers“ (relative to total number of publication) was highest in Iran, Taiwan, India, …” (from THAC)

When was the last time you read a science paper from Iran, Taiwan or India?

I am more concerned about research fraud in the leading scientific nations: the USA, Europe and Japan.

“Sure, editors are expected to publish their own work in the journal they edit”. I disagree. I have never been pressured by any publisher (or anybody else) to publish in a journal of which I was (associate) editor. Some journals have asked me to consider them, as a member of their editorial board, but with the understanding that the manuscript would undergo the normal review process. In fact, when I started “Genes, Brain and Behavior” (G2B, Wiley-Blackwell) my publisher discouraged me from publishing in the journal myself and asked me to similarly discourage any associate editors. The reason for this was that it looks bad for a new journal if only people connected with it submit papers; it’s a sign that others don’t trust it and that it might never get a healthy submission rate (and, hence, go under rapidly). As long as I edited G2B, I published a few editorials and some book reviews, but only 1 research paper (a short note; I knew that the journal reviewed fast and thoroughly and I needed the paper for a grant application). The paper was handled by an associate editor, outside of the manuscript submission system, so that I could not see who the reviewers were.

Publishing regularly in your own journal is bad for the journal and for yourself. Outsiders will not know whether your paper was handled with the appropriate rigor or whether you got an easy ride, so it doesn’t help your career much to publish in your own journal excessively (on the contrary, I’d say), and it is bad for the journal because it gives the impression that it cannot attract enough good papers so that the editors feel obliged to provide manuscripts.

Dear Leonid:

Your blog was brought to my attention.

Of the last 30 manuscripts I published, there was 1 original manuscript in Cancer Biology and Therapy that was a senior author paper and 1 co-author paper. That’s less than 10%.

Of the last 55 manuscripts, 2 were in Cancer Biology and Therapy and on 2 others I was a co-author. That’s less than 10%.

I was not involved in handling the review of such manuscripts.

I have minimized submissions to Cancer Biology and Therapy to avoid the appearance of conflict of interest.

I have 392 entries in PubMed (https://www.ncbi.nlm.nih.gov/pubmed?term=Deiry%20W%5BAuthor%5D&dispmax=200) and have published 53 original senior author papers in Cancer Biology and Therapy over nearly two decades of my career which is 13.5%.

There were 4 co-author papers, 6 editorials and 1 meeting report as well as 10 reviews in Cancer Biology and Therapy.

One review has been cited over 1000 times.

If the coauthor original papers are included, the number is 14.5%.

If the denominator includes papers since 2002 (293), the number for senior author papers in Cancer Biology and Therapy is 18%.
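The percentages quoted in this comment can be retraced with a few lines of Python. This is only a sketch of the arithmetic: the counts are the ones stated in the comment, while the function and variable names are illustrative assumptions and not part of any official tally.

```python
def share(part: int, total: int) -> float:
    """Return part/total as a percentage, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * part / total, 1)


# Counts as stated in the comment (names are hypothetical).
CAREER_TOTAL = 392          # all PubMed entries
SINCE_2002_TOTAL = 293      # PubMed entries since the journal started
SENIOR_AUTHOR_PAPERS = 53   # senior author papers in the journal
CO_AUTHOR_PAPERS = 4        # co-author papers in the journal

senior_vs_career = share(SENIOR_AUTHOR_PAPERS, CAREER_TOTAL)
with_coauthor_vs_career = share(SENIOR_AUTHOR_PAPERS + CO_AUTHOR_PAPERS, CAREER_TOTAL)
senior_vs_since_2002 = share(SENIOR_AUTHOR_PAPERS, SINCE_2002_TOTAL)

print(senior_vs_career)         # 13.5
print(with_coauthor_vs_career)  # 14.5
print(senior_vs_since_2002)     # 18.1
```

The result depends entirely on which papers are counted in the numerator and which in the denominator, which is the point the rest of the thread argues about.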

However, these statistics have changed due to the trends in recent years as described above where I have minimized submissions to the journal.

Thank you for your interest in our work that we hope has a positive impact on the field and helps bring new knowledge and treatments for patients with cancer.

Sincerely,

Wafik El-Deiry

Dear Wafik,

many thanks for your reply, and for following me on Twitter. To my shame, I missed that and thus have neglected to notify you of this guest post. I asked Morty to provide a reply, since he did this analysis, and we will see from there.

Leonid

Dear Leonid:

I should add that 9 of the 53 original manuscripts over the nearly two decades I mentioned are clinical case reports in the Bedside-to-Bench category and do not represent original research. They were intended for educational purposes.

W. El-Deiry

Dear Wafik El-Deiry,

A PubMed search retrieved 269 entries for you since 2001.

74 of these were published in Cancer Biology & Therapy. Among the 74 publications I found six editorials and one meeting report, which leaves 67/269 = 24.9%. I did not sort by senior authorship and co-authorship.
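As a rough check, the count above can be written out step by step. The numbers are taken from this comment; the variable names are assumptions made for illustration only.

```python
# Counts as stated in the comment (variable names are hypothetical).
in_journal = 74          # entries in Cancer Biology & Therapy
editorials = 6
meeting_reports = 1
entries_since_2001 = 269  # PubMed entries retrieved since 2001

# Editorials and the meeting report are subtracted from the numerator only.
counted = in_journal - editorials - meeting_reports
percentage = round(100 * counted / entries_since_2001, 1)

print(f"{counted}/{entries_since_2001} = {percentage}%")  # 67/269 = 24.9%
```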

Best wishes,

Morty

Dear Morty:

Here is a better link for my PubMed publications.

https://www.ncbi.nlm.nih.gov/pubmed?term=Deiry%20W%5BAuthor%5D&dispmax=200

It shows 313 publications since 2001.

But the journal started publishing in January 2002, and so the number is 293 since then.

Career publications in PubMed are at 392.

You did not subtract other material that is not original research as I mentioned in my comments.

By my count with the proper denominator, that would be 44/293 = 15%.

For co-author papers, those are coming from other groups based on different collaborations, and the decision as to where to submit is certainly beyond my control.

And you did not look at the trends I mentioned for recent years (last 55 publications), where the % has drastically gone down to <10% even if you include co-author publications, and <5% if you include only senior author papers. This is important because, while there is no guideline for this issue, these numbers would not attract the type of attention you are bringing to this.

There was never any promotion of self-citations and the journal has not asked authors to cite any articles from the journal or otherwise. We strive to abide by the high standards of publication ethics and to always improve our practices.

Best to move to greener pastures and pursue predatory journals or egregious offenses.

Sincerely,

W. El-Deiry

Dear Dr El-Deiry,

Thank you for answering here about this issue.

To obtain your numerator, you removed the co-authored papers, editorials, meeting reports, reviews and clinical case reports from the initial 74 to obtain 44. I think you should also remove such manuscripts from the 293 to obtain a meaningful denominator. Otherwise the ratio you provide does not make any sense.

In addition, I do not understand why co-authored papers should be removed as being “non original research”, and I would be grateful if you could explain it.

Best regards,

Shinia Honesta

A list of the most influential biomedical researchers shows that most of them are very prolific (several hundred papers each). This really sets a bad example for the next generation: that you have to publish a lot to be influential in the field. Leaders in the field should come up with some guidelines.

https://onlinelibrary.wiley.com/doi/full/10.1111/eci.12171

A few of those on the list have a number of retractions (Aggarwal, Croce).

Yet another hypothesis: in labs like the ones on that list, with a prolific and at least somewhat famous advisor, where a post-doc can get a few papers into highly cited journals, there is a greater chance of fraud than in less prolific labs with relatively unknown advisors.

The reason: post-docs who get into these labs know that all they need for a decent job (assistant professor) is a couple of first-author papers in highly ranked journals, so if your project is not working, there is a greater temptation to cheat.

Most of the “reviews” were “Journal Clubs” which were sent to this journal. Similar journal clubs we have submitted to F1000 in recent years were not included as they don’t show up in PubMed. So they were already not in the denominator. The co-authored papers were removed because I was not the submitter and did not make the decision as to where to submit them. In any event, their number is small. I have not written editorials in other journals, and there was only one meeting report.

W. El-Deiry

Thank you for providing more details.

Best,

In summary:

Science is not a show where you present magic results in magic expensive meetings.

Anything takes time to develop and will always be far from perfect. For example, ibuprofen, a very good drug, has drawbacks and took 12-14 years to develop.

I keep thinking that researchers should publish less and better, with trustworthy and original results, and that research funds should be more democratically distributed. No scientist or journal should have the status of a “star” and get more funding for that super status. “Superstars” are Madonna, Prince or Michael Jackson; these are the ones who should indeed be paid for their art.
