On the evening of December 5th, I participated in the OpenCon Satellite Event in Berlin. It was organised by Jon Tennant and Peter Grabitz; my travel was kindly subsidised by Stephanie Dawson on behalf of the publisher ScienceOpen.

First of all, I am glad that it is now understood that Open Access (OA) is not a final goal in itself, but the first key step towards reliable and transparent academic research. Open Science is about more than just open access to scientific literature. It is even more than the openness of published data. It is about the openness of the entire research process and of the researchers themselves. Academic research is riddled with back-room dealings and hidden conflicts of interest in peer review and in the evaluation of scientists, as well as with irreproducible published results, unacceptably widespread over-interpretation or even false interpretation of experimental data, and even misconduct. Opening up the scientific literature without changing what is actually being published, without addressing how science is performed and presented and how scientists are evaluated, could easily result in the OA revolution being hijacked by entirely the wrong people. The currently hotly debated issue of predatory publishing, and of the scientists involved in it, is just one example.

It is good, therefore, that Open Science meetings and workshops involving young scientists and activists are taking place.

At the Berlin meeting, we heard some brief talks and discussed the future of Open Science at length. First, Andreas Degkwitz, university library director at the Humboldt University of Berlin, expressed the key doubts that established scientists have about OA publishing: concerns about the quality of peer review, and the perception that OA journals are less respectable than the major, well-known subscription outlets. The latter is no longer the case, as journals such as PLOS Biology and eLife are perceived as rather selective and even elitist, similar to their traditional, non-OA competitors. However, his other concern is valid: a worryingly high number of OA journals run only low-quality or even sham peer review, and thus fall into the category of predatory journals. Of course, the plague of bad peer review is not the exclusive domain of OA journals, far from it. Yet publications placed with such dishonest publishers are eagerly used by dishonest scientists to pad their CVs and advance their careers at the expense of their honest and hard-working colleagues. Thus, we have to engage with both: predatory publishing (in OA and subscription journals alike) and predatory scientists.

This is what we discussed on this topic at the OpenCon Satellite Event in Berlin. I first suggested imposing more transparency on scientific publishing, thus forcing predatory publishers either to retreat or to be exposed for their lack of proper peer review. As I then learned, transparency is also urgently needed in building new criteria for scientists’ reputations. Only when enough scientists engage in science transparency and post-publication peer review will the problem of predatory publishers become obsolete. Not only that: scientific irreproducibility and irresponsibility might also become rarer or even fade into insignificance. How, then, can scientists be convinced to engage in open science activities? By rewarding this engagement when scientists are evaluated, instead of obsessing over journal impact factors and sheer publication numbers.

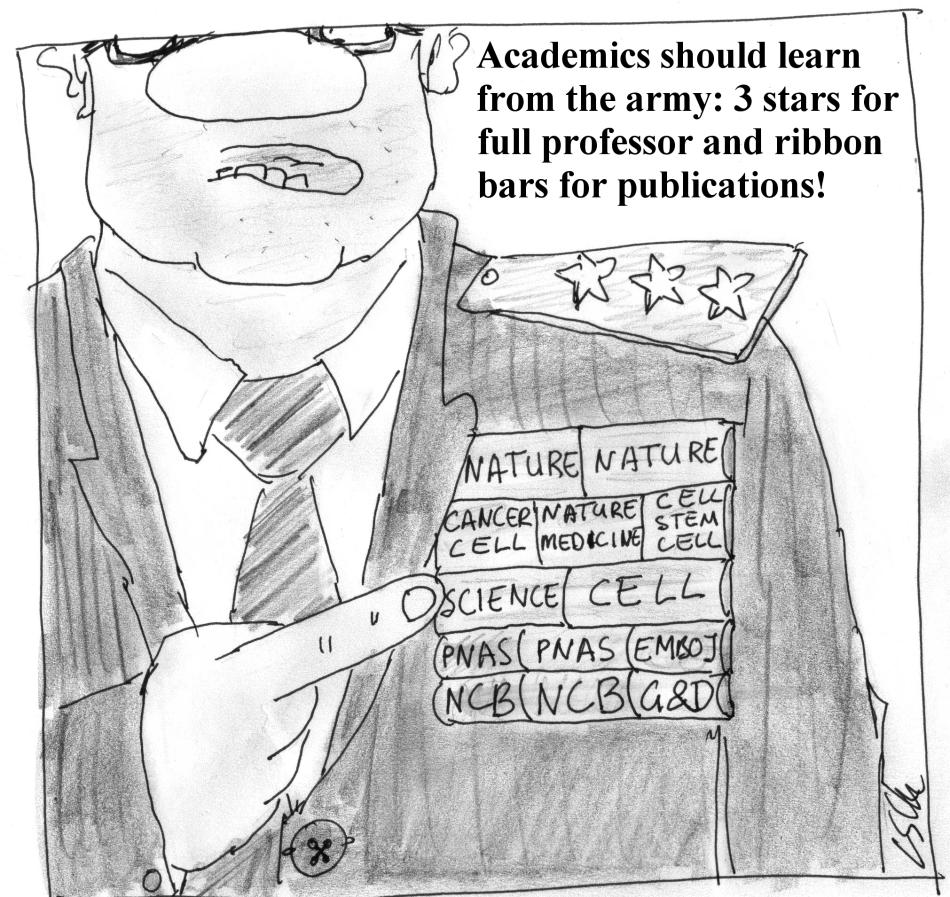

Then, how should scientists be evaluated, if journal impact factor, publication number and even citation index are not reliable read-outs? How can junior researchers prove their qualifications and build their reputation while they are still waiting for their first publications to appear?

Of course, the traditional, century-old method of personal networking is critical to advancing one’s career in academia. But the internet and the many new concepts of scientific publishing, post-publication peer review and evaluation open new doors to PhD students and postdocs, whose contact with the wider academic world has so far been tightly restricted and subject to the whims of their advisors.

This is what junior researchers should do: become active!

- Engage in post-publication peer review, and do it openly, under your own name, so you can also get credit for it. Many journals, especially PLOS, allow commenting. Aggregator websites such as PubMed Commons and ScienceOpen allow commenting on any published paper.

- Submit your own thorough peer review report on published literature to Publons or another appropriate outlet. Do it for any noteworthy paper you read or discussed in your group’s journal club. Share both appreciation and criticism of the published research.

- Try to engage the paper’s authors in online as well as offline discussions. Do not be afraid of their seniority or publication record. It’s your objective scientific arguments that count, not your personal status in the academic hierarchy.

- Your comments and peer reviews will be available to everyone on the internet (often even with a digital object identifier, or DOI), including your potential employers and mentors. You can therefore link directly to your peer review activities in your CV.

- There is nothing to be afraid of. Some senior scientists will surely be dismayed or even angry at your insolence, but as long as you stick to the facts and academic discussion, so should they, regardless of who raises the issue. Besides, are you sure you want people who judge scientific merit solely on hierarchy and impact factor as your mentors and employers? Those who value such engagement, on the other hand, will be positively impressed by your initiative and competence.

This is what senior researchers should do: become open!

- Open post-publication peer review should become one of several new criteria for the evaluation of senior scientists. Also, how do you expect your PhD students to engage in it if your tenured self is afraid of the wrath of your influential peers? Your contribution to post-publication peer review is needed for more than your professional expertise. You are required to be a role model!

- Publish your official peer reviewer reports. It is no longer a breach of confidentiality once the paper you have been reviewing is published. Don’t worry about the opinions of the journals and their publishers; they have no ownership of or copyright on your peer review report. The worst they can do is ban you as a reviewer. Their loss, not yours.

- Thus, make open peer review a precondition for your participation as a reviewer. You are the sole intellectual owner of your peer review and its report. If you post it on Publons or elsewhere, you do a great service to science while presenting yourself as an honest and fair peer reviewer, a quality much valued in a scientist.

- Share your data! This applies to all researchers, young and old. Keep an open online lab book wherever possible. Invite readers to comment and share advice and ideas.

- Publish preprints of all your manuscripts, for example on bioRxiv, to avoid getting scooped and to gather opinions and reviews from your peers and keen junior scientists.

- Post your research data as figures on Figshare, both preliminary results and follow-ups to your already published work.

- Engage in reproducibility studies, and motivate your junior scientists to participate eagerly. Publishing replication studies, both failed and successful ones, is not a waste of time and money; in fact, it can even be genuinely novel research if you employ new methods and certain controls that were omitted in the original study. Most researchers first have to replicate the published results of others before starting their own research projects based on these findings. Sometimes, when things do not seem to work out, scientists contact the authors with their questions. Sometimes these authors reply, and the matter can be resolved when additional protocol details or crucial advice are shared. Too often, though, scientists reluctantly accept that certain published data are not reproducible and move on. Normally, all these failed replication attempts end up buried in desk drawers and on computer hard drives. They should not.

- Finally, outreach promoting science to students and the wider public should also be a duty of a good scientist. Blogging and contributing science-related articles to mass media can even be done from your own desk, without leaving your office.

The challenge now, as we agreed in Berlin, is to make the decision-makers understand the value of Open Science. Faculties, funders and politicians should learn to reward those researchers who contribute to science transparency and keep their own research open. On the other hand, recruitment panels and funding bodies should give minus points to those scientists who do not, regardless of the impact factor of their publications. After all, whose papers would you trust more?

This is what Open Science means; an EXCELLENT blog indeed.

You might want to have a thorough look at this new, global and consistent solution that implements the open science spirit at every level: http://www.sjscience.org (concept explained in detail at http://www.sjscience.org/article?id=46).

A short comment about an essential point that is not tackled in this post: scientists do not adopt open practices because of the way they are evaluated, i.e. through the use of the impact factor, which is itself a closed and private criterion (as well as a scientifically irrelevant one). In the current logic, the goal of a scientist is to please only an editor, not his peers, and that’s why he has an interest in not being open. The dynamics of openness you suggest can spread beyond the small community of Open Science advocates only if they give rise to an evaluation criterion that rewards openness and transparency (and penalizes secrecy). This evaluation criterion must be thorough and open (i.e. the power to evaluate must not become concentrated in a few hands again). This is the missing key to open science, and sjscience.org is today the first and only paradigm that proposes such an evaluation criterion. You can also have a look at this conference talk: http://www.dailymotion.com/video/x3hraac_the-self-journal-of-science-un-nouvelle-logique-ouverte-d-editorialisation-et-d-evaluation-michael-b_school

Hope you will want to discuss it further; this is a huge topic.

Hi Michaël, many thanks for your comment and sharing the information about the Self-Journal of Science!

I am sorry I did not make myself clear enough, because I actually fully share your views above. If you have a look at my last paragraph, you will notice I was advocating a re-evaluation of how scientists are evaluated. In my opinion, it is up to funders and faculties to make open data and open science a key prerequisite for funding or tenure.

Once this happens, journals and their editors will have no choice but to change their stance, otherwise they would risk losing authors. We already see this happening with Open Access, but for some reason funders, universities and the wider scientific community are less keen on moving beyond OA and towards Open Science (as I outlined here: https://forbetterscience.wordpress.com/2015/12/24/berlin12-closed-society-at-an-open-access-conference/).

At the same time, some journals prefer the illusion of transparency to the true thing: https://forbetterscience.wordpress.com/2015/12/17/optionally-transparent-peer-review-a-major-step-forward-but-which-direction/

I also proposed some time ago a method to make institutional misconduct investigations more transparent (or actually, make them happen at all): http://retractionwatch.com/2015/01/19/universities-agree-refund-grants-whenever-retraction/

Dear Leonid, thx for your quick reply and sorry for missing your last paragraph which indeed mentions this essential feature. Here I share with you more of my views in reaction to your reply:

– I do not believe in a real top-down change of the system. Too few of those at the top under the current rules would want them to change to the radical extent that Open Science requires. Moreover, when very big decisions like this depend on only a few people, this is a very favorable playground for private interests that know how to play their cards to block any evolution. I have seen financial reports stating that “the market” now sees OA as a growth opportunity and that Elsevier plans to make more money by publishing more (i.e. less selectivity and a decrease in scientific quality) and earning more from developing countries. I bet this is the corrupted OA we’ll get if we let our representatives decide.

– I rather advocate a bottom-up change. Another way to create value must be found and powered by the whole scientific community. It must have its own incentives so that it can spread beyond the small community of Open Science advocates. It must be compatible with current systems, since people still need to play the “impact factor game” until this new way becomes strong enough to oblige institutions to recognize it and to collapse the traditional system. This is what the Self-Journal of Science wants to achieve, by finding a new evaluation system that has the right properties.

Very, very briefly: a good article is not one that manages to convince some guy who happens to be a member of the editorial board of a high-IF journal, but one that convinces most of the community. The evaluation must therefore be communal. You may think of Facebook’s “like” system or Twitter’s “Retweet”, but of course this is not enough; we know the kind of things that are promoted by pure popularity contests. SJS’ idea is to self-regulate this by also incentivizing evaluators to be seen as good evaluators, so that they apply real scientific judgement to the material they promote. The evaluation system is therefore as follows: each scientist has the autonomous means to curate any article in his own “self-journal”, a structured selection in which he can express his vision and point out what he believes to be important (e.g. http://www.sjscience.org/memberPage?uId=90&jId=6#journal). It is very useful to the curator’s peers, and they will definitely read the self-journal if it is interesting enough. The curator therefore has an interest in doing it, and in doing it well. In this system, the importance of an article is reflected by its number of curators. This curation-based system can immediately apply to the whole scientific literature, irrespective of how closed its legal owners want it to be.

Open science advocates could start maintaining their own self-journals; then other people would start doing it too, once they have understood how useful it is by reading others’ self-journals; then it would become clearer that it performs better than the impact factor; then some institution would recognize it, and a chain reaction would follow.

In this model, open science is driven by scientists’ individual interest and unfolds naturally, which I think is (unfortunately) more efficient than ethical statements or legal obligations.

Very interesting approach about self-publishing and self-curating, Michaël!

But my suggestion towards policy change from the side of funders and universities is not about hoping for those in power to change their minds, quite the opposite. Please remember who stands above every big scientist, university or funding agency: the general public, which pays taxes and votes for the politicians in charge of research and its funding.

The current system is dependent on secrecy, private networks and backroom dealings. What it fears most is seeing its dirty laundry washed in the open. Transparency will be the end of it: no politician will want to support a rotten system that voters despise. The key task for scientists will be to engage the public and politicians and present them with the alternatives in science publishing, evaluation and funding.

It is the task of every decent and honest scientist to expose bad research through open, signed post-publication peer review (PPPR), and to reach out to the public and to politicians.

At the same time, science journalists should stop grovelling to their paymasters and do what their journalism colleagues elsewhere do: investigate!

Then I do agree. We need the pressure that comes from the general public, because the internal balance of power in academia really works against quality science (i.e. community-wide peer review, openness, transparency, evaluation based on quality rather than impact, long processes) by making the personal interests of scientists diverge from the general interest of science.

Science journalists have an important role to play in achieving this. However, my impression is that they just report examples of the many flaws of the traditional system and draw a picture in which the villain is Elsevier et al. Though Elsevier has its share of wrongdoing, it is in fact just the one that benefits most from a big mistake made first by academia: institutions basically privatized the publishing process by handing the evaluation of science over to private players (the journals). If the same system of journals were run entirely by public institutions, all the scientific problems would remain. The way science is evaluated (i.e. the way it is funded) is what determines in practice what scientists can, should and shouldn’t do. I wish journalists would more often point to the mode of evaluation of science as the real origin of the problem, and I hope that eventually SJS’ unique and costless approach will be noticed 🙂. Otherwise, people will just get angry, and politicians will hit scientists harder instead of trying to understand the flawed systemic logic that makes everything fail.

Michaël Bon:

“[. . .]

[. . .] a good article is [. . .] one that convinces most of the community. [. . .]

[. . .]”

Whether or not an article is convincing does not depend on whether or not this article is good.
